DeepSeek-V3 vs GPT-4o and Llama 3.3 70B: The Strongest AI Model Revealed

The evolution of AI language models has set new standards, especially in coding and programming. Leading the charge are DeepSeek-V3, GPT-4o, and Llama 3.3 70B, each offering distinct advantages. In this blog, we compare the three models, focusing on their architectures, parameters, coding capabilities, and practical use cases. Through a detailed analysis of DeepSeek-V3 vs GPT-4o vs Llama 3.3 70B, we will work out which model is best suited for programming tasks and how these advancements are shaping AI in 2025.

Table of Contents

- Model Architectures and Design

- DeepSeek-V3

- GPT-4o

- Llama 3.3 70B

- DeepSeek-V3 vs GPT-4o vs Llama 3.3 70B: Model Evaluation

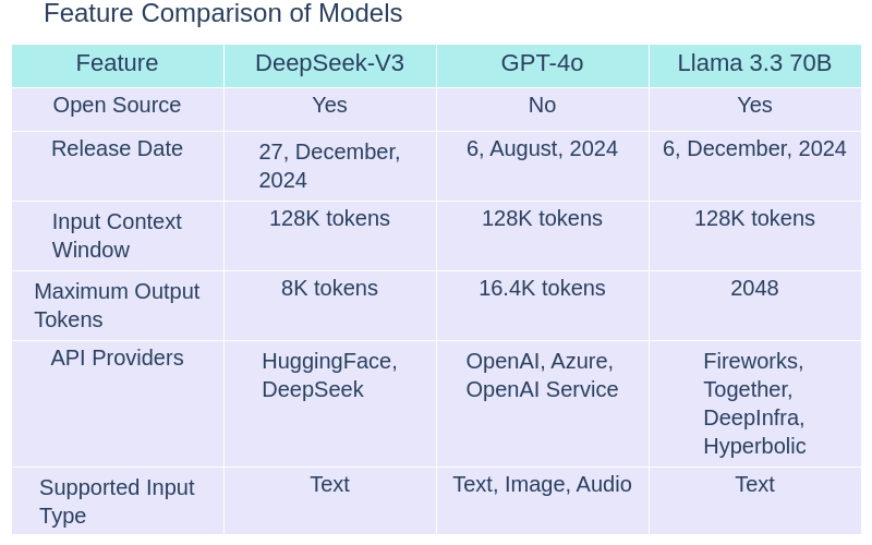

- 1. Model Overview

- 2. Pricing Comparison

- 3. Benchmark Comparison

- Comparison Insights

- DeepSeek-V3 vs GPT-4o vs Llama 3.3 70B: Coding Capabilities

- Task 1: Finding the Factorial of a Large Number

- Task 2: Checking if a String is a Palindrome

- Conclusion

- Frequently Asked Questions

Model Architectures and Design

DeepSeek-V3 is an open-source AI model that scores well on large language model benchmarks thanks to its efficient Mixture-of-Experts (MoE) architecture. Llama 3.3 70B stands out for its scalability and adaptability, which makes it a strong contender despite having far fewer parameters than DeepSeek-V3. Meanwhile, GPT-4o stands out with its extensive resources, giving its competitors a run for their money.

Now, let’s begin our comparison by understanding the design and architectures of the three models.

DeepSeek-V3

DeepSeek-V3 is an open-source Mixture-of-Experts (MoE) model with 671 billion parameters, of which 37 billion are activated per token. It uses an auxiliary-loss-free load-balancing strategy and a multi-token prediction training objective, and was pre-trained on 14.8 trillion tokens. The model achieves top-tier performance across multiple benchmarks while keeping training efficient, at a cost of only 2.788 million H800 GPU hours.
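To make the "37 billion of 671 billion parameters per token" idea concrete, here is a toy sketch of top-k expert routing. It is an illustrative simplification and not DeepSeek-V3's actual router: the number of experts, the value of `k`, the gate scores, and the function names are all made-up assumptions.

```python
import math

TOTAL_PARAMS_B = 671   # total parameters in billions (figure from the text)
ACTIVE_PARAMS_B = 37   # parameters activated per token, in billions


def active_fraction(active, total):
    """Share of the model's parameters used for a single token."""
    return active / total


def route_top_k(gate_scores, k):
    """Pick the k highest-scoring experts and softmax-normalize their weights.

    gate_scores are hypothetical router logits, one per expert.
    Returns {expert_index: mixing_weight}.
    """
    top = sorted(range(len(gate_scores)),
                 key=lambda i: gate_scores[i], reverse=True)[:k]
    exps = {i: math.exp(gate_scores[i]) for i in top}
    norm = sum(exps.values())
    return {i: e / norm for i, e in exps.items()}


if __name__ == "__main__":
    share = active_fraction(ACTIVE_PARAMS_B, TOTAL_PARAMS_B)
    print(f"Active share per token: {share:.1%}")
    # Toy example: 8 experts, 2 of them selected for this token.
    print(route_top_k([0.1, 2.0, -1.0, 0.5, 1.5, 0.0, -0.3, 0.9], k=2))
```

Only the selected experts run for a given token, which is why the per-token compute tracks the active parameter count (about 5.5% of the total here) rather than the full model size.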

DeepSeek-V3 incorporates reasoning abilities from DeepSeek-R1 Lite and offers a 128K context window. It is a text model, so it handles natural language, code, and structured data supplied as text, which keeps it versatile across diverse use cases.


GPT-4o

GPT-4o is an advanced language model developed by OpenAI, featuring state-of-the-art architectural improvements. It is trained over a vast dataset of input tokens, making it highly capable across various tasks with impressive accuracy.

The model supports multimodal inputs and has enhanced reasoning abilities, providing versatility for numerous applications. With a context window of 128K tokens, it can generate up to 16,384 tokens per request and processes around 77.4 tokens per second. Released in August 2024, its knowledge extends up to October 2023, making it one of the most powerful and adaptable models on the market.

Llama 3.3 70B

Meta's Llama 3.3 70B is a multilingual large language model (LLM): an open-source, pre-trained and instruction-tuned generative model with 70 billion parameters. It is optimized for efficiency and scalability and was trained on over 15 trillion tokens.

Llama 3.3 70B is an auto-regressive language model that uses an optimized transformer architecture. The model achieves remarkable performance on several benchmarks, keeping training costs minimal with optimized resource allocation.

Llama 3.3 70B supports a 128K-token context window and incorporates advanced reasoning capabilities for nuanced and precise task handling. It is designed to process text-based inputs but can also handle structured data, offering flexibility in various applications.

DeepSeek-V3 vs GPT-4o vs Llama 3.3 70B: Model Evaluation

1. Model Overview

2. Pricing Comparison

3. Benchmark Comparison

| Benchmark | Description | DeepSeek-V3 | GPT-4o | Llama 3.3 70B |
| --- | --- | --- | --- | --- |
| MMLU | Massive Multitask Language Understanding: tests knowledge across 57 subjects, including math, history, and law | 88.5% | 88.7% | 88.5% |
| MMLU-Pro | A more robust version of MMLU with more complex, reasoning-focused questions and reduced prompt sensitivity | 75.9% | 74.68% | 75.9% |
| MMMU | Massive Multi-discipline Multimodal Understanding: tests multimodal understanding and reasoning on problems that combine text and images | Not available | 69.1% | Not available |
| HellaSwag | A challenging sentence-completion benchmark | 88.9% | Not available | Not available |
| HumanEval | Evaluates code generation and problem-solving capabilities | 82.6% | 90.2% | 88.4% |
| MATH | Tests mathematical problem-solving abilities across various difficulty levels | 61.6% | 75.9% | 77% |
| GPQA | Tests PhD-level knowledge in physics, chemistry, and biology that requires domain expertise | 59.1% | 53.6% | 50.5% |
| IFEval | Tests a model’s ability to follow explicit formatting instructions, generate appropriate outputs, and stay consistent with the instructions | 86.1% | Not available | 92.1% |

You can find the results of their individual benchmark tests here:

- GPT-4o: https://github.com/openai/simple-evals?tab=readme-ov-file#benchmark-result

- Llama 3.3 70B: https://build.nvidia.com/meta/llama-3_3-70b-instruct/modelcard

- DeepSeek-V3: https://github.com/deepseek-ai/DeepSeek-V3

Comparison Insights

Speaking of pricing, GPT-4o is roughly 30x more expensive compared to DeepSeek-V3 for input and output tokens. Meanwhile, Llama 3.3 70B Instruct is roughly 1.5x more expensive compared to DeepSeek-V3 for input and output tokens.

DeepSeek-V3 matches Llama 3.3 70B on MMLU (Massive Multitask Language Understanding) and MMLU-Pro, and sits within 0.2 points of GPT-4o on MMLU. It leads in GPQA (Graduate-Level Google-Proof Q&A) with 59.1%, outperforming the other two models. However, it trails both on HumanEval (code generation, 82.6%) and on MATH (mathematical problem-solving, 61.6%).

GPT-4o performs particularly well in HumanEval (90.2%) and MMLU (88.7%), where it posts the highest scores of the three. It comes second in MATH (75.9%) and GPQA (53.6%), where Llama 3.3 70B and DeepSeek-V3 respectively perform better.

Llama 3.3 70B shows its strengths in MATH (77%) and IFEval (Instruction Following Evaluation, 92.1%), where it has the highest reported score. On HumanEval it scores 88.4%, between GPT-4o and DeepSeek-V3, and its GPQA score (50.5%) is the lowest of the three.
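The per-benchmark leaders can be read straight off the benchmark table. A small sketch, using only the scores listed there (entries marked "Not available" are omitted); the dictionary layout and the `leaders` function name are illustrative choices, not part of any benchmark tooling:

```python
# Scores (%) copied from the benchmark table; "Not available" entries are left out.
SCORES = {
    "MMLU":      {"DeepSeek-V3": 88.5, "GPT-4o": 88.7, "Llama 3.3 70B": 88.5},
    "MMLU-Pro":  {"DeepSeek-V3": 75.9, "GPT-4o": 74.68, "Llama 3.3 70B": 75.9},
    "MMMU":      {"GPT-4o": 69.1},
    "HellaSwag": {"DeepSeek-V3": 88.9},
    "HumanEval": {"DeepSeek-V3": 82.6, "GPT-4o": 90.2, "Llama 3.3 70B": 88.4},
    "MATH":      {"DeepSeek-V3": 61.6, "GPT-4o": 75.9, "Llama 3.3 70B": 77.0},
    "GPQA":      {"DeepSeek-V3": 59.1, "GPT-4o": 53.6, "Llama 3.3 70B": 50.5},
    "IFEval":    {"DeepSeek-V3": 86.1, "Llama 3.3 70B": 92.1},
}


def leaders(scores):
    """Return {benchmark: [models tied for the top reported score]}."""
    result = {}
    for benchmark, by_model in scores.items():
        best = max(by_model.values())
        result[benchmark] = sorted(m for m, v in by_model.items() if v == best)
    return result


if __name__ == "__main__":
    for benchmark, models in leaders(SCORES).items():
        print(f"{benchmark}: {', '.join(models)}")
```

Benchmarks where only one model reports a score (MMMU and HellaSwag) list that model trivially, so those rows should not be read as a head-to-head win.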

DeepSeek-V3 vs GPT-4o vs Llama 3.3 70B: Coding Capabilities

Now we will compare the code-writing capabilities of DeepSeek-V3, GPT-4o, and Llama 3.3 70B. We will give the same prompt to all three models and evaluate their responses on the following metrics:

- Efficiency

- Readability

- Comments and Documentation

- Error Handling

Task 1: Finding the Factorial of a Large Number

Prompt: “Hi, you are an excellent code instructor so help me with this question.

Q. Write Python code for finding the Factorial of a Large Number.”

Response by GPT-4o

To compute the factorial of a large number using an array (to manually handle multiplication and carry operations), here’s a Python implementation:

def multiply(arr, x):
    """
    Multiplies an array representation of a number with an integer x.

    :param arr: List of integers representing the digits of the number.
    :param x: Integer to multiply with.
    :return: Updated array with the result.
    """
    carry = 0  # Initialize carry
    for i in range(len(arr)):
        prod = arr[i] * x + carry
        arr[i] = prod % 10  # Store last digit of the product in the array
        carry = prod // 10  # Update the carry
    # Process carry, add digits to the array
    while carry > 0:
        arr.append(carry % 10)
        carry //= 10
    return arr

def factorial_large_number_array(n):
    """
    Calculate the factorial of a large number using array representation.

    :param n: Integer for which the factorial is to be computed.
    :return: Factorial of n as a string.
    """
    if n

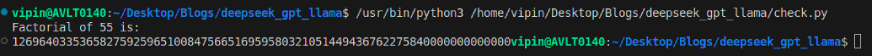

Output of the Generated Code
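The GPT-4o listing above breaks off at `if n`. As a minimal sketch of how the digit-array approach can be finished, the block below validates the input and then multiplies a least-significant-digit-first list step by step. The function name `factorial_digits` and the error messages are assumptions for illustration, not GPT-4o's original output.

```python
import math


def multiply(digits, x):
    """Multiply a digit list (least significant digit first) in place by x."""
    carry = 0
    for i in range(len(digits)):
        prod = digits[i] * x + carry
        digits[i] = prod % 10   # keep the last digit in this position
        carry = prod // 10      # pass the rest on to the next position
    while carry > 0:            # append any remaining carry as new digits
        digits.append(carry % 10)
        carry //= 10
    return digits


def factorial_digits(n):
    """Return n! as a decimal string (hypothetical helper name)."""
    if isinstance(n, bool) or not isinstance(n, int):
        raise TypeError("n must be an integer")
    if n < 0:
        raise ValueError("factorial is not defined for negative numbers")
    digits = [1]
    for factor in range(2, n + 1):
        multiply(digits, factor)
    return "".join(map(str, reversed(digits)))


if __name__ == "__main__":
    print(factorial_digits(25))
    # Cross-check against the standard library.
    print(factorial_digits(25) == str(math.factorial(25)))
```

Python integers already have arbitrary precision, so `math.factorial` is enough in practice; the digit-array version is mainly useful as an exercise in manual carry handling.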

Response by Llama 3.3 70B

Here’s a Python code snippet that calculates the factorial of a large number using an array to store the digits.

def multiply(x, res, res_size):
    """Multiplies x with the number represented by res[]"""
    carry = 0
    for i in range(res_size):
        prod = res[i] * x + carry
        res[i] = prod % 10
        carry = prod // 10
    while carry:
        res.append(carry % 10)
        carry = carry // 10

def factorial(n):
    """Calculates the factorial of a number n"""
    res = [1]
    res_size = 1
    for i in range(2, n + 1):
        multiply(i, res, res_size)
        res_size = len(res)
    return res

def print_factorial(n):
    """Prints the factorial of a number n"""
    res = factorial(n)
    print("Factorial of", n, "is:")
    for digit in reversed(res):
        print(digit, end="")

# Example usage:
n = 55
print_factorial(n)

Output of the Generated Code

Response by DeepSeek-V3

To calculate the factorial of a large number using an array in Python, we can store the result in an array (or list) to handle very large numbers that exceed the limit of standard data types. Here’s how you can implement it:

def factorial(n):
    # Initialize the result as a list with 1
    result = [1]
    # Multiply the result list with numbers from 2 to n
    for x in range(2, n + 1):
        carry = 0
        # Multiply each digit in the result list with x
        for i in range(len(result)):
            product = result[i] * x + carry
            result[i] = product % 10  # Store the last digit
            carry = product // 10  # Carry over the remaining digits
        # Add the remaining carry to the result list
        while carry > 0:
            result.append(carry % 10)
            carry = carry // 10
    # Reverse the result list to get the correct order
    result.reverse()
    return result

# Input: Number to find factorial of
n = int(input("Enter a number: "))

# Compute factorial
factorial_result = factorial(n)

# Convert the list of digits to a string for display
factorial_str = ''.join(map(str, factorial_result))

# Output the result
print(f"Factorial of {n} is: {factorial_str}")

Output of the Generated Code

Comparison Results

GPT-4o’s response is the most complete, balancing efficiency, readability, and robust error handling. It includes detailed comments and clear variable names, and handles invalid input gracefully, making it well-suited for practical use or collaborative development.

Llama 3.3 70B’s response is functional, but it lacks proper error handling and detailed documentation. Its structure is less intuitive, and the use of redundant variables impacts the efficiency. This makes it the weakest of the three.

DeepSeek-V3’s response is efficient and cleanly structured but lacks robust error handling and sufficient documentation. It is a strong contender, but the sparse documentation makes it harder for others to follow or adapt.

Recommendations:

- For practical use: GPT-4o is the best choice due to its robust error handling, clear documentation, and strong readability.

- For educational purposes: GPT-4o would again be the preferred option due to the detailed docstrings and comments in its response, which can help learners understand things logically.

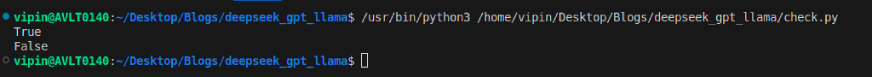

Task 2: Checking if a String is a Palindrome

Prompt: “Hi, you are an excellent code instructor so help me with this question.

Q. Given a string s, return true if the s can be palindrome after deleting at most one character from it.”

Response by GPT-4o

To solve this problem, we can use a two-pointer approach to check if the string can be a palindrome after removing at most one character. Here’s the Python implementation:

def valid_palindrome(s):
    """
    Check if the string can become a palindrome by removing at most one character.

    :param s: The input string.
    :return: True if the string can become a palindrome, otherwise False.
    """
    def is_palindrome_range(left, right):
        # Helper function to check if a substring is a palindrome
        while left

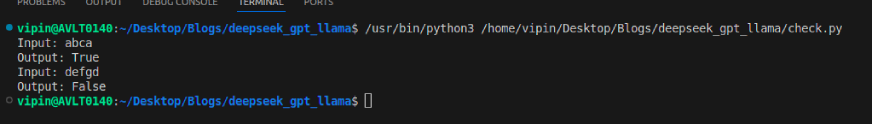

Output of the Generated Code
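The GPT-4o listing above is cut off inside the helper's `while` loop. The sketch below shows the standard two-pointer technique that the response describes: walk inward from both ends and, at the first mismatch, test whether skipping either the left or the right character leaves a palindrome. It is an illustrative reconstruction under that assumption, not the text of any model's answer; the helper name `is_palindrome_range` is borrowed from the truncated listing.

```python
def valid_palindrome(s: str) -> bool:
    """Return True if s is a palindrome after deleting at most one character."""

    def is_palindrome_range(left: int, right: int) -> bool:
        # Check s[left..right] (inclusive) without building a new string.
        while left < right:
            if s[left] != s[right]:
                return False
            left += 1
            right -= 1
        return True

    left, right = 0, len(s) - 1
    while left < right:
        if s[left] != s[right]:
            # One deletion is allowed: skip the left or the right character.
            return (is_palindrome_range(left + 1, right)
                    or is_palindrome_range(left, right - 1))
        left += 1
        right -= 1
    return True


if __name__ == "__main__":
    for text in ("aba", "abca", "abc"):
        print(text, valid_palindrome(text))
```

Each character is visited a bounded number of times, so the check runs in linear time with constant extra space.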

Response by Llama 3.3 70B

Here’s a Python solution that checks if a string can be a palindrome after deleting at most one character.

def valid_palindrome(s: str) -> bool:
    """
    Returns True if the string can be a palindrome after deleting at most one character.

    :param s: The input string.
    :return: True if the string can be a palindrome, False otherwise.
    """
    def is_palindrome(i, j):
        """
        Checks if the substring from index i to j is a palindrome.

        :param i: The start index.
        :param j: The end index.
        :return: True if the substring is a palindrome, False otherwise.
        """
        while i

Output of the Generated Code

Response by DeepSeek-V3

To solve this problem, we need to check if a string can become a palindrome by deleting at most one character. A palindrome reads the same forwards and backward. Here’s how we can approach this:

def validPalindrome(s: str) -> bool:
    def is_palindrome(s, left, right):  # Helper function to check if a substring is a palindrome
        while left

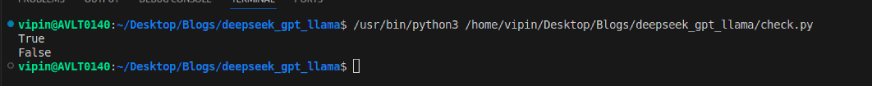

Output of the Generated Code

Comparison Insights

GPT-4o’s response is the most complete and well-documented. It handles the core functionality with clarity, making it easy for future developers to modify or extend the code. Its combination of efficiency and clear documentation makes it ideal for production environments.

Llama 3.3 70B’s response is a functional solution but lacks the clear variable naming and in-depth documentation found in GPT-4o. The lack of comments within the main logic makes it harder to follow, and there is room for improvement in terms of readability. However, it is efficient enough for small tasks where quick implementation is the priority.

DeepSeek-V3’s response strikes a good balance between efficiency and simplicity but falls short in documentation. It’s concise and quick but lacks enough detail for others to follow the code easily. Its approach can be beneficial in scenarios where time and resources are limited, but it would need more thorough explanations and error handling to make the code production-ready.

Recommendations:

- For practical use: GPT-4o’s response is the best due to its thorough documentation, clear structure, and readability.

- For educational purposes: GPT-4o is the most suitable, providing comprehensive insights into each step of the process.

Conclusion

Across the two coding tasks, GPT-4o’s responses outperform those of Llama 3.3 70B and DeepSeek-V3 in efficiency, clarity, error management, and documentation. This makes it the top choice for both practical applications and educational purposes. The Llama 3.3 70B and DeepSeek-V3 responses are functional, but they fall short because they lack robust error handling and clear documentation. Adding proper error management, improving variable naming, and including detailed comments would bring their usability up to GPT-4o’s standard.


Frequently Asked Questions

Q1. Which model delivers the highest code quality for real-world applications?

A. GPT-4o excels in real-world coding due to its efficient error handling, clear documentation, and well-organized code structure, making it the best choice for practical use.

Q2. How do these models compare in terms of code readability and ease of understanding?

A. GPT-4o stands out for its readability, offering clear variable names and thorough comments. In comparison, Llama 3.3 70B and DeepSeek-V3 are functional but lack the same level of clarity and documentation, which can make them harder to follow.

Q3. Which model is most suitable for educational purposes?

A. GPT-4o is the ideal choice for education, providing in-depth documentation and detailed explanations that help learners grasp the underlying logic of the code.

Q4. What steps can be taken to enhance DeepSeek-V3 and Llama 3.3 70B to match GPT-4o’s quality?

A. To elevate their performance, both models should focus on implementing robust error handling, using more descriptive variable names, and adding detailed comments and documentation to improve their readability and overall usability.
