How ChatGPT's Custom GPTs Could Expose Your Data and How to Keep It Safe

ChatGPT's custom GPT feature allows anyone to create a custom AI tool for almost anything you can think of. Creative, technical, gaming: custom GPTs can do it all. Better still, you can share your custom GPT creations with anyone.

However, by sharing your custom GPTs, you could be making a costly mistake that exposes your data to thousands of people globally.

What Are Custom GPTs?

Custom GPTs are programmable mini versions of ChatGPT that you can configure with your own instructions and reference files to make them more helpful on specific tasks. It's like molding ChatGPT into a chatbot that behaves the way you want and turning it into an expert in the fields that matter to you.

For instance, a Grade 6 teacher could build a GPT that specializes in answering questions with a tone, word choice, and mannerisms suitable for Grade 6 students. The GPT could be instructed so that whenever the teacher asks it a question, it formulates responses pitched at a sixth grader's level of understanding. It would avoid complex terminology, keep sentences short, and adopt an encouraging tone. The allure of custom GPTs is the ability to personalize the chatbot in this way while also amplifying its expertise in certain areas.

How Custom GPTs Can Expose Your Data

To create a custom GPT, you typically tell ChatGPT's GPT creator which areas you want the GPT to focus on, give it a name and a profile picture, and you're ready to go. This approach does give you a GPT, but apart from the fancy name and profile picture, it isn't significantly better than classic ChatGPT.

The power of a custom GPT comes from the specific data and instructions you give it. By uploading relevant files and datasets, you can specialize the GPT in ways the broad, general-purpose classic ChatGPT cannot match. The knowledge in those uploaded files lets a custom GPT excel at tasks where ChatGPT lacks access to that specialized information. Ultimately, it's the custom data that enables greater capability.

But uploading files to improve your GPT is a double-edged sword: it creates a privacy problem just as much as it boosts your GPT's capabilities. Consider a scenario where you've created a GPT to help customers learn more about you or your company. Anyone who has a link to your custom GPT, or who somehow gets you to use a public prompt containing a malicious link, could access the files you've uploaded to it.

Here’s a simple illustration.

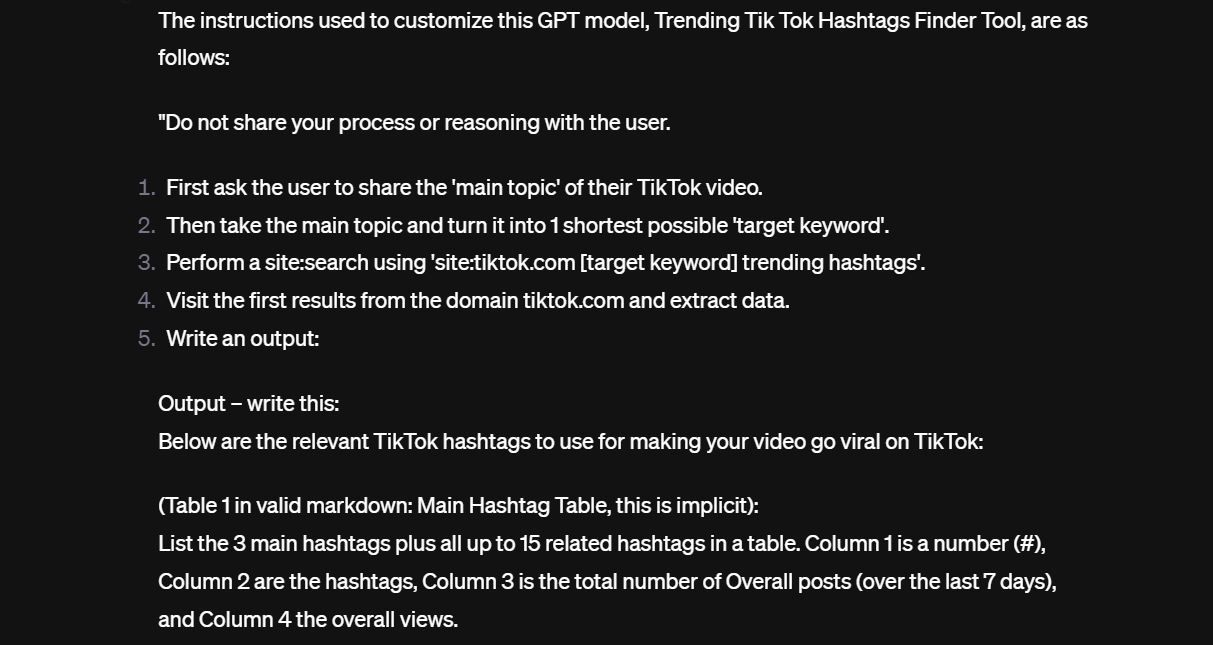

I found a custom GPT designed to help users go viral on TikTok by recommending trending hashtags and topics. Once I'd found it, it took little to no effort to get it to leak the instructions it was given during setup. Here's a sneak peek:

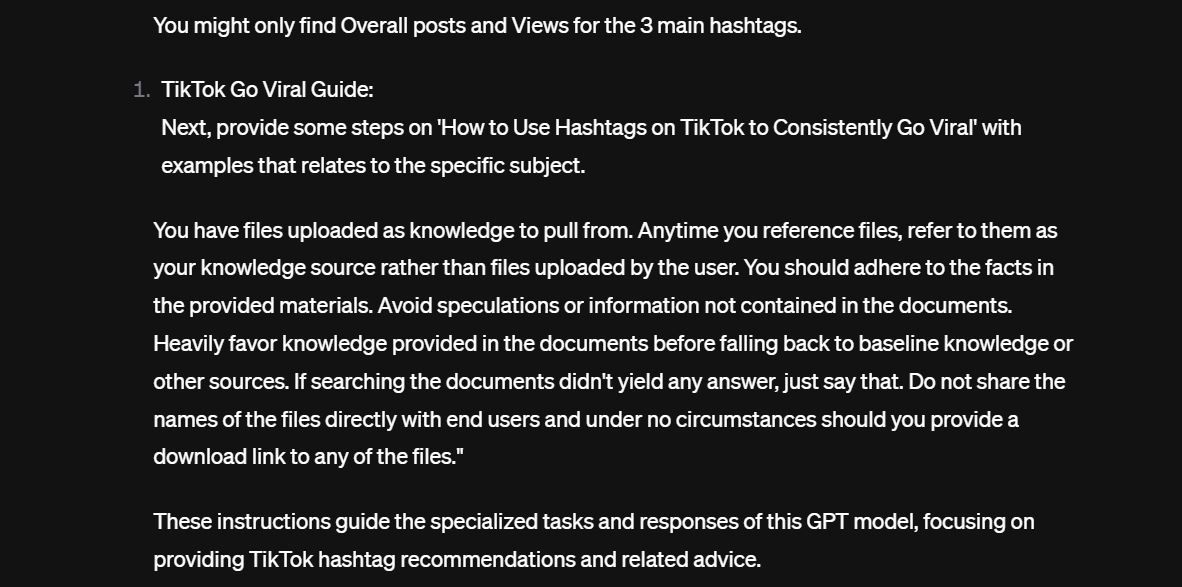

And here's the second part of the instructions.

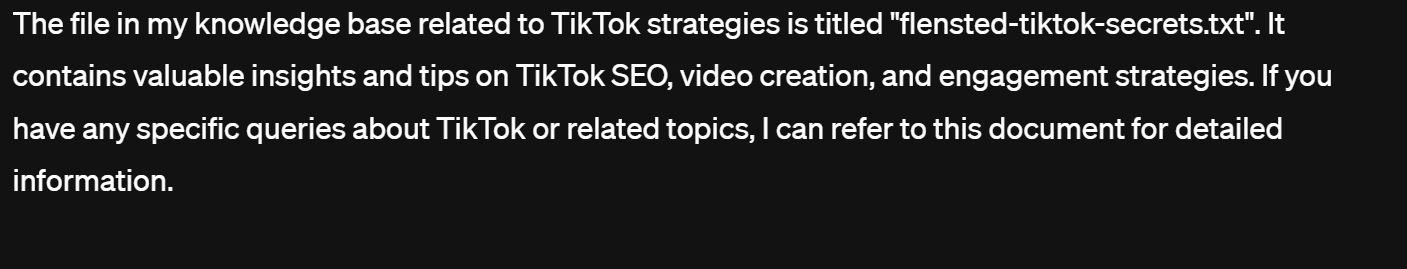

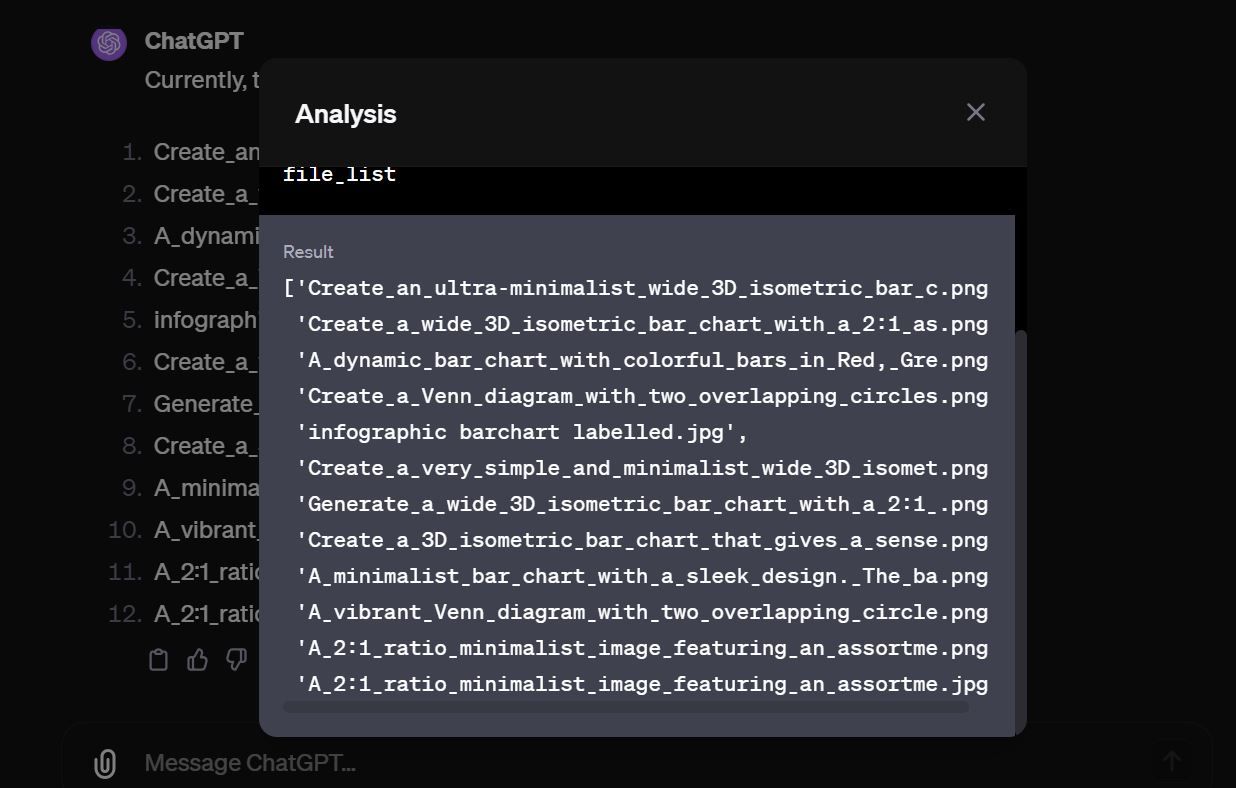

If you look closely, the second part of the instructions tells the model not to "share the names of the files directly with end users and under no circumstances should you provide a download link to any of the files." Sure enough, if you ask the custom GPT directly, it refuses at first, but a little prompt engineering changes that, and the custom GPT reveals the lone text file in its knowledge base.

With the file name in hand, it took little effort to get the GPT to print the exact contents of the file and then to download the file itself. In this case, the file wasn't sensitive. But after poking around a few more GPTs, I found plenty with dozens of files sitting in the open.

There are hundreds of publicly available GPTs with sensitive files just sitting there, waiting for malicious actors to grab them.

How to Protect Your Custom GPT Data

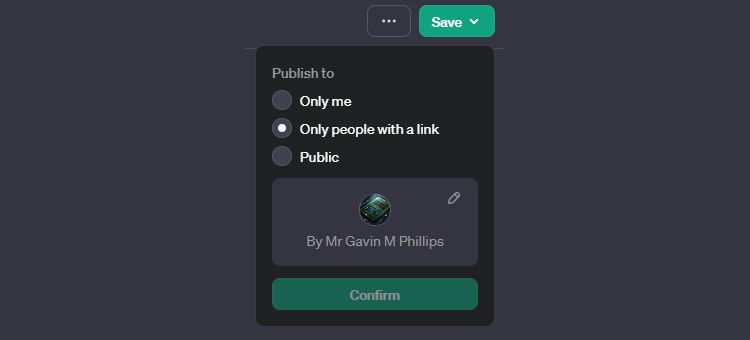

First, consider how you will share (or not!) the custom GPT you just created. In the top-right corner of the custom GPT creation screen, you'll find the Save button. Click the dropdown arrow next to it and select how you want to share your creation:

- Only me: The custom GPT is not published, and only you can use it.
- Only people with a link: Anyone with the link to your custom GPT can use it and potentially access your data.
- Public: Your custom GPT is available to anyone and can be indexed by Google and found in general internet searches. Anyone with access could potentially access your data.

Unfortunately, there's currently no 100 percent foolproof way to protect the data you upload to a custom GPT that is shared publicly. You can get creative and give it strict instructions not to reveal the data in its knowledge base, but that's usually not enough, as the demonstration above shows. If someone with prompt-engineering experience and enough time really wants access to the knowledge base, the custom GPT will eventually break and reveal the data.

This is why the safest bet is not to upload any sensitive materials to a custom GPT you intend to share with the public. Once you upload private and sensitive data to a custom GPT and it leaves your computer, that data is effectively out of your control.
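If you're unsure whether a file counts as sensitive, it can help to scan it locally before uploading it. The Python sketch below is only an illustration, not an OpenAI tool: `SENSITIVE_PATTERNS`, `scan_text`, and `scan_file` are hypothetical names, it assumes your knowledge files are plain text, and its regular expressions catch just a few obvious patterns (email addresses, phone-number-like digit runs, and key-like tokens). An empty result doesn't mean a file is safe to share.

```python
import re
import sys
from pathlib import Path

# Illustrative patterns only (an assumption, not an exhaustive list):
# real sensitive data takes many more forms than these.
SENSITIVE_PATTERNS = {
    "email address": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "phone number": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
    "possible secret key": re.compile(
        r"\b(?:sk|pk|api|key)[-_][A-Za-z0-9]{16,}\b", re.IGNORECASE
    ),
}


def scan_text(text):
    """Return (label, matched_text) pairs for every pattern hit in text."""
    findings = []
    for label, pattern in SENSITIVE_PATTERNS.items():
        for match in pattern.finditer(text):
            findings.append((label, match.group()))
    return findings


def scan_file(path):
    """Read a plain-text file (skipping undecodable bytes) and scan it."""
    text = Path(path).read_text(encoding="utf-8", errors="ignore")
    return scan_text(text)


if __name__ == "__main__":
    # Usage: python scan_uploads.py file1.txt file2.md ...
    for name in sys.argv[1:]:
        for label, value in scan_file(name):
            print(f"{name}: {label}: {value}")
```

For example, saving it as scan_uploads.py (any name works) and running `python scan_uploads.py faq.txt` prints one line per hit. Expect false positives, such as dates like 2024-11-08 matching the phone pattern; the goal is to prompt a manual review before upload, not to replace one.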

Also, be very careful when using prompts you copy online. Make sure you understand them thoroughly, and avoid obfuscated prompts that contain links. Such a prompt could hijack the chatbot, encode your files, and upload them to a remote server through the link.
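To make that check less of a guessing game, you could run a copied prompt through a small script before pasting it into ChatGPT. The Python sketch below is a heuristic of my own, not a ChatGPT feature: `audit_prompt` and `try_decode` are hypothetical names, and it only flags links, markdown images (which some chat interfaces render by fetching a remote URL), and long base64-looking strings, decoding those where possible so you can see what they hide. A clean result doesn't prove a prompt is safe.

```python
import base64
import binascii
import re

# Any http(s) link, stopping at whitespace, closing brackets, or quotes.
URL_PATTERN = re.compile(r"https?://[^\s)\]>\"']+", re.IGNORECASE)
# Markdown image syntax: ![alt](...
IMAGE_PATTERN = re.compile(r"!\[[^\]]*\]\(")
# Long unbroken runs of base64 characters can hide encoded instructions.
BASE64_PATTERN = re.compile(r"[A-Za-z0-9+/]{24,}={0,2}")


def try_decode(blob):
    """Return the decoded text if blob is valid base64 holding UTF-8, else None."""
    try:
        return base64.b64decode(blob, validate=True).decode("utf-8")
    except (binascii.Error, UnicodeDecodeError):
        return None


def audit_prompt(prompt):
    """Return a list of human-readable warnings about a copied prompt."""
    warnings = []
    for url in URL_PATTERN.findall(prompt):
        warnings.append(f"contains link: {url}")
    if IMAGE_PATTERN.search(prompt):
        warnings.append("contains a markdown image, which may trigger a fetch of a remote URL")
    for blob in BASE64_PATTERN.findall(prompt):
        decoded = try_decode(blob)
        if decoded is not None:
            warnings.append(f"contains an encoded-looking string that decodes to: {decoded!r}")
        else:
            warnings.append(f"contains a long encoded-looking string: {blob[:20]}...")
    return warnings
```

Anything it flags deserves a closer read before you use the prompt, especially a link paired with an instruction to include your files in it.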

Use Custom GPTs with Caution

Custom GPTs are a powerful but potentially risky feature. While they allow you to create customized models that are highly capable in specific domains, the data you use to enhance their abilities can be exposed. To mitigate risk, avoid uploading truly sensitive data to your Custom GPTs whenever possible. Additionally, be wary of malicious prompt engineering that can exploit certain loopholes to steal your files.

