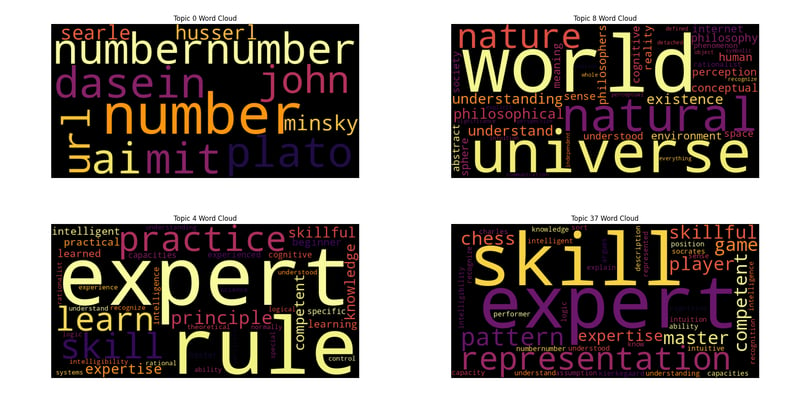

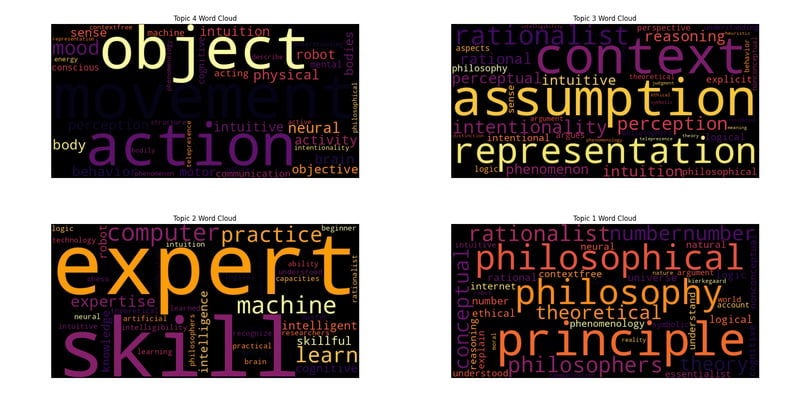

Modelagem de tópicos com Topc: Dreyfus, AI e Wordclouds

Publicado em 30/07/2024

Extraindo insights de PDFs com Python: um guia abrangente

Este script demonstra um fluxo de trabalho poderoso para processamento de PDFs, extração de texto, tokenização de frases e execução de modelagem de tópicos com visualização, adaptado para análises eficientes e criteriosas.

Visão geral das bibliotecas

- os: Fornece funções para interagir com o sistema operacional.

- matplotlib.pyplot: Usado para criar visualizações estáticas, animadas e interativas em Python.

- nltk: Natural Language Toolkit, um conjunto de bibliotecas e programas para processamento de linguagem natural.

- pandas: Biblioteca de manipulação e análise de dados.

- pdftotext: Biblioteca para conversão de documentos PDF em texto simples.

- re: Fornece operações de correspondência de expressões regulares.

- seaborn: Biblioteca de visualização de dados estatísticos baseada em matplotlib.

- nltk.tokenize.sent_tokenize: função NLTK para tokenizar uma string em frases.

- top2vec: Biblioteca para modelagem de tópicos e pesquisa semântica.

- wordcloud: Biblioteca para criar nuvens de palavras a partir de dados de texto.

Configuração inicial

Importar Módulos

import os import matplotlib.pyplot as plt import nltk import pandas as pd import pdftotext import re import seaborn as sns from nltk.tokenize import sent_tokenize from top2vec import Top2Vec from wordcloud import WordCloud from cleantext import clean

Em seguida, certifique-se de que o tokenizer punkt foi baixado:

nltk.download('punkt')

Normalização de texto

def normalize_text(text):

"""Normalize text by removing special characters and extra spaces,

and applying various other cleaning options."""

# Apply the clean function with specified parameters

cleaned_text = clean(

text,

fix_unicode=True, # fix various unicode errors

to_ascii=True, # transliterate to closest ASCII representation

lower=True, # lowercase text

no_line_breaks=False, # fully strip line breaks as opposed to only normalizing them

no_urls=True, # replace all URLs with a special token

no_emails=True, # replace all email addresses with a special token

no_phone_numbers=True, # replace all phone numbers with a special token

no_numbers=True, # replace all numbers with a special token

no_digits=True, # replace all digits with a special token

no_currency_symbols=True, # replace all currency symbols with a special token

no_punct=False, # remove punctuations

lang="en", # set to 'de' for German special handling

)

# Further clean the text by removing any remaining special characters except word characters, whitespace, and periods/commas

cleaned_text = re.sub(r"[^\w\s.,]", "", cleaned_text)

# Replace multiple whitespace characters with a single space and strip leading/trailing spaces

cleaned_text = re.sub(r"\s ", " ", cleaned_text).strip()

return cleaned_text

Extração de texto PDF

def extract_text_from_pdf(pdf_path):

with open(pdf_path, "rb") as f:

pdf = pdftotext.PDF(f)

all_text = "\n\n".join(pdf)

return normalize_text(all_text)

Tokenização de frases

def split_into_sentences(text):

return sent_tokenize(text)

Processando vários arquivos

def process_files(file_paths):

authors, titles, all_sentences = [], [], []

for file_path in file_paths:

file_name = os.path.basename(file_path)

parts = file_name.split(" - ", 2)

if len(parts) != 3 or not file_name.endswith(".pdf"):

print(f"Skipping file with incorrect format: {file_name}")

continue

year, author, title = parts

author, title = author.strip(), title.replace(".pdf", "").strip()

try:

text = extract_text_from_pdf(file_path)

except Exception as e:

print(f"Error extracting text from {file_name}: {e}")

continue

sentences = split_into_sentences(text)

authors.append(author)

titles.append(title)

all_sentences.extend(sentences)

print(f"Number of sentences for {file_name}: {len(sentences)}")

return authors, titles, all_sentences

Salvando dados em CSV

def save_data_to_csv(authors, titles, file_paths, output_file):

texts = []

for fp in file_paths:

try:

text = extract_text_from_pdf(fp)

sentences = split_into_sentences(text)

texts.append(" ".join(sentences))

except Exception as e:

print(f"Error processing file {fp}: {e}")

texts.append("")

data = pd.DataFrame({

"Author": authors,

"Title": titles,

"Text": texts

})

data.to_csv(output_file, index=False, quoting=1, encoding='utf-8')

print(f"Data has been written to {output_file}")

Carregando palavras irrelevantes

def load_stopwords(filepath):

with open(filepath, "r") as f:

stopwords = f.read().splitlines()

additional_stopwords = ["able", "according", "act", "actually", "after", "again", "age", "agree", "al", "all", "already", "also", "am", "among", "an", "and", "another", "any", "appropriate", "are", "argue", "as", "at", "avoid", "based", "basic", "basis", "be", "been", "begin", "best", "book", "both", "build", "but", "by", "call", "can", "cant", "case", "cases", "claim", "claims", "class", "clear", "clearly", "cope", "could", "course", "data", "de", "deal", "dec", "did", "do", "doesnt", "done", "dont", "each", "early", "ed", "either", "end", "etc", "even", "ever", "every", "far", "feel", "few", "field", "find", "first", "follow", "follows", "for", "found", "free", "fri", "fully", "get", "had", "hand", "has", "have", "he", "help", "her", "here", "him", "his", "how", "however", "httpsabout", "ibid", "if", "im", "in", "is", "it", "its", "jstor", "june", "large", "lead", "least", "less", "like", "long", "look", "man", "many", "may", "me", "money", "more", "most", "move", "moves", "my", "neither", "net", "never", "new", "no", "nor", "not", "notes", "notion", "now", "of", "on", "once", "one", "ones", "only", "open", "or", "order", "orgterms", "other", "our", "out", "own", "paper", "past", "place", "plan", "play", "point", "pp", "precisely", "press", "put", "rather", "real", "require", "right", "risk", "role", "said", "same", "says", "search", "second", "see", "seem", "seems", "seen", "sees", "set", "shall", "she", "should", "show", "shows", "since", "so", "step", "strange", "style", "such", "suggests", "talk", "tell", "tells", "term", "terms", "than", "that", "the", "their", "them", "then", "there", "therefore", "these", "they", "this", "those", "three", "thus", "to", "todes", "together", "too", "tradition", "trans", "true", "try", "trying", "turn", "turns", "two", "up", "us", "use", "used", "uses", "using", "very", "view", "vol", "was", "way", "ways", "we", "web", "well", "were", "what", "when", "whether", "which", "who", "why", "with", "within", "works", "would", "years", "york", "you", "your", "suggests", "without"]

stopwords.extend(additional_stopwords)

return set(stopwords)

Filtrando palavras irrelevantes de tópicos

def filter_stopwords_from_topics(topic_words, stopwords):

filtered_topics = []

for words in topic_words:

filtered_topics.append([word for word in words if word.lower() not in stopwords])

return filtered_topics

Geração de nuvem de palavras

def generate_wordcloud(topic_words, topic_num, palette='inferno'):

colors = sns.color_palette(palette, n_colors=256).as_hex()

def color_func(word, font_size, position, orientation, random_state=None, **kwargs):

return colors[random_state.randint(0, len(colors) - 1)]

wordcloud = WordCloud(width=800, height=400, background_color='black', color_func=color_func).generate(' '.join(topic_words))

plt.figure(figsize=(10, 5))

plt.imshow(wordcloud, interpolation='bilinear')

plt.axis('off')

plt.title(f'Topic {topic_num} Word Cloud')

plt.show()

Execução Principal

file_paths = [f"/home/roomal/Desktop/Dreyfus-Project/Dreyfus/{fname}" for fname in os.listdir("/home/roomal/Desktop/Dreyfus-Project/Dreyfus/") if fname.endswith(".pdf")]

authors, titles, all_sentences = process_files(file_paths)

output_file = "/home/roomal/Desktop/Dreyfus-Project/Dreyfus_Papers.csv"

save_data_to_csv(authors, titles, file_paths, output_file)

stopwords_filepath = "/home/roomal/Documents/Lists/stopwords.txt"

stopwords = load_stopwords(stopwords_filepath)

try:

topic_model = Top2Vec(

all_sentences,

embedding_model="distiluse-base-multilingual-cased",

speed="deep-learn",

workers=6

)

print("Top2Vec model created successfully.")

except ValueError as e:

print(f"Error initializing Top2Vec: {e}")

except Exception as e:

print(f"Unexpected error: {e}")

num_topics = topic_model.get_num_topics()

topic_words, word_scores, topic_nums = topic_model.get_topics(num_topics)

filtered_topic_words = filter_stopwords_from_topics(topic_words, stopwords)

for i, words in enumerate(filtered_topic_words):

print(f"Topic {i}: {', '.join(words)}")

keywords = ["heidegger"]

topic_words, word_scores, topic_scores, topic_nums = topic_model.search_topics(keywords=keywords, num_topics=num_topics)

filtered

_search_topic_words = filter_stopwords_from_topics(topic_words, stopwords)

for i, words in enumerate(filtered_search_topic_words):

generate_wordcloud(words, topic_nums[i])

for i in range(reduced_num_topics):

topic_words = topic_model.topic_words_reduced[i]

filtered_words = [word for word in topic_words if word.lower() not in stopwords]

print(f"Reduced Topic {i}: {', '.join(filtered_words)}")

generate_wordcloud(filtered_words, i)

Reduza o número de tópicos

reduced_num_topics = 5

topic_mapping = topic_model.hierarchical_topic_reduction(num_topics=reduced_num_topics)

# Print reduced topics and generate word clouds

for i in range(reduced_num_topics):

topic_words = topic_model.topic_words_reduced[i]

filtered_words = [word for word in topic_words if word.lower() not in stopwords]

print(f"Reduced Topic {i}: {', '.join(filtered_words)}")

generate_wordcloud(filtered_words, i)

Declaração de lançamento

Este artigo foi reproduzido em: https://dev.to/roomals/topic-modeling-with-top2vec-dreyfus-ai-and-wordclouds-1ggl?1 Se houver alguma violação, entre em contato com [email protected] para excluir isto

Tutorial mais recente

Mais>

-

Como posso selecionar programaticamente todo o texto dentro de uma div em mouse clique?selecionando programaticamente o texto div no mouse click question dado um elemento Div com conteúdo de texto, como o usuário pode selecionar ...Programação Postado em 2025-07-13

Como posso selecionar programaticamente todo o texto dentro de uma div em mouse clique?selecionando programaticamente o texto div no mouse click question dado um elemento Div com conteúdo de texto, como o usuário pode selecionar ...Programação Postado em 2025-07-13 -

Por que as expressões lambda exigem variáveis "final" ou "final válida" em Java?expressões lambda requerem "final" ou "efetivamente" variáveis a mensagem de erro "BEATILE Utilizada na expressão lam...Programação Postado em 2025-07-13

Por que as expressões lambda exigem variáveis "final" ou "final válida" em Java?expressões lambda requerem "final" ou "efetivamente" variáveis a mensagem de erro "BEATILE Utilizada na expressão lam...Programação Postado em 2025-07-13 -

Por que não é um pedido de solicitação de captura de entrada no PHP, apesar do código válido?abordando o mau funcionamento da solicitação de postagem em php no snippet de código apresentado: action='' Mantenha -se vigilante com a alo...Programação Postado em 2025-07-13

Por que não é um pedido de solicitação de captura de entrada no PHP, apesar do código válido?abordando o mau funcionamento da solicitação de postagem em php no snippet de código apresentado: action='' Mantenha -se vigilante com a alo...Programação Postado em 2025-07-13 -

Métodos de acesso e gerenciamento de variáveis de ambiente pythonAcessando variáveis de ambiente em python para acessar variáveis de ambiente em python, utilizar o os.envon objeto, que representa um ambien...Programação Postado em 2025-07-13

Métodos de acesso e gerenciamento de variáveis de ambiente pythonAcessando variáveis de ambiente em python para acessar variáveis de ambiente em python, utilizar o os.envon objeto, que representa um ambien...Programação Postado em 2025-07-13 -

Como posso ler com eficiência um arquivo grande em ordem inversa usando o Python?lendo um arquivo em ordem inversa em python se você estiver trabalhando com um arquivo grande e precisar ler seu conteúdo da última linha para...Programação Postado em 2025-07-13

Como posso ler com eficiência um arquivo grande em ordem inversa usando o Python?lendo um arquivo em ordem inversa em python se você estiver trabalhando com um arquivo grande e precisar ler seu conteúdo da última linha para...Programação Postado em 2025-07-13 -

Método de verificação eficaz para cordas Java que não são vazias e não nulaschecando se uma sequência não é nula e não é vazia para determinar se uma sequência não é nula e não é vazia, Java fornece vários métodos. 1.6...Programação Postado em 2025-07-13

Método de verificação eficaz para cordas Java que não são vazias e não nulaschecando se uma sequência não é nula e não é vazia para determinar se uma sequência não é nula e não é vazia, Java fornece vários métodos. 1.6...Programação Postado em 2025-07-13 -

Como modificar efetivamente o atributo CSS do pseudo-elemento ": depois" usando jQuery?Entendendo as limitações dos pseudo-elementos no jQuery: acessar o ": depois" seletor no desenvolvimento da web, pseudo-elementos co...Programação Postado em 2025-07-13

Como modificar efetivamente o atributo CSS do pseudo-elemento ": depois" usando jQuery?Entendendo as limitações dos pseudo-elementos no jQuery: acessar o ": depois" seletor no desenvolvimento da web, pseudo-elementos co...Programação Postado em 2025-07-13 -

O CSS pode localizar elementos HTML com base em qualquer valor de atributo?direcionando elementos html com qualquer valor de atributo no css em css, é possível alvo elementos baseados em atributos específicos, conform...Programação Postado em 2025-07-13

O CSS pode localizar elementos HTML com base em qualquer valor de atributo?direcionando elementos html com qualquer valor de atributo no css em css, é possível alvo elementos baseados em atributos específicos, conform...Programação Postado em 2025-07-13 -

Por que não `corpo {margem: 0; } `Sempre remova a margem superior no CSS?abordando a remoção da margem corporal em css para desenvolvedores da web iniciantes, remover a margem do elemento corporal pode ser uma taref...Programação Postado em 2025-07-13

Por que não `corpo {margem: 0; } `Sempre remova a margem superior no CSS?abordando a remoção da margem corporal em css para desenvolvedores da web iniciantes, remover a margem do elemento corporal pode ser uma taref...Programação Postado em 2025-07-13 -

Por que as imagens ainda têm fronteiras no Chrome? `Border: Nenhum;` Solução inválidaremovendo a borda da imagem em Chrome Uma questão frequente encontrada ao trabalhar com imagens em Chrome e IE9 é a aparência de uma borda fin...Programação Postado em 2025-07-13

Por que as imagens ainda têm fronteiras no Chrome? `Border: Nenhum;` Solução inválidaremovendo a borda da imagem em Chrome Uma questão frequente encontrada ao trabalhar com imagens em Chrome e IE9 é a aparência de uma borda fin...Programação Postado em 2025-07-13 -

\ "while (1) vs. para (;;): a otimização do compilador elimina as diferenças de desempenho? \"while (1) vs. for (;;): existe uma diferença de velocidade? loops? Resposta: Na maioria dos compiladores modernos, não há diferença de dese...Programação Postado em 2025-07-13

\ "while (1) vs. para (;;): a otimização do compilador elimina as diferenças de desempenho? \"while (1) vs. for (;;): existe uma diferença de velocidade? loops? Resposta: Na maioria dos compiladores modernos, não há diferença de dese...Programação Postado em 2025-07-13 -

Resolva a exceção \\ "String Value \\" quando o MySQL insere emojiResolvando a exceção do valor da string incorreta ao inserir emoji ao tentar inserir uma string contendo caracteres emoji em um banco de dados M...Programação Postado em 2025-07-13

Resolva a exceção \\ "String Value \\" quando o MySQL insere emojiResolvando a exceção do valor da string incorreta ao inserir emoji ao tentar inserir uma string contendo caracteres emoji em um banco de dados M...Programação Postado em 2025-07-13 -

Implementação dinâmica reflexiva da interface GO para exploração de método RPCreflexão para a implementação da interface dinâmica em go A reflexão em Go é uma ferramenta poderosa que permite a inspeção e manipulação do c...Programação Postado em 2025-07-13

Implementação dinâmica reflexiva da interface GO para exploração de método RPCreflexão para a implementação da interface dinâmica em go A reflexão em Go é uma ferramenta poderosa que permite a inspeção e manipulação do c...Programação Postado em 2025-07-13 -

Por que o Microsoft Visual C ++ falha ao implementar corretamente a instanciação do modelo bifásico?O mistério do modelo de duas fases "quebrado" bifásia instanciação no Microsoft Visual C Declaração de Problema: STRAGLES Expressa...Programação Postado em 2025-07-13

Por que o Microsoft Visual C ++ falha ao implementar corretamente a instanciação do modelo bifásico?O mistério do modelo de duas fases "quebrado" bifásia instanciação no Microsoft Visual C Declaração de Problema: STRAGLES Expressa...Programação Postado em 2025-07-13 -

Como criar uma animação CSS esquerda-direita suave para uma div em seu contêiner?Animação CSS genérica para o movimento esquerdo-direita Neste artigo, exploraremos a criação de uma animação CSS genérica para mover uma divis...Programação Postado em 2025-07-13

Como criar uma animação CSS esquerda-direita suave para uma div em seu contêiner?Animação CSS genérica para o movimento esquerdo-direita Neste artigo, exploraremos a criação de uma animação CSS genérica para mover uma divis...Programação Postado em 2025-07-13

Estude chinês

- 1 Como se diz “andar” em chinês? 走路 Pronúncia chinesa, 走路 aprendizagem chinesa

- 2 Como se diz “pegar um avião” em chinês? 坐飞机 Pronúncia chinesa, 坐飞机 aprendizagem chinesa

- 3 Como se diz “pegar um trem” em chinês? 坐火车 Pronúncia chinesa, 坐火车 aprendizagem chinesa

- 4 Como se diz “pegar um ônibus” em chinês? 坐车 Pronúncia chinesa, 坐车 aprendizagem chinesa

- 5 Como se diz dirigir em chinês? 开车 Pronúncia chinesa, 开车 aprendizagem chinesa

- 6 Como se diz nadar em chinês? 游泳 Pronúncia chinesa, 游泳 aprendizagem chinesa

- 7 Como se diz andar de bicicleta em chinês? 骑自行车 Pronúncia chinesa, 骑自行车 aprendizagem chinesa

- 8 Como você diz olá em chinês? 你好Pronúncia chinesa, 你好Aprendizagem chinesa

- 9 Como você agradece em chinês? 谢谢Pronúncia chinesa, 谢谢Aprendizagem chinesa

- 10 How to say goodbye in Chinese? 再见Chinese pronunciation, 再见Chinese learning

Decodificação de imagem base64

pinyin chinês

Codificação Unicode

Compressão de criptografia de ofuscação JS

Ferramenta de criptografia hexadecimal de URL

Ferramenta de conversão de codificação UTF-8

Ferramentas online de codificação e decodificação Ascii

Ferramenta de criptografia MD5

Ferramenta on-line de criptografia e descriptografia de texto Hash/Hash

Criptografia SHA on-line