Using a JIT Compiler to Make My Python Loops Slower?

If you haven't heard, Python loops can be slow--especially when working with large datasets. If you're trying to make calculations across millions of data points, execution time can quickly become a bottleneck. Luckily for us, Numba has a Just-in-Time (JIT) compiler that we can use to help speed up our numerical computations and loops in Python.

The other day, I found myself in need of a simple exponential smoothing function in Python. This function needed to take in an array and return an array of the same length with the smoothed values. Typically, I try to avoid loops where possible in Python (especially when dealing with Pandas DataFrames). At my current level of capability, I didn't see how to avoid using a loop to exponentially smooth an array of values.

I am going to walk through the process of creating this exponential smoothing function and testing it with and without JIT compilation. I'll briefly touch on JIT and how I made sure to code the loop in a manner that works with nopython mode.

What is JIT?

JIT compilers are particularly useful with higher-level languages like Python, JavaScript, and Java. These languages are known for their flexibility and ease of use, but they can suffer from slower execution speeds compared to lower-level languages like C or C++. JIT compilation helps bridge this gap by optimizing the execution of code at runtime, making it faster without sacrificing the advantages of these higher-level languages.

When using the nopython=True mode in the Numba JIT compiler, the Python interpreter is bypassed entirely, forcing Numba to compile everything down to machine code. This results in even faster execution by eliminating the overhead associated with Python's dynamic typing and other interpreter-related operations.

Building the fast exponential smoothing function

Exponential smoothing is a technique used to smooth out data by applying a weighted average over past observations. The formula for exponential smoothing is:

$$S_t = \alpha V_t + (1 - \alpha) S_{t-1}$$

where:

- St : Represents the smoothed value at time t .

- Vt : Represents the original value at time t from the values array.

- α : The smoothing factor, which determines the weight of the current value Vt in the smoothing process.

- St−1 : Represents the smoothed value at time t−1 , i.e., the previous smoothed value.

The formula applies exponential smoothing, where:

- The new smoothed value St is a weighted average of the current value Vt and the previous smoothed value St−1 .

- The factor α determines how much influence the current value Vt has on the smoothed value compared to the previous smoothed value St−1 .
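To make the recurrence concrete, here is a small worked example in plain Python. The input `[10, 20, 30]` and `alpha = 0.5` are made-up values chosen so the arithmetic is easy to follow by hand, and `exponential_smoothing` is just an illustrative name.

```python
def exponential_smoothing(values, alpha):
    """Apply S_t = alpha * V_t + (1 - alpha) * S_{t-1}, with S_0 = V_0."""
    smoothed = [float(values[0])]  # the first smoothed value is the first observation
    for v in values[1:]:
        # weighted average of the current value and the previous smoothed value
        smoothed.append(alpha * v + (1 - alpha) * smoothed[-1])
    return smoothed


# Illustrative input, not data from this article
result = exponential_smoothing([10, 20, 30], alpha=0.5)
print(result)  # [10.0, 15.0, 22.5]
```

With `alpha = 0.5`, each smoothed value sits halfway between the new observation and the previous smoothed value: 15 is halfway between 10 and 20, and 22.5 is halfway between 15 and 30.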

To implement this in Python, and stick to functionality that works with nopython=True mode, we will pass in an array of data values and the alpha float. I default the alpha to 0.33333333 because that fits my current use case. We will initialize an empty array to store the smoothed values in, loop and calculate, and return smoothed values. This is what it looks like:

    import numpy as np
    from numba import jit

    @jit(nopython=True)
    def fast_exponential_smoothing(values, alpha=0.33333333):
        # Float array the same length as values (so integer input is not truncated)
        smoothed_values = np.zeros(len(values), dtype=np.float64)
        smoothed_values[0] = values[0]  # Initialize the first value
        for i in range(1, len(values)):
            smoothed_values[i] = alpha * values[i] + (1 - alpha) * smoothed_values[i - 1]
        return smoothed_values

Simple, right? Let's see if JIT is doing anything now. First, we need to create a large array of integers. Then, we call the function, time how long it took to compute, and print the results.

    import time

    # Generate a large random array of a million integers
    large_array = np.random.randint(1, 100, size=1_000_000)

    # Test the speed of fast_exponential_smoothing
    start_time = time.time()
    smoothed_result = fast_exponential_smoothing(large_array)
    end_time = time.time()
    print(f"Exponential Smoothing with JIT took {end_time - start_time:.6f} seconds with 1,000,000 sample array.")

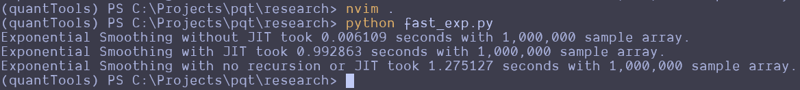

This can be repeated and altered just a bit to test the function without the JIT decorator. Here are the results that I got:

Wait, what the f***?

I thought JIT was supposed to speed it up. It looks like the standard Python function beat the JIT version and a version that attempts to use no recursion. That's strange. I guess you can't just slap the JIT decorator on something and make it go faster? Perhaps simple array loops and NumPy operations are already pretty efficient? Perhaps I don't understand the use case for JIT as well as I should? Maybe we should try this on a more complex loop?
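One thing worth ruling out before drawing conclusions: Numba compiles a function the first time it is called with a given set of argument types, so a single timed call measures compilation plus execution. The sketch below is an assumption about what a fairer comparison might look like, not part of the original test. It times the same call twice so the two costs can be seen separately. `smooth_jit` and `time_call` are hypothetical names, and the `try/except` fallback is only there so the snippet still runs where Numba isn't installed.

```python
import time

import numpy as np

try:
    from numba import njit  # shorthand for jit(nopython=True)
except ImportError:  # fallback so the sketch still runs without Numba
    def njit(func):
        return func


@njit
def smooth_jit(values, alpha):
    smoothed = np.empty(len(values), dtype=np.float64)
    smoothed[0] = values[0]
    for i in range(1, len(values)):
        smoothed[i] = alpha * values[i] + (1 - alpha) * smoothed[i - 1]
    return smoothed


def time_call(func, *args):
    """Return (result, elapsed seconds) for a single call."""
    start = time.perf_counter()
    result = func(*args)
    return result, time.perf_counter() - start


data = np.random.randint(1, 100, size=1_000_000)

# First call: with Numba installed, this includes compilation time.
_, first = time_call(smooth_jit, data, 0.5)
# Second call with the same argument types: reuses the compiled code.
smoothed, second = time_call(smooth_jit, data, 0.5)

print(f"first call:  {first:.6f} s")
print(f"second call: {second:.6f} s")
```

If the second call turns out much faster than the first, the gap is compilation overhead rather than the cost of the loop itself.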

Here is the entire Python file I created for testing:

    import time

    import numpy as np
    from numba import jit

    @jit(nopython=True)
    def fast_exponential_smoothing(values, alpha=0.33333333):
        # Float array the same length as values (so integer input is not truncated)
        smoothed_values = np.zeros(len(values), dtype=np.float64)
        smoothed_values[0] = values[0]  # Initialize the first value
        for i in range(1, len(values)):
            smoothed_values[i] = alpha * values[i] + (1 - alpha) * smoothed_values[i - 1]
        return smoothed_values

    def fast_exponential_smoothing_nojit(values, alpha=0.33333333):
        # Same loop as above, without the JIT decorator
        smoothed_values = np.zeros(len(values), dtype=np.float64)
        smoothed_values[0] = values[0]  # Initialize the first value
        for i in range(1, len(values)):
            smoothed_values[i] = alpha * values[i] + (1 - alpha) * smoothed_values[i - 1]
        return smoothed_values

    def non_recursive_exponential_smoothing(values, alpha=0.33333333):
        # NOTE: an attempt at a vectorized version. As written, the flipped weights
        # do not line up with the recursion, so the output differs from the loop
        # versions above. It is kept here for the timing comparison only.
        n = len(values)
        smoothed_values = np.zeros(n)
        # Initialize the first value
        smoothed_values[0] = values[0]
        # Calculate the rest of the smoothed values
        decay_factors = (1 - alpha) ** np.arange(1, n)
        cumulative_weights = alpha * decay_factors
        smoothed_values[1:] = np.cumsum(values[1:] * np.flip(cumulative_weights)) + (1 - alpha) ** np.arange(1, n) * values[0]
        return smoothed_values

    # Generate a large random array of integers
    large_array = np.random.randint(1, 1000, size=10_000_000)

    # Test the speed of fast_exponential_smoothing_nojit
    start_time = time.time()
    smoothed_result = fast_exponential_smoothing_nojit(large_array)
    end_time = time.time()
    print(f"Exponential Smoothing without JIT took {end_time - start_time:.6f} seconds with {len(large_array):,} sample array.")

    # Test the speed of fast_exponential_smoothing
    start_time = time.time()
    smoothed_result = fast_exponential_smoothing(large_array)
    end_time = time.time()
    print(f"Exponential Smoothing with JIT took {end_time - start_time:.6f} seconds with {len(large_array):,} sample array.")

    # Test the speed of non_recursive_exponential_smoothing
    start_time = time.time()
    smoothed_result = non_recursive_exponential_smoothing(large_array)
    end_time = time.time()
    print(f"Exponential Smoothing with no recursion or JIT took {end_time - start_time:.6f} seconds with {len(large_array):,} sample array.")

I attempted to create the non-recursive version to see if vectorized operations across arrays would make it go faster, but it seems to be pretty damn fast as it is. These results remained the same all the way up until I didn't have enough memory to make the array of random integers.
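For reference, unrolling the recursion gives a closed form, S_t = (1 - α)^t · V_0 + α · Σ_{k=1..t} (1 - α)^(t-k) · V_k, which can be vectorized with a cumulative sum. The sketch below is one way to write that, not the function from the test file; `loop_smoothing` and `closed_form_smoothing` are hypothetical names. It divides by powers of (1 - α), which underflow for long inputs, so it is only meant for short arrays where it can be checked against the loop.

```python
import numpy as np


def loop_smoothing(values, alpha):
    """Reference: the plain recursive loop."""
    smoothed = np.empty(len(values), dtype=np.float64)
    smoothed[0] = values[0]
    for i in range(1, len(values)):
        smoothed[i] = alpha * values[i] + (1 - alpha) * smoothed[i - 1]
    return smoothed


def closed_form_smoothing(values, alpha):
    """Vectorized closed form; only numerically safe for short arrays."""
    values = np.asarray(values, dtype=np.float64)
    powers = (1 - alpha) ** np.arange(len(values))  # (1 - alpha)^t
    scaled = values / powers                         # V_k / (1 - alpha)^k
    scaled[1:] *= alpha                              # V_0 keeps weight 1
    return powers * np.cumsum(scaled)


# Illustrative data, small enough that the powers do not underflow
sample = np.random.randint(1, 100, size=50)
matches = np.allclose(loop_smoothing(sample, 1 / 3),
                      closed_form_smoothing(sample, 1 / 3))
print(matches)
```

The numerical limit is the reason this is a sketch rather than a drop-in replacement: at a million elements the powers of (1 - α) are far too small to represent, which is part of why the simple loop is hard to avoid for this calculation.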

Let me know what you think about this in the comments. I am by no means a professional developer, so I am accepting all comments, criticisms, or educational opportunities.

Until next time.

Happy coding!
