Using JIT compilers to make my Python loops slower?

If you haven't heard, Python loops can be slow, especially when working with large datasets. If you're trying to make calculations across millions of data points, execution time can quickly become a bottleneck. Luckily for us, Numba has a Just-in-Time (JIT) compiler that we can use to help speed up our numerical computations and loops in Python.

The other day, I found myself in need of a simple exponential smoothing function in Python. This function needed to take in an array and return an array of the same length with the smoothed values. Typically, I try to avoid loops where possible in Python (especially when dealing with Pandas DataFrames). At my current level of capability, I didn't see how to avoid using a loop to exponentially smooth an array of values.

I am going to walk through the process of creating this exponential smoothing function and testing it with and without JIT compilation. I'll briefly touch on JIT and how I made sure to write the loop in a way that works in nopython mode.

What is JIT?

A just-in-time (JIT) compiler translates code into machine code while the program is running, instead of ahead of time. JIT compilers are particularly useful with higher-level languages like Python, JavaScript, and Java. These languages are known for their flexibility and ease of use, but they can suffer from slower execution speeds compared to lower-level languages like C or C++. JIT compilation helps bridge this gap by optimizing code at runtime, making it faster without sacrificing the advantages of these higher-level languages.

When using nopython=True mode in Numba's JIT compiler, the Python interpreter is bypassed entirely: Numba has to compile the whole function down to machine code, and it raises an error if it can't. This usually results in much faster execution, because it eliminates the overhead associated with Python's dynamic typing and other interpreter-related operations.

Building the fast exponential smoothing function

Exponential smoothing is a technique used to smooth out data by applying a weighted average over past observations. The formula for exponential smoothing is:

$$S_t = \alpha V_t + (1 - \alpha) S_{t-1}$$

where:

- $S_t$: Represents the smoothed value at time $t$.

- $V_t$: Represents the original value at time $t$ from the values array.

- $\alpha$: The smoothing factor, which determines the weight of the current value $V_t$ in the smoothing process.

- $S_{t-1}$: Represents the smoothed value at time $t-1$, i.e., the previous smoothed value.

In other words:

- The new smoothed value $S_t$ is a weighted average of the current value $V_t$ and the previous smoothed value $S_{t-1}$.

- The factor $\alpha$ determines how much influence the current value $V_t$ has on the smoothed value compared to the previous smoothed value $S_{t-1}$: a larger $\alpha$ tracks new values more closely, while a smaller $\alpha$ smooths more heavily.
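To make the recurrence concrete, here is a minimal pure-Python sketch that applies it to a short list. It assumes $S_0 = V_0$ (as the implementation below does) and uses $\alpha = 1/3$ for the example; `exponential_smoothing` is a hypothetical helper name, not part of any library. By hand: $S_1 = \tfrac{1}{3}\cdot 6 + \tfrac{2}{3}\cdot 3 = 4$ and $S_2 = \tfrac{1}{3}\cdot 9 + \tfrac{2}{3}\cdot 4 = 17/3 \approx 5.67$.

```python
def exponential_smoothing(values, alpha=1 / 3):
    """Hypothetical helper: S_t = alpha * V_t + (1 - alpha) * S_{t-1}, with S_0 = V_0.

    Assumes `values` is a non-empty sequence of numbers.
    """
    smoothed = [float(values[0])]  # S_0 = V_0
    for v in values[1:]:
        # Each step blends the new value with the previous smoothed value
        smoothed.append(alpha * v + (1 - alpha) * smoothed[-1])
    return smoothed


print(exponential_smoothing([3, 6, 9]))  # approximately [3.0, 4.0, 5.667]
```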

To implement this in Python while sticking to functionality that works in nopython=True mode, we will pass in an array of data values and the alpha float. I default alpha to 0.33333333 because that fits my current use case. We will initialize an array of zeros to store the smoothed values in, loop and calculate, and return the smoothed values. This is what it looks like:

```python
import numpy as np
from numba import jit


@jit(nopython=True)
def fast_exponential_smoothing(values, alpha=0.33333333):
    # float64 array the same length as values, so the smoothed values
    # aren't truncated when `values` holds integers
    smoothed_values = np.zeros(len(values))
    smoothed_values[0] = values[0]  # Initialize the first value
    for i in range(1, len(values)):
        # S_t = alpha * V_t + (1 - alpha) * S_{t-1}
        smoothed_values[i] = alpha * values[i] + (1 - alpha) * smoothed_values[i - 1]
    return smoothed_values
```
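Because the test arrays come from `np.random.randint` and hold integers, the dtype of the output array matters: `np.zeros_like(values)` copies the integer dtype of `values`, and assigning a float into an integer NumPy array silently truncates it. A small NumPy sketch of the difference (the three-element array and the 0.5 weights are just example values):

```python
import numpy as np

values = np.array([3, 6, 9])       # integer input, like np.random.randint produces
int_out = np.zeros_like(values)    # inherits the integer dtype of `values`
float_out = np.zeros(len(values))  # float64 by default

step = 0.5 * values[1] + 0.5 * values[0]  # 4.5
int_out[1] = step    # truncated to 4 on assignment
float_out[1] = step  # stays 4.5

print(int_out.dtype.kind, int_out[1], float_out[1])  # i 4 4.5
```

Creating the output with `np.zeros(len(values))`, which defaults to float64, avoids the truncation.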

Simple, right? Let's see if JIT is doing anything now. First, we need to create a large array of integers. Then, we call the function, time how long it took to compute, and print the results.

```python
import time

import numpy as np

# Generate a large random array of a million integers
large_array = np.random.randint(1, 100, size=1_000_000)

# Test the speed of fast_exponential_smoothing
start_time = time.perf_counter()
smoothed_result = fast_exponential_smoothing(large_array)
end_time = time.perf_counter()

print(f"Exponential Smoothing with JIT took {end_time - start_time:.6f} seconds with 1,000,000 sample array.")
```

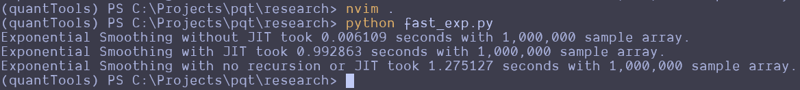

This can be repeated and altered just a bit to test the function without the JIT decorator. Here are the results that I got:

Wait, what the f***?

I thought JIT was supposed to speed it up. It looks like the standard Python function beat the JIT version and a version that attempts to use no recursion. That's strange. I guess you can't just slap the JIT decorator on something and make it go faster? Perhaps simple array loops and NumPy operations are already pretty efficient? Perhaps I don't understand the use case for JIT as well as I should? Maybe we should try this on a more complex loop?
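One thing that may explain the result: when `@jit` is used without an explicit signature, Numba compiles the function the first time it is called with a given argument type, and the timings in this post wrap exactly that first call. So the "with JIT" number most likely includes compilation time, not just the loop. A minimal sketch of a timing helper that makes untimed warm-up calls first and reports the best of several timed runs; `best_of` is a hypothetical name, not part of Numba or the standard library.

```python
import time


def best_of(fn, *args, warmup=1, repeats=5):
    """Hypothetical helper: fastest wall-clock time, in seconds, of fn(*args).

    The first `warmup` calls are made but not timed, so a JIT-compiled
    function is already compiled for these argument types when timing starts.
    `repeats` must be at least 1.
    """
    for _ in range(warmup):
        fn(*args)  # untimed warm-up call(s)
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn(*args)
        timings.append(time.perf_counter() - start)
    return min(timings)
```

With the functions from the full test file, this could be called as `best_of(fast_exponential_smoothing, large_array)` for the JIT version and `best_of(fast_exponential_smoothing_nojit, large_array, warmup=0, repeats=1)` for the plain-Python loop, which needs no warm-up and is slow enough that one run is plenty. Numba's `cache=True` option for `@jit` can also store the compiled code on disk so later runs of the script skip recompilation.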

Here is the entire Python file I created for testing:

```python
import time

import numpy as np
from numba import jit


@jit(nopython=True)
def fast_exponential_smoothing(values, alpha=0.33333333):
    smoothed_values = np.zeros(len(values))  # float64 array the same length as values
    smoothed_values[0] = values[0]  # Initialize the first value
    for i in range(1, len(values)):
        smoothed_values[i] = alpha * values[i] + (1 - alpha) * smoothed_values[i - 1]
    return smoothed_values


def fast_exponential_smoothing_nojit(values, alpha=0.33333333):
    # Same loop as above, without the @jit decorator
    smoothed_values = np.zeros(len(values))  # float64 array the same length as values
    smoothed_values[0] = values[0]  # Initialize the first value
    for i in range(1, len(values)):
        smoothed_values[i] = alpha * values[i] + (1 - alpha) * smoothed_values[i - 1]
    return smoothed_values


def non_recursive_exponential_smoothing(values, alpha=0.33333333):
    # Caution: these weights don't reproduce the recursive formula
    # S_t = alpha * V_t + (1 - alpha) * S_{t-1}; check the output against
    # the loop versions before comparing timings.
    n = len(values)
    smoothed_values = np.zeros(n)
    # Initialize the first value
    smoothed_values[0] = values[0]
    # Calculate the rest of the smoothed values
    decay_factors = (1 - alpha) ** np.arange(1, n)
    cumulative_weights = alpha * decay_factors
    smoothed_values[1:] = (
        np.cumsum(values[1:] * np.flip(cumulative_weights))
        + (1 - alpha) ** np.arange(1, n) * values[0]
    )
    return smoothed_values


# Generate a large random array of ten million integers
large_array = np.random.randint(1, 1000, size=10_000_000)

# Test the speed of fast_exponential_smoothing_nojit
start_time = time.perf_counter()
smoothed_result = fast_exponential_smoothing_nojit(large_array)
end_time = time.perf_counter()
print(f"Exponential Smoothing without JIT took {end_time - start_time:.6f} seconds with {len(large_array):,} sample array.")

# Test the speed of fast_exponential_smoothing
start_time = time.perf_counter()
smoothed_result = fast_exponential_smoothing(large_array)
end_time = time.perf_counter()
print(f"Exponential Smoothing with JIT took {end_time - start_time:.6f} seconds with {len(large_array):,} sample array.")

# Test the speed of non_recursive_exponential_smoothing
start_time = time.perf_counter()
smoothed_result = non_recursive_exponential_smoothing(large_array)
end_time = time.perf_counter()
print(f"Exponential Smoothing with no recursion or JIT took {end_time - start_time:.6f} seconds with {len(large_array):,} sample array.")
```

I attempted to create the non-recursive version to see if vectorized operations across arrays would make it go faster, but it seems to be pretty damn fast as it is. These results stayed the same all the way up until I ran out of memory for the array of random integers.
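Before comparing timings, it seems worth checking that a vectorized version returns the same numbers as the loop. Expanding the recurrence gives $S_t = (1-\alpha)^t V_0 + \alpha \sum_{k=1}^{t} (1-\alpha)^{t-k} V_k$. Working through a three-element array by hand, the weights in `non_recursive_exponential_smoothing` don't appear to match this: its $S_1$ comes out as $\alpha(1-\alpha)^{n-1} V_1 + (1-\alpha) V_0$ instead of $\alpha V_1 + (1-\alpha) V_0$. Below is a sketch of a closed form that does follow the expansion, checked against the loop with `np.allclose`; `loop_smoothing` and `closed_form_smoothing` are hypothetical names. It divides by $(1-\alpha)^k$, which overflows once arrays reach roughly a couple of thousand elements at $\alpha \approx 1/3$, so treat it as a correctness check for short inputs, not as a faster replacement for the loop.

```python
import numpy as np


def loop_smoothing(values, alpha=0.33333333):
    """Reference: S_t = alpha * V_t + (1 - alpha) * S_{t-1}, computed step by step."""
    smoothed = np.zeros(len(values))  # float64 output
    smoothed[0] = values[0]
    for i in range(1, len(values)):
        smoothed[i] = alpha * values[i] + (1 - alpha) * smoothed[i - 1]
    return smoothed


def closed_form_smoothing(values, alpha=0.33333333):
    """Vectorized S_t = d**t * (V_0 + alpha * sum_{k=1..t} V_k / d**k), with d = 1 - alpha.

    Dividing by d**k overflows for long inputs, so this is only meant for short arrays.
    """
    values = np.asarray(values, dtype=np.float64)
    d = 1 - alpha
    powers = d ** np.arange(len(values))  # d**0, d**1, ..., d**(n-1)
    smoothed = np.empty(len(values))
    smoothed[0] = values[0]
    smoothed[1:] = powers[1:] * (values[0] + alpha * np.cumsum(values[1:] / powers[1:]))
    return smoothed


rng = np.random.default_rng(0)
sample = rng.integers(1, 1000, size=500)
print(np.allclose(loop_smoothing(sample), closed_form_smoothing(sample)))  # True
```

The same `np.allclose` check on a short slice of `large_array` is a quick way to test any vectorized variant against the loop before trusting its timing.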

Let me know what you think about this in the comments. I am by no means a professional developer, so I am accepting all comments, criticisms, or educational opportunities.

Until next time.

Happy coding!
