ResNet vs EfficientNet vs VGG vs NasNet

As a student, I've witnessed firsthand the frustration caused by our university's inefficient lost and found system. The current process, reliant on individual emails for each found item, often leads to delays and missed connections between lost belongings and their owners.

Driven by a desire to improve this experience for myself and my fellow students, I've embarked on a project to explore the potential of deep learning in revolutionizing our lost and found system. In this blog post, I'll share my journey of evaluating pretrained models - ResNet, EfficientNet, VGG, and NasNet - to automate the identification and categorization of lost items.

Through a comparative analysis, I aim to pinpoint the most suitable model for integrating into our system, ultimately creating a faster, more accurate, and user-friendly lost and found experience for everyone on campus.

ResNet (Inception-ResNet V2)

Inception-ResNet V2 is a powerful convolutional neural network architecture available in Keras, combining the Inception architecture's strengths with residual connections from ResNet. This hybrid model aims to achieve high accuracy in image classification tasks while maintaining computational efficiency.

Training Dataset: ImageNet

Image Format: 299 x 299

Preprocessing function

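    import numpy as np
    from tensorflow.keras.preprocessing.image import load_img, img_to_array
    # Alias assumed from the names used in this post.
    from tensorflow.keras.applications.inception_resnet_v2 import preprocess_input as preprocess_input_resnet

    def readyForResNet(fileName):
        pic = load_img(fileName, target_size=(299, 299))  # resize to the model's input size
        pic_array = img_to_array(pic)
        expanded = np.expand_dims(pic_array, axis=0)  # add the batch dimension
        return preprocess_input_resnet(expanded)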
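
For intuition about what the final `preprocess_input` call does: to my knowledge, the Keras preprocessing for Inception-ResNet V2 rescales every pixel value from the 0-255 range into [-1, 1]. The snippet below is a plain-Python sketch of that arithmetic only, not the Keras implementation, and `scale_to_unit_range` is a hypothetical helper name.

```python
def scale_to_unit_range(pixels):
    """Map pixel values from [0, 255] to [-1.0, 1.0] (sketch of 'tf'-style scaling)."""
    return [p / 127.5 - 1.0 for p in pixels]


# Three sample channel values: minimum, midpoint and maximum.
print(scale_to_unit_range([0, 127.5, 255]))  # [-1.0, 0.0, 1.0]
```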

Predicting

    data1 = readyForResNet(test_file)
    prediction = inception_model_resnet.predict(data1)
    res1 = decode_predictions_resnet(prediction, top=2)
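
The `top=2` argument asks the decoder for the two most likely ImageNet labels. The ranking idea behind it can be sketched in plain Python as follows. `top_k`, the labels, and the scores are all made up for illustration; this is not how Keras implements `decode_predictions`.

```python
def top_k(probabilities, labels, k=2):
    """Return the k (label, probability) pairs with the highest probability."""
    ranked = sorted(zip(labels, probabilities), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


# Made-up scores for three made-up classes, purely for illustration.
labels = ["backpack", "umbrella", "water_bottle"]
scores = [0.15, 0.05, 0.80]
print(top_k(scores, labels, k=2))  # [('water_bottle', 0.8), ('backpack', 0.15)]
```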

VGG (Visual Geometry Group)

VGG (Visual Geometry Group) is a family of deep convolutional neural network architectures known for their simplicity and effectiveness in image classification tasks. These models, particularly VGG16 and VGG19, gained popularity due to their strong performance in the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) in 2014.

Training Dataset: ImageNet

Image Format: 224 x 224

Preprocessing function

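    # Alias assumed from the names used in this post.
    from tensorflow.keras.applications.vgg19 import preprocess_input as preprocess_input_vgg19

    def readyForVGG(fileName):
        pic = load_img(fileName, target_size=(224, 224))  # resize to the model's input size
        pic_array = img_to_array(pic)
        expanded = np.expand_dims(pic_array, axis=0)  # add the batch dimension
        return preprocess_input_vgg19(expanded)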
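
To my knowledge, the VGG `preprocess_input` in Keras does not squeeze pixels into a small range. Instead it reorders the channels from RGB to BGR and subtracts the ImageNet per-channel mean. Here is a plain-Python sketch of that step for a single pixel; `vgg_style_pixel` is a hypothetical helper, not a Keras function.

```python
# ImageNet channel means in BGR order, as used by Caffe-style preprocessing.
IMAGENET_MEAN_BGR = (103.939, 116.779, 123.68)


def vgg_style_pixel(rgb):
    """Convert one (R, G, B) pixel to mean-centered (B, G, R) values."""
    r, g, b = rgb
    bgr = (b, g, r)
    return tuple(value - mean for value, mean in zip(bgr, IMAGENET_MEAN_BGR))


# A pixel that equals the ImageNet mean colour ends up at zero in every channel.
print(vgg_style_pixel((123.68, 116.779, 103.939)))  # (0.0, 0.0, 0.0)
```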

Predicting

    data2 = readyForVGG(test_file)
    prediction = inception_model_vgg19.predict(data2)
    res2 = decode_predictions_vgg19(prediction, top=2)

EfficientNet

EfficientNet is a family of convolutional neural network architectures that achieved state-of-the-art ImageNet accuracy when they were introduced, while being significantly smaller and faster than earlier models of comparable accuracy. This efficiency comes from a compound scaling method that scales network depth, width, and input resolution together instead of tuning each one separately.
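
To make compound scaling a little more concrete: the EfficientNet paper scales depth, width, and resolution by α^φ, β^φ, and γ^φ for a single coefficient φ, with α = 1.2, β = 1.1, γ = 1.15 chosen so that α·β²·γ² is roughly 2. The sketch below just evaluates those formulas. The function names are mine, and the released B1-B7 models use their own published settings, so treat this as the idea rather than the exact recipe.

```python
# Base coefficients reported in the EfficientNet paper for the B0 baseline.
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15  # depth, width, resolution


def compound_scale(phi):
    """Return (depth, width, resolution) multipliers for compound coefficient phi."""
    return ALPHA ** phi, BETA ** phi, GAMMA ** phi


def flops_multiplier(phi):
    """Approximate FLOPs growth: depth * width^2 * resolution^2."""
    depth, width, resolution = compound_scale(phi)
    return depth * width ** 2 * resolution ** 2


for phi in range(4):
    d, w, r = compound_scale(phi)
    print(f"phi={phi}: depth x{d:.2f}, width x{w:.2f}, resolution x{r:.2f}, "
          f"FLOPs x{flops_multiplier(phi):.2f}")
```

Each step of φ roughly doubles the compute, which is why the larger variants get expensive quickly.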

Training Dataset: ImageNet

Image Format: 480 x 480

Preprocessing function

    def readyForEF(fileName):
        pic = load_img(fileName, target_size=(480, 480))
        pic_array = img_to_array(pic)
        expanded = np.expand_dims(pic_array, axis=0)
        return preprocess_input_EF(expanded)

Predicting

    data3 = readyForEF(test_file)
    prediction = inception_model_EF.predict(data3)
    res3 = decode_predictions_EF(prediction, top=2)

NasNet

NasNet (Neural Architecture Search Network) represents a groundbreaking approach in deep learning where the architecture of the neural network itself is discovered through an automated search process. This search process aims to find the optimal combination of layers and connections to achieve high performance on a given task.

Training Dataset: ImageNet

Image Format: 224 x 224

Preprocessing function

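    # Alias assumed from the names used in this post.
    from tensorflow.keras.applications.nasnet import preprocess_input as preprocess_input_NN

    def readyForNN(fileName):
        pic = load_img(fileName, target_size=(224, 224))  # resize to the model's input size
        pic_array = img_to_array(pic)
        expanded = np.expand_dims(pic_array, axis=0)  # add the batch dimension
        return preprocess_input_NN(expanded)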

Predicting

    data4 = readyForNN(test_file)
    prediction = inception_model_NN.predict(data4)
    res4 = decode_predictions_NN(prediction, top=2)

Showdown

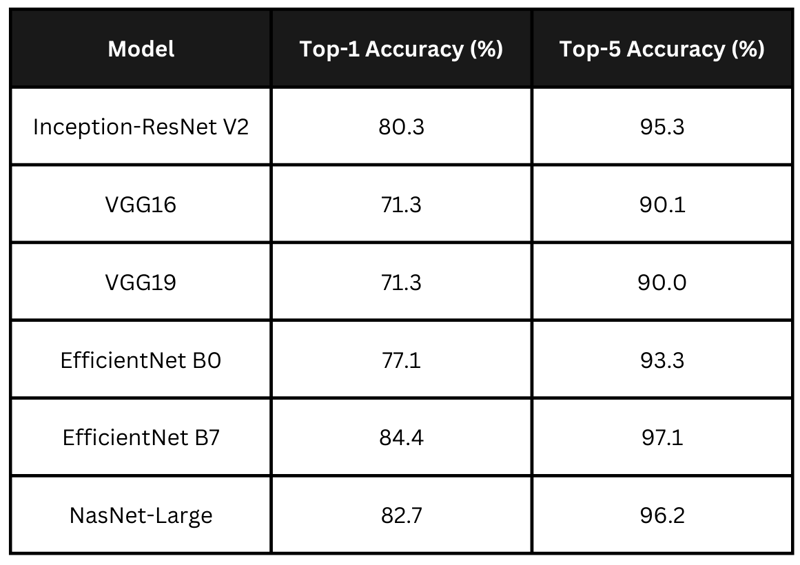

Accuracy

The table summarizes the reported accuracy scores of the models above. EfficientNet B7 leads with the highest accuracy, followed closely by NasNet-Large and Inception-ResNet V2. The VGG models score lower. For my application, I want a model that balances processing time and accuracy.
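
One simple way to express that balance is "the most accurate model that still fits a time budget". The sketch below shows that rule. The model names and numbers are placeholders I invented for illustration, not values from the table, and `pick_model` is a hypothetical helper.

```python
def pick_model(candidates, max_seconds):
    """Return the most accurate candidate whose latency fits the budget, or None."""
    affordable = [c for c in candidates if c["seconds"] <= max_seconds]
    if not affordable:
        return None
    return max(affordable, key=lambda c: c["accuracy"])


# Placeholder numbers, NOT measurements from this post.
candidates = [
    {"name": "model_a", "accuracy": 0.70, "seconds": 0.5},
    {"name": "model_b", "accuracy": 0.80, "seconds": 1.0},
    {"name": "model_c", "accuracy": 0.85, "seconds": 4.0},
]
print(pick_model(candidates, max_seconds=2.0)["name"])  # model_b
```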

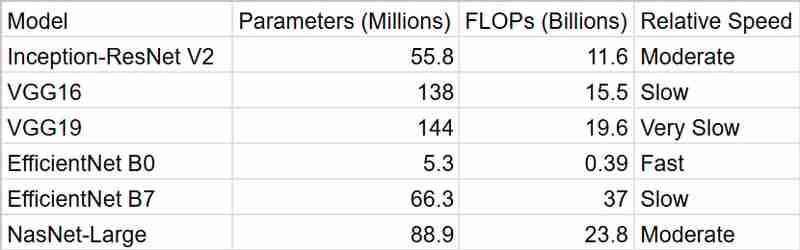

Time

As we can see, EfficientNetB0 gives the fastest results, but InceptionResNetV2 is the better package once accuracy is taken into account.
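
Timings like these can be collected with a small harness around the prediction call. The following is a minimal sketch, not necessarily how the numbers in the chart were produced: `average_seconds` and `fake_predict` are hypothetical names, and `fake_predict` is only a stand-in for a real `model.predict(...)` call.

```python
import time


def average_seconds(fn, *args, warmup=1, runs=5):
    """Call fn(*args) a few times and return the mean wall-clock time per call."""
    for _ in range(warmup):
        fn(*args)  # warm-up calls are not timed (first calls often include setup cost)
    start = time.perf_counter()
    for _ in range(runs):
        fn(*args)
    return (time.perf_counter() - start) / runs


# Stand-in workload; in practice this would be something like model.predict(batch).
def fake_predict(n):
    return sum(i * i for i in range(n))


print(f"{average_seconds(fake_predict, 100_000):.6f} s per call")
```

Averaging over several runs after a warm-up call helps keep one-off setup costs out of the comparison.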

Summary

For my smart lost and found system, I decided to go with InceptionResNetV2. While EfficientNet B7 looked tempting with its top-notch accuracy, I was concerned about its computational demands. In a university setting, where resources might be limited and real-time performance is often desirable, I felt it was important to strike a balance between accuracy and efficiency. InceptionResNetV2 seemed like the perfect fit - it offers strong performance without being overly computationally intensive.

Plus, the fact that it's pretrained on ImageNet gives me confidence that it can handle the diverse range of objects people might lose. And let's not forget how easy it is to work with in Keras! That definitely made my decision easier.

Overall, I believe InceptionResNetV2 provides the right mix of accuracy, efficiency, and practicality for my project. I'm excited to see how it performs in helping reunite lost items with their owners!
