使用 Pandas 进行 JIRA 分析

Problem

It's hard to argue Atlassian JIRA is one of the most popular issue trackers and project management solutions. You can love it, you can hate it, but if you were hired as a software engineer for some company, there is a high probability of meeting JIRA.

If the project you are working on is very active, there can be thousands of JIRA issues of various types. If you are leading a team of engineers, you can be interested in analytical tools that can help you understand what is going on in the project based on data stored in JIRA. JIRA has some reporting facilities integrated, as well as 3rd party plugins. But most of them are pretty basic. For example, it's hard to find rather flexible "forecasting" tools.

The bigger the project, the less satisfied you are with integrated reporting tools. At some point, you will end up using an API to extract, manipulate, and visualize the data. During the last 15 years of JIRA usage, I saw dozens of such scripts and services in various programming languages around this domain.

Many day-to-day tasks may require one-time data analysis, so writing services every time doesn't pay off. You can treat JIRA as a data source and use a typical data analytics tool belt. For example, you may take Jupyter, fetch the list of recent bugs in the project, prepare a list of "features" (attributes valuable for analysis), utilize pandas to calculate the statistics, and try to forecast trends using scikit-learn. In this article, I would like to explain how to do it.

Preparation

JIRA API Access

Here, we will talk about the cloud version of JIRA. But if you are using a self-hosted version, the main concepts are almost the same.

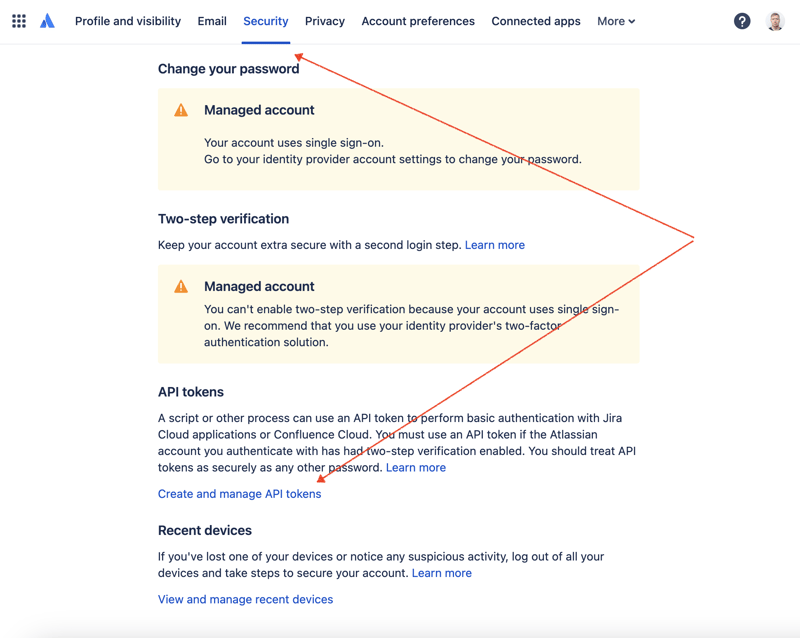

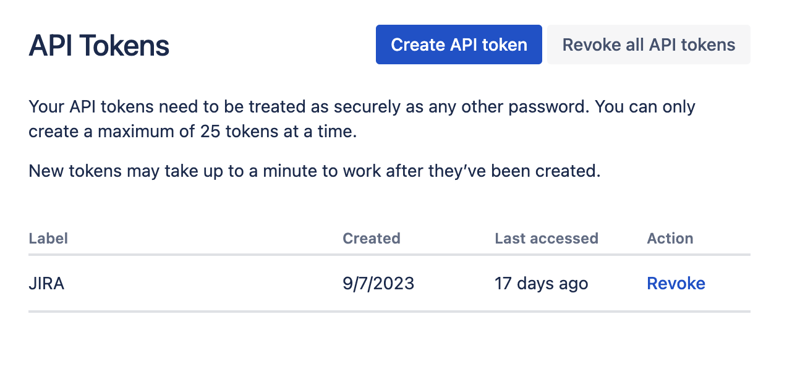

First of all, we need to create a secret key to access JIRA via REST API. To do so, go to profile management - https://id.atlassian.com/manage-profile/profile-and-visibility If you select the "Security" tab, you will find the "Create and manage API tokens" link:

Create a new API token here and store it securely. We will use this token later.

Jupyter Notebooks

One of the most convenient ways to play with datasets is to utilize Jupyter. If you are not familiar with this tool, do not worry. I will show how to use it to solve our problem. For local experiments, I like to use DataSpell by JetBrains, but there are services available online and for free. One of the most well-known services among data scientists is Kaggle. However, their notebooks don't allow you to make external connections to access JIRA via API. Another very popular service is Colab by Google. It allows you to make remote connections and install additional Python modules.

JIRA has a pretty easy-to-use REST API. You can make API calls using your favorite way of doing HTTP requests and parse the response manually. However, we will utilize an excellent and very popular jira module for that purpose.

Tools in Action

Data Analysis

Let's combine all the parts to come up with the solution.

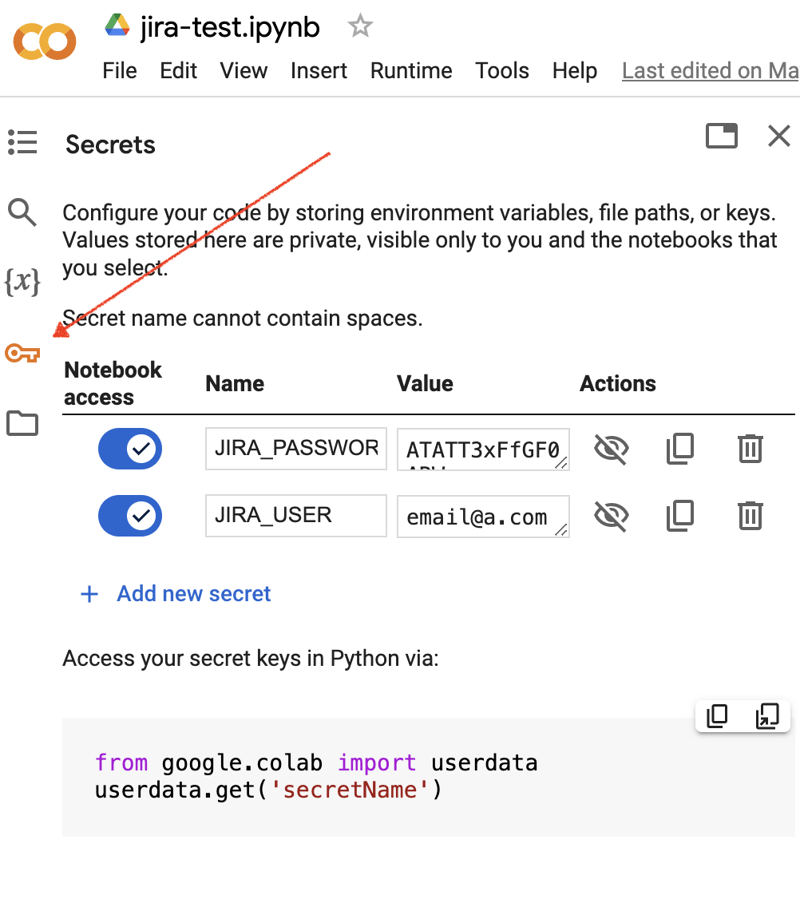

Go to the Google Colab interface and create a new notebook. After the notebook creation, we need to store previously obtained JIRA credentials as "secrets." Click the "Key" icon in the left toolbar to open the appropriate dialog and add two "secrets" with the following names: JIRA_USER and JIRA_PASSWORD. At the bottom of the screen, you can see the way how to access these "secrets":

The next thing is to install an additional Python module for JIRA integration. We can do it by executing the shell command in the scope of the notebook cell:

!pip install jira

The output should look something like the following:

Collecting jira

Downloading jira-3.8.0-py3-none-any.whl (77 kB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 77.5/77.5 kB 1.3 MB/s eta 0:00:00

Requirement already satisfied: defusedxml in /usr/local/lib/python3.10/dist-packages (from jira) (0.7.1)

...

Installing collected packages: requests-toolbelt, jira

Successfully installed jira-3.8.0 requests-toolbelt-1.0.0

We need to fetch the "secrets"/credentials:

from google.colab import userdata

JIRA_URL = 'https://******.atlassian.net'

JIRA_USER = userdata.get('JIRA_USER')

JIRA_PASSWORD = userdata.get('JIRA_PASSWORD')

And validate the connection to the JIRA Cloud:

from jira import JIRA jira = JIRA(JIRA_URL, basic_auth=(JIRA_USER, JIRA_PASSWORD)) projects = jira.projects() projects

If the connection is ok and the credentials are valid, you should see a non-empty list of your projects:

[, , , ...

So we can connect and fetch data from JIRA. The next step is to fetch some data for analysis with pandas. Let’s try to fetch the list of solved problems during the last several weeks for some project:

JIRA_FILTER = 19762

issues = jira.search_issues(

f'filter={JIRA_FILTER}',

maxResults=False,

fields='summary,issuetype,assignee,reporter,aggregatetimespent',

)

We need to transform the dataset into the pandas data frame:

import pandas as pd

df = pd.DataFrame([{

'key': issue.key,

'assignee': issue.fields.assignee and issue.fields.assignee.displayName or issue.fields.reporter.displayName,

'time': issue.fields.aggregatetimespent,

'summary': issue.fields.summary,

} for issue in issues])

df.set_index('key', inplace=True)

df

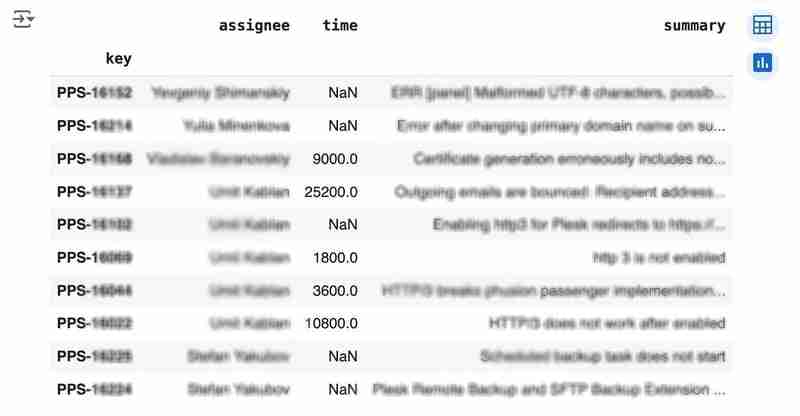

The output may look like the following:

We would like to analyze how much time it usually takes to solve the issue. People are not ideal, so sometimes they forget to log the work. It brings a headache if you try to analyze such data using JIRA built-in tools. But it's not a problem for us to make some adjustments using pandas. For example, we can transform the "time" field from seconds into hours and replace the absent values with the median value (beware, dropna can be more suitable if there are a lot of gaps):

df['time'].fillna(df['time'].median(), inplace=True) df['time'] = df['time'] / 3600

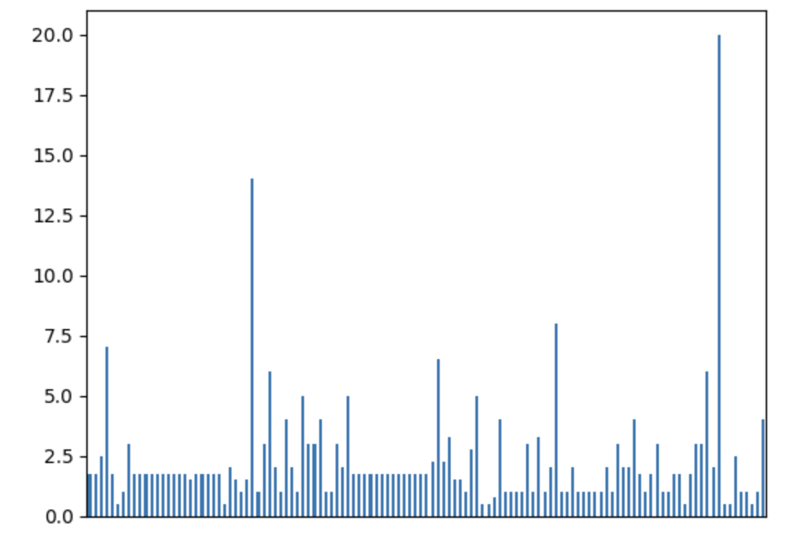

We can easily visualize the distribution to find out anomalies:

df['time'].plot.bar(xlabel='', xticks=[])

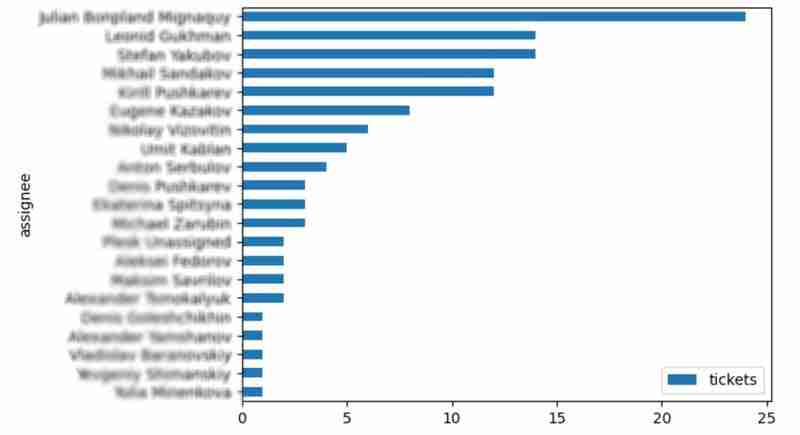

It is also interesting to see the distribution of solved problems by the assignee:

top_solvers = df.groupby('assignee').count()[['time']]

top_solvers.rename(columns={'time': 'tickets'}, inplace=True)

top_solvers.sort_values('tickets', ascending=False, inplace=True)

top_solvers.plot.barh().invert_yaxis()

It may look like the following:

Predictions

Let's try to predict the amount of time required to finish all open issues. Of course, we can do it without machine learning by using simple approximation and the average time to resolve the issue. So the predicted amount of required time is the number of open issues multiplied by the average time to resolve one. For example, the median time to solve one issue is 2 hours, and we have 9 open issues, so the time required to solve them all is 18 hours (approximation). It's a good enough forecast, but we might know the speed of solving depends on the product, team, and other attributes of the issue. If we want to improve the prediction, we can utilize machine learning to solve this task.

The high-level approach looks the following:

- Obtain the dataset for “learning”

- Clean up the data

- Prepare the "features" aka "feature engineering"

- Train the model

- Use the model to predict some value of the target dataset

For the first step, we will use a dataset of tickets for the last 30 weeks. Some parts here are simplified for illustrative purposes. In real life, the amount of data for learning should be big enough to make a useful model (e.g., in our case, we need thousands of issues to be analyzed).

issues = jira.search_issues(

f'project = PPS AND status IN (Resolved) AND created >= -30w',

maxResults=False,

fields='summary,issuetype,customfield_10718,customfield_10674,aggregatetimespent',

)

closed_tickets = pd.DataFrame([{

'key': issue.key,

'team': issue.fields.customfield_10718,

'product': issue.fields.customfield_10674,

'time': issue.fields.aggregatetimespent,

} for issue in issues])

closed_tickets.set_index('key', inplace=True)

closed_tickets['time'].fillna(closed_tickets['time'].median(), inplace=True)

closed_tickets

In my case, it's something around 800 tickets and only two fields for "learning": "team" and "product."

The next step is to obtain our target dataset. Why do I do it so early? I want to clean up and do "feature engineering" in one shot for both datasets. Otherwise, the mismatch between the structures can cause problems.

issues = jira.search_issues(

f'project = PPS AND status IN (Open, Reopened)',

maxResults=False,

fields='summary,issuetype,customfield_10718,customfield_10674',

)

open_tickets = pd.DataFrame([{

'key': issue.key,

'team': issue.fields.customfield_10718,

'product': issue.fields.customfield_10674,

} for issue in issues])

open_tickets.set_index('key', inplace=True)

open_tickets

Please notice we have no "time" column here because we want to predict it. Let's nullify it and combine both datasets to prepare the "features."

open_tickets['time'] = 0 tickets = pd.concat([closed_tickets, open_tickets]) tickets

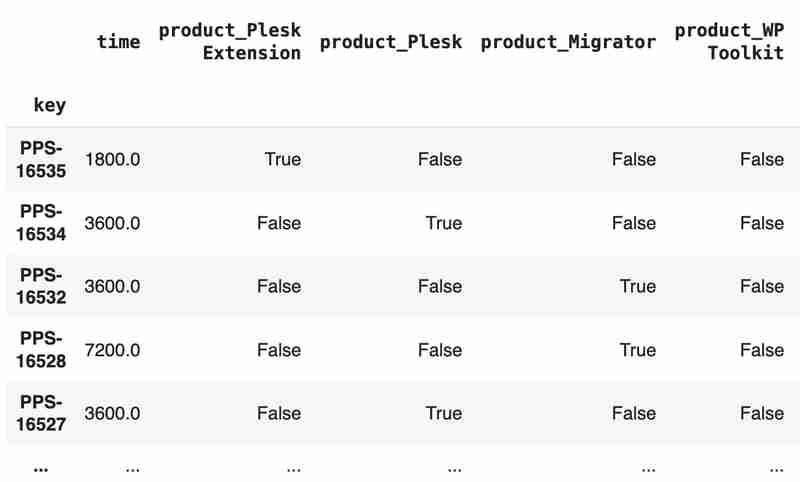

Columns "team" and "product" contain string values. One of the ways of dealing with that is to transform each value into separate fields with boolean flags.

products = pd.get_dummies(tickets['product'], prefix='product')

tickets = pd.concat([tickets, products], axis=1)

tickets.drop('product', axis=1, inplace=True)

teams = pd.get_dummies(tickets['team'], prefix='team')

tickets = pd.concat([tickets, teams], axis=1)

tickets.drop('team', axis=1, inplace=True)

tickets

The result may look like the following:

After the combined dataset preparation, we can split it back into two parts:

closed_tickets = tickets[:len(closed_tickets)] open_tickets = tickets[len(closed_tickets):][:]

Now it's time to train our model:

from sklearn.model_selection import train_test_split from sklearn.tree import DecisionTreeRegressor features = closed_tickets.drop(['time'], axis=1) labels = closed_tickets['time'] features_train, features_val, labels_train, labels_val = train_test_split(features, labels, test_size=0.2) model = DecisionTreeRegressor() model.fit(features_train, labels_train) model.score(features_val, labels_val)

And the final step is to use our model to make a prediction:

open_tickets['time'] = model.predict(open_tickets.drop('time', axis=1, errors='ignore'))

open_tickets['time'].sum() / 3600

The final output, in my case, is 25 hours, which is higher than our initial rough estimation. This was a basic example. However, by using ML tools, you can significantly expand your abilities to analyze JIRA data.

Conclusion

Sometimes, JIRA built-in tools and plugins are not sufficient for effective analysis. Moreover, many 3rd party plugins are rather expensive, costing thousands of dollars per year, and you will still struggle to make them work the way you want. However, you can easily utilize well-known data analysis tools by fetching necessary information via JIRA API and go beyond these limitations. I spent so many hours playing with various JIRA plugins in attempts to create good reports for projects, but they often missed some important parts. Building a tool or a full-featured service on top of JIRA API also often looks like overkill. That's why typical data analysis and ML tools like Jupiter, pandas, matplotlib, scikit-learn, and others may work better here.

-

为什么我会收到MySQL错误#1089:错误的前缀密钥?mySQL错误#1089:错误的前缀键错误descript [#1089-不正确的前缀键在尝试在表中创建一个prefix键时会出现。前缀键旨在索引字符串列的特定前缀长度长度,可以更快地搜索这些前缀。了解prefix keys `这将在整个Movie_ID列上创建标准主键。主密钥对于唯一识别...编程 发布于2025-07-14

为什么我会收到MySQL错误#1089:错误的前缀密钥?mySQL错误#1089:错误的前缀键错误descript [#1089-不正确的前缀键在尝试在表中创建一个prefix键时会出现。前缀键旨在索引字符串列的特定前缀长度长度,可以更快地搜索这些前缀。了解prefix keys `这将在整个Movie_ID列上创建标准主键。主密钥对于唯一识别...编程 发布于2025-07-14 -

如何高效地在一个事务中插入数据到多个MySQL表?mySQL插入到多个表中,该数据可能会产生意外的结果。虽然似乎有多个查询可以解决问题,但将从用户表的自动信息ID与配置文件表的手动用户ID相关联提出了挑战。使用Transactions和last_insert_id() 插入用户(用户名,密码)值('test','test...编程 发布于2025-07-14

如何高效地在一个事务中插入数据到多个MySQL表?mySQL插入到多个表中,该数据可能会产生意外的结果。虽然似乎有多个查询可以解决问题,但将从用户表的自动信息ID与配置文件表的手动用户ID相关联提出了挑战。使用Transactions和last_insert_id() 插入用户(用户名,密码)值('test','test...编程 发布于2025-07-14 -

哪种方法更有效地用于点 - 填点检测:射线跟踪或matplotlib \的路径contains_points?在Python Matplotlib's path.contains_points FunctionMatplotlib's path.contains_points function employs a path object to represent the polygon.它...编程 发布于2025-07-14

哪种方法更有效地用于点 - 填点检测:射线跟踪或matplotlib \的路径contains_points?在Python Matplotlib's path.contains_points FunctionMatplotlib's path.contains_points function employs a path object to represent the polygon.它...编程 发布于2025-07-14 -

eval()vs. ast.literal_eval():对于用户输入,哪个Python函数更安全?称量()和ast.literal_eval()中的Python Security 在使用用户输入时,必须优先确保安全性。强大的python功能eval()通常是作为潜在解决方案而出现的,但担心其潜在风险。本文深入研究了eval()和ast.literal_eval()之间的差异,突出显示其安全性含义...编程 发布于2025-07-14

eval()vs. ast.literal_eval():对于用户输入,哪个Python函数更安全?称量()和ast.literal_eval()中的Python Security 在使用用户输入时,必须优先确保安全性。强大的python功能eval()通常是作为潜在解决方案而出现的,但担心其潜在风险。本文深入研究了eval()和ast.literal_eval()之间的差异,突出显示其安全性含义...编程 发布于2025-07-14 -

如何从PHP中的Unicode字符串中有效地产生对URL友好的sl。为有效的slug生成首先,该函数用指定的分隔符替换所有非字母或数字字符。此步骤可确保slug遵守URL惯例。随后,它采用ICONV函数将文本简化为us-ascii兼容格式,从而允许更广泛的字符集合兼容性。接下来,该函数使用正则表达式删除了不需要的字符,例如特殊字符和空格。此步骤可确保slug仅包含...编程 发布于2025-07-14

如何从PHP中的Unicode字符串中有效地产生对URL友好的sl。为有效的slug生成首先,该函数用指定的分隔符替换所有非字母或数字字符。此步骤可确保slug遵守URL惯例。随后,它采用ICONV函数将文本简化为us-ascii兼容格式,从而允许更广泛的字符集合兼容性。接下来,该函数使用正则表达式删除了不需要的字符,例如特殊字符和空格。此步骤可确保slug仅包含...编程 发布于2025-07-14 -

如何处理PHP文件系统功能中的UTF-8文件名?在PHP的Filesystem functions中处理UTF-8 FileNames 在使用PHP的MKDIR函数中含有UTF-8字符的文件很多flusf-8字符时,您可能会在Windows Explorer中遇到comploreer grounder grounder grounder gro...编程 发布于2025-07-14

如何处理PHP文件系统功能中的UTF-8文件名?在PHP的Filesystem functions中处理UTF-8 FileNames 在使用PHP的MKDIR函数中含有UTF-8字符的文件很多flusf-8字符时,您可能会在Windows Explorer中遇到comploreer grounder grounder grounder gro...编程 发布于2025-07-14 -

如何使用Python的请求和假用户代理绕过网站块?如何使用Python的请求模拟浏览器行为,以及伪造的用户代理提供了一个用户 - 代理标头一个有效方法是提供有效的用户式header,以提供有效的用户 - 设置,该标题可以通过browser和Acterner Systems the equestersystermery和操作系统。通过模仿像Chro...编程 发布于2025-07-14

如何使用Python的请求和假用户代理绕过网站块?如何使用Python的请求模拟浏览器行为,以及伪造的用户代理提供了一个用户 - 代理标头一个有效方法是提供有效的用户式header,以提供有效的用户 - 设置,该标题可以通过browser和Acterner Systems the equestersystermery和操作系统。通过模仿像Chro...编程 发布于2025-07-14 -

如何有效地转换PHP中的时区?在PHP 利用dateTime对象和functions DateTime对象及其相应的功能别名为时区转换提供方便的方法。例如: //定义用户的时区 date_default_timezone_set('欧洲/伦敦'); //创建DateTime对象 $ dateTime = ne...编程 发布于2025-07-14

如何有效地转换PHP中的时区?在PHP 利用dateTime对象和functions DateTime对象及其相应的功能别名为时区转换提供方便的方法。例如: //定义用户的时区 date_default_timezone_set('欧洲/伦敦'); //创建DateTime对象 $ dateTime = ne...编程 发布于2025-07-14 -

如何使用PHP将斑点(图像)正确插入MySQL?essue VALUES('$this->image_id','file_get_contents($tmp_image)')";This code builds a string in PHP, but the function call ...编程 发布于2025-07-14

如何使用PHP将斑点(图像)正确插入MySQL?essue VALUES('$this->image_id','file_get_contents($tmp_image)')";This code builds a string in PHP, but the function call ...编程 发布于2025-07-14 -

Python中嵌套函数与闭包的区别是什么嵌套函数与python 在python中的嵌套函数不被考虑闭合,因为它们不符合以下要求:不访问局部范围scliables to incling scliables在封装范围外执行范围的局部范围。 make_printer(msg): DEF打印机(): 打印(味精) ...编程 发布于2025-07-14

Python中嵌套函数与闭包的区别是什么嵌套函数与python 在python中的嵌套函数不被考虑闭合,因为它们不符合以下要求:不访问局部范围scliables to incling scliables在封装范围外执行范围的局部范围。 make_printer(msg): DEF打印机(): 打印(味精) ...编程 发布于2025-07-14 -

Python元类工作原理及类创建与定制python中的metaclasses是什么? Metaclasses负责在Python中创建类对象。就像类创建实例一样,元类也创建类。他们提供了对类创建过程的控制层,允许自定义类行为和属性。在Python中理解类作为对象的概念,类是描述用于创建新实例或对象的蓝图的对象。这意味着类本身是使用类关...编程 发布于2025-07-14

Python元类工作原理及类创建与定制python中的metaclasses是什么? Metaclasses负责在Python中创建类对象。就像类创建实例一样,元类也创建类。他们提供了对类创建过程的控制层,允许自定义类行为和属性。在Python中理解类作为对象的概念,类是描述用于创建新实例或对象的蓝图的对象。这意味着类本身是使用类关...编程 发布于2025-07-14 -

PHP阵列键值异常:了解07和08的好奇情况PHP数组键值问题,使用07&08 在给定数月的数组中,键值07和08呈现令人困惑的行为时,就会出现一个不寻常的问题。运行print_r($月)返回意外结果:键“ 07”丢失,而键“ 08”分配给了9月的值。此问题源于PHP对领先零的解释。当一个数字带有0(例如07或08)的前缀时,PHP将其...编程 发布于2025-07-14

PHP阵列键值异常:了解07和08的好奇情况PHP数组键值问题,使用07&08 在给定数月的数组中,键值07和08呈现令人困惑的行为时,就会出现一个不寻常的问题。运行print_r($月)返回意外结果:键“ 07”丢失,而键“ 08”分配给了9月的值。此问题源于PHP对领先零的解释。当一个数字带有0(例如07或08)的前缀时,PHP将其...编程 发布于2025-07-14 -

在C#中如何高效重复字符串字符用于缩进?在基于项目的深度下固定字符串时,重复一个字符串以进行凹痕,很方便有效地有一种有效的方法来返回字符串重复指定的次数的字符串。使用指定的次数。 constructor 这将返回字符串“ -----”。 字符串凹痕= new String(' - ',depth); console.Wr...编程 发布于2025-07-14

在C#中如何高效重复字符串字符用于缩进?在基于项目的深度下固定字符串时,重复一个字符串以进行凹痕,很方便有效地有一种有效的方法来返回字符串重复指定的次数的字符串。使用指定的次数。 constructor 这将返回字符串“ -----”。 字符串凹痕= new String(' - ',depth); console.Wr...编程 发布于2025-07-14 -

C++中如何将独占指针作为函数或构造函数参数传递?在构造函数和函数中将唯一的指数管理为参数 unique pointers( unique_ptr [2启示。通过值: base(std :: simelor_ptr n) :next(std :: move(n)){} 此方法将唯一指针的所有权转移到函数/对象。指针的内容被移至功能中,在操作...编程 发布于2025-07-14

C++中如何将独占指针作为函数或构造函数参数传递?在构造函数和函数中将唯一的指数管理为参数 unique pointers( unique_ptr [2启示。通过值: base(std :: simelor_ptr n) :next(std :: move(n)){} 此方法将唯一指针的所有权转移到函数/对象。指针的内容被移至功能中,在操作...编程 发布于2025-07-14 -

表单刷新后如何防止重复提交?在Web开发中预防重复提交 在表格提交后刷新页面时,遇到重复提交的问题是常见的。要解决这个问题,请考虑以下方法: 想象一下具有这样的代码段,看起来像这样的代码段:)){ //数据库操作... 回声“操作完成”; 死(); } ?> ...编程 发布于2025-07-14

表单刷新后如何防止重复提交?在Web开发中预防重复提交 在表格提交后刷新页面时,遇到重复提交的问题是常见的。要解决这个问题,请考虑以下方法: 想象一下具有这样的代码段,看起来像这样的代码段:)){ //数据库操作... 回声“操作完成”; 死(); } ?> ...编程 发布于2025-07-14

学习中文

- 1 走路用中文怎么说?走路中文发音,走路中文学习

- 2 坐飞机用中文怎么说?坐飞机中文发音,坐飞机中文学习

- 3 坐火车用中文怎么说?坐火车中文发音,坐火车中文学习

- 4 坐车用中文怎么说?坐车中文发音,坐车中文学习

- 5 开车用中文怎么说?开车中文发音,开车中文学习

- 6 游泳用中文怎么说?游泳中文发音,游泳中文学习

- 7 骑自行车用中文怎么说?骑自行车中文发音,骑自行车中文学习

- 8 你好用中文怎么说?你好中文发音,你好中文学习

- 9 谢谢用中文怎么说?谢谢中文发音,谢谢中文学习

- 10 How to say goodbye in Chinese? 再见Chinese pronunciation, 再见Chinese learning