使用 Topc 進行主題建模:Dreyfus、AI 和 Wordclouds

發佈於2024-07-30

使用 Python 从 PDF 中提取见解:综合指南

该脚本演示了用于处理 PDF、提取文本、标记句子以及通过可视化执行主题建模的强大工作流程,专为高效且富有洞察力的分析而定制。

库概述

- os:提供与操作系统交互的功能。

- matplotlib.pyplot:用于在 Python 中创建静态、动画和交互式可视化。

- nltk:自然语言工具包,一套用于自然语言处理的库和程序。

- pandas:数据操作和分析库。

- pdftotext:用于将 PDF 文档转换为纯文本的库。

- re:提供正则表达式匹配操作。

- seaborn:基于matplotlib的统计数据可视化库。

- nltk.tokenize.sent_tokenize:NLTK 函数将字符串标记为句子。

- top2vec:主题建模和语义搜索库。

- wordcloud:用于从文本数据创建词云的库。

初始设置

导入模块

import os import matplotlib.pyplot as plt import nltk import pandas as pd import pdftotext import re import seaborn as sns from nltk.tokenize import sent_tokenize from top2vec import Top2Vec from wordcloud import WordCloud from cleantext import clean

接下来,确保下载 punkt tokenizer:

nltk.download('punkt')

文本规范化

def normalize_text(text):

"""Normalize text by removing special characters and extra spaces,

and applying various other cleaning options."""

# Apply the clean function with specified parameters

cleaned_text = clean(

text,

fix_unicode=True, # fix various unicode errors

to_ascii=True, # transliterate to closest ASCII representation

lower=True, # lowercase text

no_line_breaks=False, # fully strip line breaks as opposed to only normalizing them

no_urls=True, # replace all URLs with a special token

no_emails=True, # replace all email addresses with a special token

no_phone_numbers=True, # replace all phone numbers with a special token

no_numbers=True, # replace all numbers with a special token

no_digits=True, # replace all digits with a special token

no_currency_symbols=True, # replace all currency symbols with a special token

no_punct=False, # remove punctuations

lang="en", # set to 'de' for German special handling

)

# Further clean the text by removing any remaining special characters except word characters, whitespace, and periods/commas

cleaned_text = re.sub(r"[^\w\s.,]", "", cleaned_text)

# Replace multiple whitespace characters with a single space and strip leading/trailing spaces

cleaned_text = re.sub(r"\s ", " ", cleaned_text).strip()

return cleaned_text

PDF文本提取

def extract_text_from_pdf(pdf_path):

with open(pdf_path, "rb") as f:

pdf = pdftotext.PDF(f)

all_text = "\n\n".join(pdf)

return normalize_text(all_text)

句子标记化

def split_into_sentences(text):

return sent_tokenize(text)

处理多个文件

def process_files(file_paths):

authors, titles, all_sentences = [], [], []

for file_path in file_paths:

file_name = os.path.basename(file_path)

parts = file_name.split(" - ", 2)

if len(parts) != 3 or not file_name.endswith(".pdf"):

print(f"Skipping file with incorrect format: {file_name}")

continue

year, author, title = parts

author, title = author.strip(), title.replace(".pdf", "").strip()

try:

text = extract_text_from_pdf(file_path)

except Exception as e:

print(f"Error extracting text from {file_name}: {e}")

continue

sentences = split_into_sentences(text)

authors.append(author)

titles.append(title)

all_sentences.extend(sentences)

print(f"Number of sentences for {file_name}: {len(sentences)}")

return authors, titles, all_sentences

将数据保存到 CSV

def save_data_to_csv(authors, titles, file_paths, output_file):

texts = []

for fp in file_paths:

try:

text = extract_text_from_pdf(fp)

sentences = split_into_sentences(text)

texts.append(" ".join(sentences))

except Exception as e:

print(f"Error processing file {fp}: {e}")

texts.append("")

data = pd.DataFrame({

"Author": authors,

"Title": titles,

"Text": texts

})

data.to_csv(output_file, index=False, quoting=1, encoding='utf-8')

print(f"Data has been written to {output_file}")

加载停用词

def load_stopwords(filepath):

with open(filepath, "r") as f:

stopwords = f.read().splitlines()

additional_stopwords = ["able", "according", "act", "actually", "after", "again", "age", "agree", "al", "all", "already", "also", "am", "among", "an", "and", "another", "any", "appropriate", "are", "argue", "as", "at", "avoid", "based", "basic", "basis", "be", "been", "begin", "best", "book", "both", "build", "but", "by", "call", "can", "cant", "case", "cases", "claim", "claims", "class", "clear", "clearly", "cope", "could", "course", "data", "de", "deal", "dec", "did", "do", "doesnt", "done", "dont", "each", "early", "ed", "either", "end", "etc", "even", "ever", "every", "far", "feel", "few", "field", "find", "first", "follow", "follows", "for", "found", "free", "fri", "fully", "get", "had", "hand", "has", "have", "he", "help", "her", "here", "him", "his", "how", "however", "httpsabout", "ibid", "if", "im", "in", "is", "it", "its", "jstor", "june", "large", "lead", "least", "less", "like", "long", "look", "man", "many", "may", "me", "money", "more", "most", "move", "moves", "my", "neither", "net", "never", "new", "no", "nor", "not", "notes", "notion", "now", "of", "on", "once", "one", "ones", "only", "open", "or", "order", "orgterms", "other", "our", "out", "own", "paper", "past", "place", "plan", "play", "point", "pp", "precisely", "press", "put", "rather", "real", "require", "right", "risk", "role", "said", "same", "says", "search", "second", "see", "seem", "seems", "seen", "sees", "set", "shall", "she", "should", "show", "shows", "since", "so", "step", "strange", "style", "such", "suggests", "talk", "tell", "tells", "term", "terms", "than", "that", "the", "their", "them", "then", "there", "therefore", "these", "they", "this", "those", "three", "thus", "to", "todes", "together", "too", "tradition", "trans", "true", "try", "trying", "turn", "turns", "two", "up", "us", "use", "used", "uses", "using", "very", "view", "vol", "was", "way", "ways", "we", "web", "well", "were", "what", "when", "whether", "which", "who", "why", "with", "within", "works", "would", "years", "york", "you", "your", "suggests", "without"]

stopwords.extend(additional_stopwords)

return set(stopwords)

从主题中过滤停用词

def filter_stopwords_from_topics(topic_words, stopwords):

filtered_topics = []

for words in topic_words:

filtered_topics.append([word for word in words if word.lower() not in stopwords])

return filtered_topics

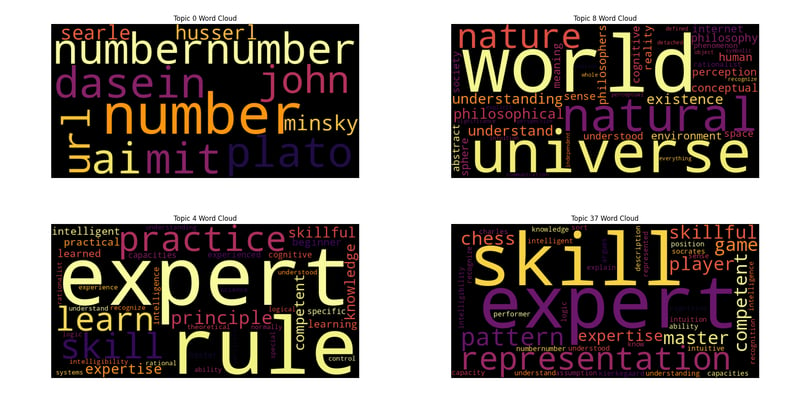

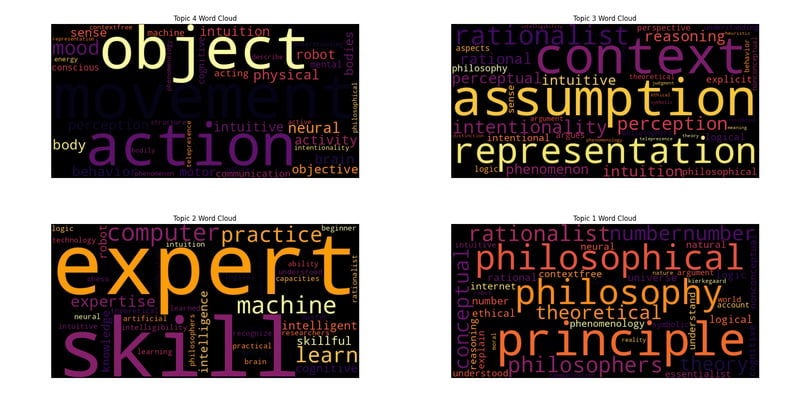

词云生成

def generate_wordcloud(topic_words, topic_num, palette='inferno'):

colors = sns.color_palette(palette, n_colors=256).as_hex()

def color_func(word, font_size, position, orientation, random_state=None, **kwargs):

return colors[random_state.randint(0, len(colors) - 1)]

wordcloud = WordCloud(width=800, height=400, background_color='black', color_func=color_func).generate(' '.join(topic_words))

plt.figure(figsize=(10, 5))

plt.imshow(wordcloud, interpolation='bilinear')

plt.axis('off')

plt.title(f'Topic {topic_num} Word Cloud')

plt.show()

主要执行

file_paths = [f"/home/roomal/Desktop/Dreyfus-Project/Dreyfus/{fname}" for fname in os.listdir("/home/roomal/Desktop/Dreyfus-Project/Dreyfus/") if fname.endswith(".pdf")]

authors, titles, all_sentences = process_files(file_paths)

output_file = "/home/roomal/Desktop/Dreyfus-Project/Dreyfus_Papers.csv"

save_data_to_csv(authors, titles, file_paths, output_file)

stopwords_filepath = "/home/roomal/Documents/Lists/stopwords.txt"

stopwords = load_stopwords(stopwords_filepath)

try:

topic_model = Top2Vec(

all_sentences,

embedding_model="distiluse-base-multilingual-cased",

speed="deep-learn",

workers=6

)

print("Top2Vec model created successfully.")

except ValueError as e:

print(f"Error initializing Top2Vec: {e}")

except Exception as e:

print(f"Unexpected error: {e}")

num_topics = topic_model.get_num_topics()

topic_words, word_scores, topic_nums = topic_model.get_topics(num_topics)

filtered_topic_words = filter_stopwords_from_topics(topic_words, stopwords)

for i, words in enumerate(filtered_topic_words):

print(f"Topic {i}: {', '.join(words)}")

keywords = ["heidegger"]

topic_words, word_scores, topic_scores, topic_nums = topic_model.search_topics(keywords=keywords, num_topics=num_topics)

filtered

_search_topic_words = filter_stopwords_from_topics(topic_words, stopwords)

for i, words in enumerate(filtered_search_topic_words):

generate_wordcloud(words, topic_nums[i])

for i in range(reduced_num_topics):

topic_words = topic_model.topic_words_reduced[i]

filtered_words = [word for word in topic_words if word.lower() not in stopwords]

print(f"Reduced Topic {i}: {', '.join(filtered_words)}")

generate_wordcloud(filtered_words, i)

减少主题数量

reduced_num_topics = 5

topic_mapping = topic_model.hierarchical_topic_reduction(num_topics=reduced_num_topics)

# Print reduced topics and generate word clouds

for i in range(reduced_num_topics):

topic_words = topic_model.topic_words_reduced[i]

filtered_words = [word for word in topic_words if word.lower() not in stopwords]

print(f"Reduced Topic {i}: {', '.join(filtered_words)}")

generate_wordcloud(filtered_words, i)

版本聲明

本文轉載於:https://dev.to/roomals/topic-modeling-with-top2vec-dreyfus-ai-and-wordclouds-1ggl?1如有侵犯,請聯絡[email protected]刪除

最新教學

更多>

-

如何配置Pytesseract以使用數字輸出的單位數字識別?Pytesseract OCR具有單位數字識別和僅數字約束 在pytesseract的上下文中,在配置tesseract以識別單位數字和限制單個數字和限制輸出對數字可能會提出質疑。 To address this issue, we delve into the specifics of Te...程式設計 發佈於2025-04-01

如何配置Pytesseract以使用數字輸出的單位數字識別?Pytesseract OCR具有單位數字識別和僅數字約束 在pytesseract的上下文中,在配置tesseract以識別單位數字和限制單個數字和限制輸出對數字可能會提出質疑。 To address this issue, we delve into the specifics of Te...程式設計 發佈於2025-04-01 -

如何使用Java.net.urlConnection和Multipart/form-data編碼使用其他參數上傳文件?使用http request 上傳文件上傳到http server,同時也提交其他參數,java.net.net.urlconnection and Multipart/form-data Encoding是普遍的。 Here's a breakdown of the process:Mu...程式設計 發佈於2025-04-01

如何使用Java.net.urlConnection和Multipart/form-data編碼使用其他參數上傳文件?使用http request 上傳文件上傳到http server,同時也提交其他參數,java.net.net.urlconnection and Multipart/form-data Encoding是普遍的。 Here's a breakdown of the process:Mu...程式設計 發佈於2025-04-01 -

如何干淨地刪除匿名JavaScript事件處理程序?刪除匿名事件偵聽器將匿名事件偵聽器添加到元素中會提供靈活性和簡單性,但是當要刪除它們時,可以構成挑戰,而無需替換元素本身就可以替換一個問題。 element? element.addeventlistener(event,function(){/在這里工作/},false); 要解決此問題,請考...程式設計 發佈於2025-04-01

如何干淨地刪除匿名JavaScript事件處理程序?刪除匿名事件偵聽器將匿名事件偵聽器添加到元素中會提供靈活性和簡單性,但是當要刪除它們時,可以構成挑戰,而無需替換元素本身就可以替換一個問題。 element? element.addeventlistener(event,function(){/在這里工作/},false); 要解決此問題,請考...程式設計 發佈於2025-04-01 -

如何檢查對像是否具有Python中的特定屬性?方法來確定對象屬性存在尋求一種方法來驗證對像中特定屬性的存在。考慮以下示例,其中嘗試訪問不確定屬性會引起錯誤: >>> a = someClass() >>> A.property Trackback(最近的最新電話): 文件“ ”,第1行, attributeError:SomeClass實...程式設計 發佈於2025-04-01

如何檢查對像是否具有Python中的特定屬性?方法來確定對象屬性存在尋求一種方法來驗證對像中特定屬性的存在。考慮以下示例,其中嘗試訪問不確定屬性會引起錯誤: >>> a = someClass() >>> A.property Trackback(最近的最新電話): 文件“ ”,第1行, attributeError:SomeClass實...程式設計 發佈於2025-04-01 -

如何從Google API中檢索最新的jQuery庫?從Google APIS 問題中提供的jQuery URL是版本1.2.6。對於檢索最新版本,以前有一種使用特定版本編號的替代方法,它是使用以下語法:獲取最新版本:未壓縮)While these legacy URLs still remain in use, it is recommended ...程式設計 發佈於2025-04-01

如何從Google API中檢索最新的jQuery庫?從Google APIS 問題中提供的jQuery URL是版本1.2.6。對於檢索最新版本,以前有一種使用特定版本編號的替代方法,它是使用以下語法:獲取最新版本:未壓縮)While these legacy URLs still remain in use, it is recommended ...程式設計 發佈於2025-04-01 -

如何在JavaScript對像中動態設置鍵?在嘗試為JavaScript對象創建動態鍵時,如何使用此Syntax jsObj['key' i] = 'example' 1;不工作。正確的方法採用方括號: jsobj ['key''i] ='example'1; 在JavaScript中,數組是一...程式設計 發佈於2025-04-01

如何在JavaScript對像中動態設置鍵?在嘗試為JavaScript對象創建動態鍵時,如何使用此Syntax jsObj['key' i] = 'example' 1;不工作。正確的方法採用方括號: jsobj ['key''i] ='example'1; 在JavaScript中,數組是一...程式設計 發佈於2025-04-01 -

為什麼PYTZ最初顯示出意外的時區偏移?與pytz 最初從pytz獲得特定的偏移。例如,亞洲/hong_kong最初顯示一個七個小時37分鐘的偏移: 差異源利用本地化將時區分配給日期,使用了適當的時區名稱和偏移量。但是,直接使用DateTime構造器分配時區不允許進行正確的調整。 example pytz.timezone(&#...程式設計 發佈於2025-04-01

為什麼PYTZ最初顯示出意外的時區偏移?與pytz 最初從pytz獲得特定的偏移。例如,亞洲/hong_kong最初顯示一個七個小時37分鐘的偏移: 差異源利用本地化將時區分配給日期,使用了適當的時區名稱和偏移量。但是,直接使用DateTime構造器分配時區不允許進行正確的調整。 example pytz.timezone(&#...程式設計 發佈於2025-04-01 -

如何正確使用與PDO參數的查詢一樣?在pdo 中使用類似QUERIES在PDO中的Queries時,您可能會遇到類似疑問中描述的問題:此查詢也可能不會返回結果,即使$ var1和$ var2包含有效的搜索詞。錯誤在於不正確包含%符號。 通過將變量包含在$ params數組中的%符號中,您確保將%字符正確替換到查詢中。沒有此修改,PD...程式設計 發佈於2025-04-01

如何正確使用與PDO參數的查詢一樣?在pdo 中使用類似QUERIES在PDO中的Queries時,您可能會遇到類似疑問中描述的問題:此查詢也可能不會返回結果,即使$ var1和$ var2包含有效的搜索詞。錯誤在於不正確包含%符號。 通過將變量包含在$ params數組中的%符號中,您確保將%字符正確替換到查詢中。沒有此修改,PD...程式設計 發佈於2025-04-01 -

如何從PHP中的Unicode字符串中有效地產生對URL友好的sl。為有效的slug生成首先,該函數用指定的分隔符替換所有非字母或數字字符。此步驟可確保slug遵守URL慣例。隨後,它採用ICONV函數將文本簡化為us-ascii兼容格式,從而允許更廣泛的字符集合兼容性。 接下來,該函數使用正則表達式刪除了不需要的字符,例如特殊字符和空格。此步驟可確保slug僅包...程式設計 發佈於2025-04-01

如何從PHP中的Unicode字符串中有效地產生對URL友好的sl。為有效的slug生成首先,該函數用指定的分隔符替換所有非字母或數字字符。此步驟可確保slug遵守URL慣例。隨後,它採用ICONV函數將文本簡化為us-ascii兼容格式,從而允許更廣泛的字符集合兼容性。 接下來,該函數使用正則表達式刪除了不需要的字符,例如特殊字符和空格。此步驟可確保slug僅包...程式設計 發佈於2025-04-01 -

eval()vs. ast.literal_eval():對於用戶輸入,哪個Python函數更安全?稱量()和ast.literal_eval()中的Python Security 在使用用戶輸入時,必須優先確保安全性。強大的python功能eval()通常是作為潛在解決方案而出現的,但擔心其潛在風險。 This article delves into the differences betwee...程式設計 發佈於2025-04-01

eval()vs. ast.literal_eval():對於用戶輸入,哪個Python函數更安全?稱量()和ast.literal_eval()中的Python Security 在使用用戶輸入時,必須優先確保安全性。強大的python功能eval()通常是作為潛在解決方案而出現的,但擔心其潛在風險。 This article delves into the differences betwee...程式設計 發佈於2025-04-01 -

如何使用Regex在PHP中有效地提取括號內的文本php:在括號內提取文本在處理括號內的文本時,找到最有效的解決方案是必不可少的。一種方法是利用PHP的字符串操作函數,如下所示: 作為替代 $ text ='忽略除此之外的一切(text)'; preg_match('#((。 &&& [Regex使用模式來搜索特...程式設計 發佈於2025-04-01

如何使用Regex在PHP中有效地提取括號內的文本php:在括號內提取文本在處理括號內的文本時,找到最有效的解決方案是必不可少的。一種方法是利用PHP的字符串操作函數,如下所示: 作為替代 $ text ='忽略除此之外的一切(text)'; preg_match('#((。 &&& [Regex使用模式來搜索特...程式設計 發佈於2025-04-01 -

如何在php中使用捲髮發送原始帖子請求?如何使用php 創建請求來發送原始帖子請求,開始使用curl_init()開始初始化curl session。然後,配置以下選項: curlopt_url:請求 [要發送的原始數據指定內容類型,為原始的帖子請求指定身體的內容類型很重要。在這種情況下,它是文本/平原。要執行此操作,請使用包含以下標頭...程式設計 發佈於2025-04-01

如何在php中使用捲髮發送原始帖子請求?如何使用php 創建請求來發送原始帖子請求,開始使用curl_init()開始初始化curl session。然後,配置以下選項: curlopt_url:請求 [要發送的原始數據指定內容類型,為原始的帖子請求指定身體的內容類型很重要。在這種情況下,它是文本/平原。要執行此操作,請使用包含以下標頭...程式設計 發佈於2025-04-01 -

如何在GO編譯器中自定義編譯優化?在GO編譯器中自定義編譯優化 GO中的默認編譯過程遵循特定的優化策略。 However, users may need to adjust these optimizations for specific requirements.Optimization Control in Go Compi...程式設計 發佈於2025-04-01

如何在GO編譯器中自定義編譯優化?在GO編譯器中自定義編譯優化 GO中的默認編譯過程遵循特定的優化策略。 However, users may need to adjust these optimizations for specific requirements.Optimization Control in Go Compi...程式設計 發佈於2025-04-01 -

如何將來自三個MySQL表的數據組合到新表中?mysql:從三個表和列的新表創建新表 答案:為了實現這一目標,您可以利用一個3-way Join。 選擇p。 *,d.content作為年齡 來自人為p的人 加入d.person_id = p.id上的d的詳細信息 加入T.Id = d.detail_id的分類法 其中t.taxonomy ...程式設計 發佈於2025-04-01

如何將來自三個MySQL表的數據組合到新表中?mysql:從三個表和列的新表創建新表 答案:為了實現這一目標,您可以利用一個3-way Join。 選擇p。 *,d.content作為年齡 來自人為p的人 加入d.person_id = p.id上的d的詳細信息 加入T.Id = d.detail_id的分類法 其中t.taxonomy ...程式設計 發佈於2025-04-01 -

為什麼Microsoft Visual C ++無法正確實現兩台模板的實例?在Microsoft Visual C 中,Microsoft consions用戶strate strate strate strate strate strate strate strate strate strate strate strate strate strate strate st...程式設計 發佈於2025-04-01

為什麼Microsoft Visual C ++無法正確實現兩台模板的實例?在Microsoft Visual C 中,Microsoft consions用戶strate strate strate strate strate strate strate strate strate strate strate strate strate strate strate st...程式設計 發佈於2025-04-01

學習中文

- 1 走路用中文怎麼說? 走路中文發音,走路中文學習

- 2 坐飛機用中文怎麼說? 坐飞机中文發音,坐飞机中文學習

- 3 坐火車用中文怎麼說? 坐火车中文發音,坐火车中文學習

- 4 坐車用中文怎麼說? 坐车中文發音,坐车中文學習

- 5 開車用中文怎麼說? 开车中文發音,开车中文學習

- 6 游泳用中文怎麼說? 游泳中文發音,游泳中文學習

- 7 騎自行車用中文怎麼說? 骑自行车中文發音,骑自行车中文學習

- 8 你好用中文怎麼說? 你好中文發音,你好中文學習

- 9 謝謝用中文怎麼說? 谢谢中文發音,谢谢中文學習

- 10 How to say goodbye in Chinese? 再见Chinese pronunciation, 再见Chinese learning