Key MySQL Schema Checks to Improve Database Performance

A database schema defines the logical structure of your database, including tables, columns, relationships, indexes, and constraints that shape how data is organized and accessed. It’s not just about how the data is stored but also how it interacts with queries, transactions, and other operations.

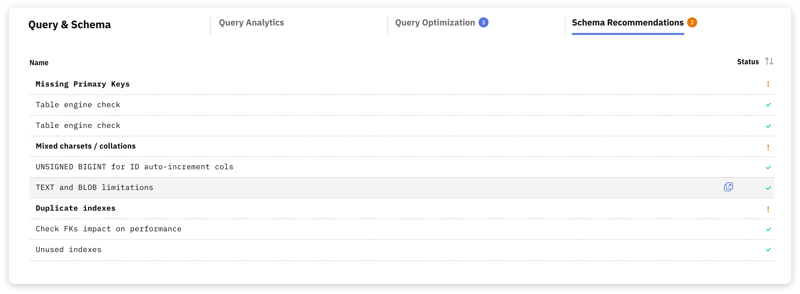

These checks can help you stay on top of new or lingering problems before they snowball into bigger issues. The sections below explain each schema check and exactly how to fix the issue if your database doesn't pass. Before you make any schema changes, always back up your data to protect against problems that might occur during the modification.

1. Primary Key Check (Missing Primary Keys)

The primary key is a critical part of any table: it uniquely identifies each row and enables efficient queries. Without a primary key, tables can suffer performance problems, and replication and some schema change utilities may not work properly.

There are several issues you can avoid by defining a primary key when designing schemas:

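- If a table has no primary key (and no unique key on NOT NULL columns that can take its place), InnoDB generates a hidden clustered index on an internal 6-byte row ID, which you cannot use in your queries.

- The lack of a primary key can slow down replication, especially with row-based or mixed replication, because the replica may have to scan the whole table to locate each changed row.

- Primary keys make data archiving and purging scalable, since jobs can work through the table in small, indexed chunks. Tools like pt-online-schema-change require a primary or unique key.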

- Primary keys uniquely identify rows, which is crucial from an application perspective.
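To run this check across a whole server, you can use a query along the following lines. It lists every base table that has no PRIMARY KEY constraint. This is a sketch that assumes MySQL 5.7 or 8.0 and skips the built-in system schemas, so adjust the exclusion list for your setup.

```sql
-- Sketch: base tables without a PRIMARY KEY constraint (MySQL 5.7/8.0).
SELECT t.TABLE_SCHEMA, t.TABLE_NAME, t.ENGINE
FROM information_schema.TABLES AS t
LEFT JOIN information_schema.TABLE_CONSTRAINTS AS c
       ON c.TABLE_SCHEMA = t.TABLE_SCHEMA
      AND c.TABLE_NAME = t.TABLE_NAME
      AND c.CONSTRAINT_TYPE = 'PRIMARY KEY'
WHERE t.TABLE_TYPE = 'BASE TABLE'
  -- Skip MySQL's own system schemas.
  AND t.TABLE_SCHEMA NOT IN ('mysql', 'information_schema', 'performance_schema', 'sys')
  -- The LEFT JOIN found no PRIMARY KEY row for this table.
  AND c.CONSTRAINT_NAME IS NULL
ORDER BY t.TABLE_SCHEMA, t.TABLE_NAME;
```

Tables that have only a UNIQUE key still appear in this list. Whether that is acceptable depends on the tools you run against them.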

Example

To create a PRIMARY KEY constraint on the "ID" column when the table is already created, use the following SQL:

ALTER TABLE Persons ADD PRIMARY KEY (ID);

To define a primary key on multiple columns:

ALTER TABLE Persons ADD CONSTRAINT PK_Person PRIMARY KEY (ID, LastName);

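Note: Primary key columns must be NOT NULL. If the column(s) were not declared NOT NULL when the table was created, MySQL makes them NOT NULL implicitly when you add the key. Under the default strict SQL mode, the ALTER TABLE therefore fails if any existing row contains a NULL or a duplicate value in those columns.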
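Given that requirement, it can help to check the candidate columns before running the ALTER TABLE. The sketch below reuses the Persons table and the (ID, LastName) key from the example above. The first query should return 0 and the second should return no rows before you add the key.

```sql
-- Sketch: pre-flight checks for adding PRIMARY KEY (ID, LastName) to Persons.
-- 1) Rows that would violate NOT NULL; expect 0.
SELECT COUNT(*) AS null_rows
FROM Persons
WHERE ID IS NULL OR LastName IS NULL;

-- 2) Key values that appear more than once; expect an empty result.
SELECT ID, LastName, COUNT(*) AS copies
FROM Persons
GROUP BY ID, LastName
HAVING COUNT(*) > 1;
```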

2. Table Engine Check (Deprecated Table Engine)

MyISAM is a legacy storage engine, and tables still using it should be migrated to InnoDB. InnoDB has been the default engine since MySQL 5.5 and is recommended for most use cases because of its concurrency, crash recovery and transaction support. Migrating from MyISAM to InnoDB can noticeably improve performance in write-heavy applications and provide better fault tolerance. It also unlocks features MyISAM lacks, such as transactions and foreign keys, while still supporting full-text search (InnoDB has supported FULLTEXT indexes since MySQL 5.6).

Why InnoDB is preferred:

- Crash recovery capabilities allow it to recover automatically from database server or host crashes without data corruption.

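- Uses row-level locking: a write locks only the rows it touches instead of the whole table (as MyISAM does). This performs much better in high-concurrency environments.

- Caches both data and indexes in memory (the buffer pool), whereas MyISAM's key cache holds only indexes. This benefits read-heavy workloads.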

- Fully ACID-compliant, ensuring data integrity and supporting transactions.

- The InnoDB engine receives the majority of the focus from the MySQL development community, making it the most up-to-date and well-supported engine.

How to Migrate to InnoDB

ALTER TABLE <table_name> ENGINE=InnoDB;
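To find which tables still need this migration, a query like the following sketch lists user tables on MyISAM. It also builds one ALTER TABLE statement per table for you to review. It assumes MySQL 5.7 or 8.0 and deliberately excludes the system schemas, whose engines MySQL manages itself.

```sql
-- Sketch: list user tables still on MyISAM and generate one migration
-- statement per table. Review the output before running any of it.
SELECT TABLE_SCHEMA,
       TABLE_NAME,
       CONCAT('ALTER TABLE `', TABLE_SCHEMA, '`.`', TABLE_NAME, '` ENGINE=InnoDB;') AS migrate_sql
FROM information_schema.TABLES
WHERE ENGINE = 'MyISAM'
  AND TABLE_TYPE = 'BASE TABLE'
  AND TABLE_SCHEMA NOT IN ('mysql', 'information_schema', 'performance_schema', 'sys')
ORDER BY TABLE_SCHEMA, TABLE_NAME;
```

Each generated statement rebuilds the whole table. On large or busy tables, schedule it carefully or use an online schema change tool such as pt-online-schema-change. This sketch does not escape table names that themselves contain backticks.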

3. Table Collation Check (Mixed Collations)

Using different collations across tables or even within a table can lead to performance problems, particularly during string comparisons and joins. If the collations of two string columns differ, MySQL might need to convert the strings at runtime, which can prevent indexes from being used and slow down your queries.

Before you change tables with mixed collations, keep a few points in mind:

- Collations can differ at the column level, so mismatches at the table level won’t cause issues if the relevant columns in a join have matching collations.

- Changing a table's collation, especially with a charset switch, isn't always simple. Data conversion might be needed, and unsupported characters could turn into corrupted data.

- If you don’t specify a collation or charset when creating a table, it inherits the database defaults. If none are set at the database level, server defaults will apply. To avoid these issues, it’s important to standardize the collation across your entire dataset, especially for columns that are frequently used in join operations.
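Because collations can differ per column, a table-level report can hide mismatches. A query like the following sketch lists character columns whose collation differs from their table's default, which is a quick way to find join columns worth checking. It assumes MySQL 5.7 or 8.0 and excludes the system schemas.

```sql
-- Sketch: columns whose collation differs from their table's default collation.
SELECT c.TABLE_SCHEMA,
       c.TABLE_NAME,
       c.COLUMN_NAME,
       c.COLLATION_NAME  AS column_collation,
       t.TABLE_COLLATION AS table_collation
FROM information_schema.COLUMNS AS c
JOIN information_schema.TABLES AS t
  ON t.TABLE_SCHEMA = c.TABLE_SCHEMA
 AND t.TABLE_NAME = c.TABLE_NAME
WHERE t.TABLE_TYPE = 'BASE TABLE'
  -- Only character columns have a collation.
  AND c.COLLATION_NAME IS NOT NULL
  AND c.COLLATION_NAME <> t.TABLE_COLLATION
  AND c.TABLE_SCHEMA NOT IN ('mysql', 'information_schema', 'performance_schema', 'sys')
ORDER BY c.TABLE_SCHEMA, c.TABLE_NAME, c.COLUMN_NAME;
```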

How to Change Collation Settings

Before making any changes to your database's collation settings, test your approach in a non-production environment to avoid unintended consequences. If you're unsure about anything, it’s best to consult with a DBA.

Retrieve the default charset and collation for all databases:

SELECT SCHEMA_NAME, DEFAULT_CHARACTER_SET_NAME, DEFAULT_COLLATION_NAME FROM INFORMATION_SCHEMA.SCHEMATA;

Check the collation of specific tables:

SELECT TABLE_SCHEMA, TABLE_NAME, TABLE_COLLATION FROM information_schema.TABLES WHERE TABLE_COLLATION IS NOT NULL ORDER BY TABLE_SCHEMA, TABLE_COLLATION;

Find the server's default charset:

SELECT @@GLOBAL.character_set_server;

Find the server's default collation:

SELECT @@GLOBAL.collation_server;

Update the default collation for a specific database:

ALTER DATABASE <database_name> COLLATE = <collation_name>;

Update the default collation for a specific table:

ALTER TABLE <table_name> COLLATE = <collation_name>;
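Both statements above change only defaults. ALTER DATABASE affects tables created afterwards, and ALTER TABLE ... COLLATE affects columns added afterwards, while existing columns keep their collation. To convert existing character columns as well, MySQL provides ALTER TABLE ... CONVERT TO CHARACTER SET. The sketch below assumes a hypothetical table named orders and MySQL 8.0, where utf8mb4_0900_ai_ci is available (on MySQL 5.7, utf8mb4_unicode_ci is a common alternative).

```sql
-- Sketch: convert every character column of the hypothetical table `orders`
-- and set its default charset/collation in a single table rebuild.
ALTER TABLE orders
  CONVERT TO CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci;

-- Check the result column by column.
SELECT COLUMN_NAME, CHARACTER_SET_NAME, COLLATION_NAME
FROM information_schema.COLUMNS
WHERE TABLE_SCHEMA = DATABASE()
  AND TABLE_NAME = 'orders'
  AND CHARACTER_SET_NAME IS NOT NULL;
```

CONVERT TO rewrites the stored data. A column may also be promoted to a larger type (for example TEXT to MEDIUMTEXT) so it can still hold the same number of characters, so compare the table definition before and after.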

4. Table Character Set Check (Mixed Character Set)

Mixed character sets are similar to mixed collations in that they can lead to performance and compatibility issues. A mixed character set occurs when different columns or tables use different encoding formats for storing data.

- Mixed character sets can hurt join performance on string columns by preventing index use or requiring value conversions.

- Character sets can be defined at the column level, and as long as the columns involved in a join have matching character sets, performance won’t be impacted by mismatches at the table level.

- Changing a table’s character set may involve data conversion, which can lead to corrupted data if unsupported characters are encountered.

- If no character set or collation is specified, tables inherit the database's defaults, and databases inherit the server's default charset and collation.
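A quick way to spot mixing is to count the distinct character sets used by columns in each schema. The following sketch assumes MySQL 5.7 or 8.0 and reports only schemas that use more than one character set. Note that information_schema.COLUMNS also includes view columns.

```sql
-- Sketch: schemas whose character columns use more than one character set.
SELECT TABLE_SCHEMA,
       COUNT(DISTINCT CHARACTER_SET_NAME) AS charset_count,
       GROUP_CONCAT(DISTINCT CHARACTER_SET_NAME ORDER BY CHARACTER_SET_NAME) AS charsets
FROM information_schema.COLUMNS
WHERE CHARACTER_SET_NAME IS NOT NULL
  AND TABLE_SCHEMA NOT IN ('mysql', 'information_schema', 'performance_schema', 'sys')
GROUP BY TABLE_SCHEMA
HAVING COUNT(DISTINCT CHARACTER_SET_NAME) > 1
ORDER BY TABLE_SCHEMA;
```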

How to Change Character Set Settings

Before adjusting your database's character settings, be sure to test the changes in a staging environment to prevent any unexpected issues. If you're uncertain about any steps, consult a DBA for guidance.

Retrieve the default charset and collation for all databases:

SELECT SCHEMA_NAME, DEFAULT_CHARACTER_SET_NAME, DEFAULT_COLLATION_NAME FROM INFORMATION_SCHEMA.SCHEMATA;

Get the character set of each column:

SELECT TABLE_SCHEMA, TABLE_NAME, COLUMN_NAME, CHARACTER_SET_NAME FROM information_schema.COLUMNS WHERE CHARACTER_SET_NAME IS NOT NULL ORDER BY TABLE_SCHEMA, CHARACTER_SET_NAME;

Find the server's default charset:

SELECT @@GLOBAL.character_set_server;

Find the server's default collation:

SELECT @@GLOBAL.collation_server;

To view the structure of a table:

SHOW CREATE TABLE <table_name>;
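The SHOW CREATE TABLE output lists the table's default character set and collation plus any column-level overrides. To fix a single column, for example one used in joins, you can restate it with ALTER TABLE ... MODIFY. The table customers, the column email and its VARCHAR(255) NOT NULL definition below are hypothetical. MODIFY replaces the whole column definition, so copy the real type, NULL-ability and default from SHOW CREATE TABLE.

```sql
-- Sketch: change one column's character set and collation (MySQL 8.0 collation).
-- `customers`/`email` are hypothetical; restate the column's real definition.
ALTER TABLE customers
  MODIFY email VARCHAR(255)
    CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NOT NULL;
```

Apply the same character set and collation to the matching column on the other side of the join so both sides compare without conversion.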
