How to: Containerize a Django and Postgres App with Docker

This article was originally published on the Shipyard Blog.

As you’re building out your Django and PostgreSQL app, you’re probably thinking about a few boxes you’d like it to check:

- Portable: can I distribute this between machines or team members?

- Scalable: is this app able to handle an increase in number of users, requests, or general workload?

- Cloud native: can I host this app in development, ephemeral, staging, and/or production cloud environments?

Using Docker and Docker Compose can help get your app ready for every stage of the development life cycle, from local to production. In this post, we’ll be covering some of the basics for customizing a Dockerfile and Compose file for a Django app with a Postgres database.

TLDR: Shipyard maintains a Django / Postgres starter application, set up to build and run with Docker and Docker Compose. Fork it here. You can use it as a project template or as a reference for your existing app.

What is Django?

Django is an open source Python-based web framework. It’s primarily used as a backend for web apps. Django follows the “batteries included” philosophy: it comes complete with routing support, a login framework, a development web server, database tools, and much more. Django is often compared to Flask, which is more minimal and leaves more of those choices to the developer.

You’re using Django apps every day. Spotify, Doordash, Instagram, Eventbrite, and Pinterest all have Django in their stacks — which speaks volumes to how extensible and scalable it can be.

Benefits of Dockerizing a Django App

Running a Django app with Docker containers unlocks several new use cases. Right off the bat, it’s an improvement to your local development workflows — making setup cleaner and more straightforward.

If you want to cloud-host your project, you’ll typically need it containerized. The great thing about Docker containers is that they can be used throughout every stage of development, from local to production. You can also distribute your Docker image so others can run it instantly without any installation or building.

At the very least, if you’re including a Dockerfile with your project, you can ensure it builds and runs identically every single time, on every system.

Choosing a Python package manager

Our Python app needs a package manager to track, version, and install its dependencies. This lets us install and update dependencies together instead of one at a time, and keeps package versions consistent across machines.

Both Pip and Poetry are popular dependency managers for Python, although there are quite a few others circulating around (e.g. uv, Conda, Rye).

Pip is incredibly straightforward. Users can list their packages in a requirements.txt file with their respective versions, and run pip install to set them up. Users can capture existing dependencies and their versions by running pip freeze > requirements.txt in a project’s root.
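To make the requirements.txt format concrete, here is a minimal sketch that reads exact `name==version` pins out of such a file. The helper name `parse_pins` and the sample packages and versions are made up for illustration; real requirements files can also contain version ranges, extras, and URLs, which this sketch simply skips.

```python
def parse_pins(requirements_text):
    """Map package names to exact versions from 'name==version' lines."""
    pins = {}
    for raw_line in requirements_text.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line or "==" not in line:
            continue  # skip blanks and anything that is not an exact pin
        name, version = line.split("==", 1)
        pins[name.strip().lower()] = version.strip()
    return pins


# Hypothetical file contents, similar to what `pip freeze` would write.
example = """\
# requirements.txt
Django==4.2.16
psycopg2==2.9.9  # Postgres driver
"""
print(parse_pins(example))
```

Because every line pins one exact version, installing from the file on another machine should resolve to the same packages.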

Poetry is a highly capable package manager for apps of any scale, but it’s slightly less straightforward to configure than Pip (it uses a TOML file with tables, metadata, and scripts). Poetry also uses a lockfile (poetry.lock) to “lock” dependencies, and their own dependencies, at their current versions. This way, if your project works at a certain point in time on a particular machine, this state will be preserved. Running poetry init prompts users with a series of options to generate a pyproject.toml file.

Writing a Dockerfile for a Django app

To Dockerize your Django app, you'll follow the classic Dockerfile structure (set the base image, set the working directory, etc.) and then modify it with the project-specific installation instructions, likely found in the README.

Selecting a base image

We can choose a lightweight Python image to act as our base for this Dockerfile. To browse versions by tag, check out the Python page on Docker Hub. I chose Alpine here since it’ll keep our image small:

FROM python:3.8.8-alpine3.13

Setting a working directory

Here, we will define the working directory within the Docker container. All paths mentioned after will be relative to this.

WORKDIR /srv

Installing system dependencies

There are a few libraries we need to add before we can get Poetry configured:

RUN apk add --update --no-cache \
    gcc \
    libc-dev \
    libffi-dev \
    openssl-dev \
    bash \
    git \
    libtool \
    m4 \
    g++ \
    autoconf \
    automake \
    build-base \
    postgresql-dev

Installing Poetry

Next, we’ll ensure we’re using the latest version of Pip, and then use that to install Poetry inside our container:

RUN pip install --upgrade pip
RUN pip install poetry

Installing our project’s dependencies

This app’s dependencies are defined in our pyproject.toml and poetry.lock files. Let’s bring them over to the container’s working directory, and then install from their declarations:

ADD pyproject.toml poetry.lock ./
RUN poetry install

Adding and installing the project itself

Now, we’ll copy over the rest of the project, and install the Django project itself within the container:

ADD src ./src
RUN poetry install

Executing the start command

Finally, we’ll run our project’s start command. In this particular app, it’ll be the command that uses Poetry to start the Django development server:

CMD ["poetry", "run", "python", "src/manage.py", "runserver", "0:8080"]

The complete Dockerfile

When we combine the snippets from above, we’ll get this Dockerfile:

FROM python:3.8.8-alpine3.13
WORKDIR /srv
RUN apk add --update --no-cache \
    gcc \
    libc-dev \
    libffi-dev \
    openssl-dev \
    bash \
    git \
    libtool \
    m4 \
    g++ \
    autoconf \
    automake \
    build-base \
    postgresql-dev
RUN pip install --upgrade pip
RUN pip install poetry
ADD pyproject.toml poetry.lock ./
RUN poetry install
ADD src ./src
RUN poetry install
CMD ["poetry", "run", "python", "src/manage.py", "runserver", "0:8080"]

Writing a Docker Compose service definition for Django

We’re going to split this app into two services: django and postgres. Our django service will be built from our Dockerfile, containing all of our app’s local files.

Setting the build context

For this app, we want to build the django service from our single Dockerfile and use the entire root directory as our build context path. We can set the build key accordingly:

django:
  build: .

Setting host and container ports

We can map port 8080 on our host machine to 8080 within the container. This will also be the port we use to access our Django app — which will soon be live at http://localhost:8080.

ports:
  - '8080:8080'

Adding a service dependency

Since our Django app is connecting to a database, we want to instruct Compose to spin up our database container (postgres) first. We’ll use the depends_on key so that the postgres service is started before our django service. Note that in this short form, depends_on only controls start order; it doesn’t wait for Postgres to be ready to accept connections:

depends_on:
  - postgres
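Since start order alone doesn't guarantee that the database is reachable, one common workaround is to poll the database port before starting the app. The sketch below is one way this could look using only the standard library; the helper name `wait_for_port` and its defaults are made up for this example, and a successful TCP connection only shows that something is listening, not that Postgres has finished initializing.

```python
import socket
import time


def wait_for_port(host, port, timeout=30.0, interval=1.0):
    """Return True once a TCP connection to host:port succeeds, else False."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # A successful connect means something is listening on the port.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Covers refused connections, timeouts, and DNS failures.
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)


# Example call from inside the django container, where "postgres" is the
# Compose service name and 5432 is the port used in settings.py:
#     wait_for_port("postgres", 5432)
```

Compose can also handle this declaratively: the long form of depends_on accepts `condition: service_healthy` when the postgres service defines a healthcheck.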

Creating a bind mount

Since we’ll be sharing files between our host and this container, we can define a bind mount by using the volumes key. To set the volume, we’ll provide a local path, followed by a colon, followed by a path within the container. The ro flag gives the container read-only permissions for these files:

volumes:
  - './src:/srv/src:ro'

The end result

Combining all the options/configurations from above, our django service should look like this:

django:
  build: .
  ports:
    - '8080:8080'
  depends_on:
    - postgres
  volumes:
    - './src:/srv/src:ro'

Adding a PostgreSQL database

Our Django app is configured to connect to a PostgreSQL database. This is defined in our settings.py:

DATABASES = {
    'default': {
        'ENGINE': 'django.db.backends.postgresql',
        'NAME': 'app',
        'USER': 'obscure-user',
        'PASSWORD': 'obscure-password',
        'HOST': 'postgres',
        'PORT': 5432,
    }
}

Pulling the Postgres image

We can run our database in its own Docker container to isolate it from the base Django app. First, let’s define a postgres service in our Compose file and pull a lightweight, Alpine-based Postgres image from Docker Hub:

postgres:
  image: 'postgres:14.13-alpine3.20'

Passing in env vars

To configure our PostgreSQL database, we can pass in a few environment variables to set credentials and paths. For anything beyond local development, consider using a secrets manager instead of committing credentials to the Compose file.

environment:
  - POSTGRES_DB=app
  - POSTGRES_USER=obscure-user
  - POSTGRES_PASSWORD=obscure-password
  - PGDATA=/var/lib/postgresql/data/pgdata
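On the Django side, the same values could be read from the environment instead of being hard-coded in settings.py. The following is a minimal sketch, not the starter app's actual configuration: it assumes the POSTGRES_* variables are also passed to the django service, and `POSTGRES_HOST`, `POSTGRES_PORT`, and the function name `database_settings` are hypothetical names introduced here.

```python
import os


def database_settings(env=os.environ):
    """Build a Django DATABASES dict from environment variables."""
    return {
        "default": {
            "ENGINE": "django.db.backends.postgresql",
            "NAME": env.get("POSTGRES_DB", "app"),
            "USER": env["POSTGRES_USER"],          # required: KeyError if unset
            "PASSWORD": env["POSTGRES_PASSWORD"],  # required: KeyError if unset
            # Hypothetical variables; defaults match the Compose service above.
            "HOST": env.get("POSTGRES_HOST", "postgres"),
            "PORT": int(env.get("POSTGRES_PORT", "5432")),
        }
    }


# In settings.py this could be used as:
#     DATABASES = database_settings()
```

Leaving the user and password without defaults means a misconfigured container fails at startup rather than quietly connecting with placeholder credentials.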

Setting host and container ports

We can expose our container port by setting it to the default Postgres port: 5432. For this service, we’re only specifying a single port, which means that the host port will be randomized. This avoids collisions if you’re running multiple Postgres instances.

ports:
  - '5432'
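Because the host port is assigned when the container starts, you'll need to look it up before connecting from your host machine. Running `docker compose port postgres 5432` prints the mapping as a `host:port` pair; the sketch below parses such a line. The helper name `parse_port_mapping` and the sample value are made up for illustration.

```python
def parse_port_mapping(line):
    """Split a 'host:port' mapping such as '0.0.0.0:49153' into (host, port)."""
    # rpartition splits on the last colon, so IPv6-style hosts stay intact.
    host, _, port = line.strip().rpartition(":")
    return host, int(port)


# Hypothetical output line; the actual port will differ on each run.
print(parse_port_mapping("0.0.0.0:49153"))
```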

Adding a named data volume

In our postgres definition, we can add a named volume. This is different from the bind mount that we created for the django service. This will persist our data after the Postgres container spins down.

volumes:
  - 'postgres:/var/lib/postgresql/data'

Outside of the service definitions and at the bottom of the Compose file, we’ll declare the named postgres volume again. By doing so, we can reference it from our other services if needed.

volumes:
  postgres:

Putting it all together

And here’s the resulting PostgreSQL definition in our Compose file:

postgres:
  image: 'postgres:14.13-alpine3.20'
  environment:
    - POSTGRES_DB=app
    - POSTGRES_USER=obscure-user
    - POSTGRES_PASSWORD=obscure-password
    - PGDATA=/var/lib/postgresql/data/pgdata
  ports:
    - '5432'
  volumes:
    - 'postgres:/var/lib/postgresql/data'

volumes:
  postgres:

Deploying our app in a Shipyard ephemeral environment

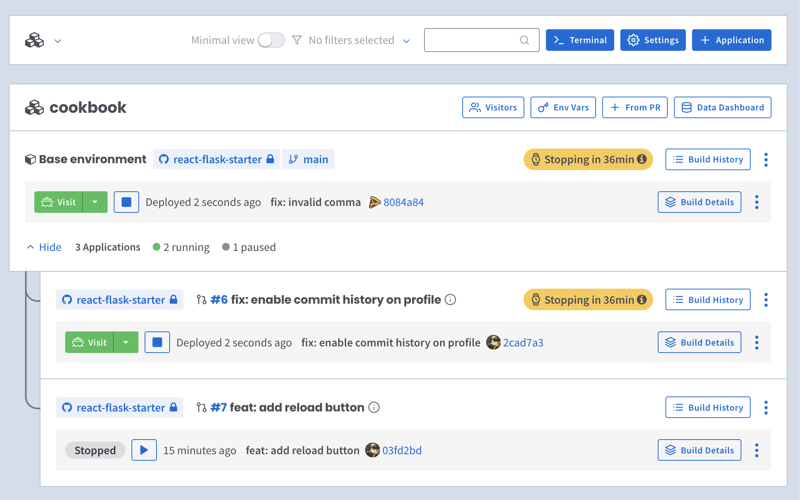

We can get our app production-ready by deploying it in a Shipyard application — this means we’ll get an ephemeral environment for our base branch, as well as environments for every PR we open.

Adding Docker Compose labels

Shipyard transpiles Compose files to Kubernetes manifests, so we’ll add some labels to make it Kubernetes-compatible.

Under our django service, we can add two custom Shipyard labels:

labels:
  shipyard.init: 'poetry run python src/manage.py migrate'
  shipyard.route: '/'

- The shipyard.init label will run a database migration before our django service starts

- The shipyard.route label will send HTTP requests to this service’s port

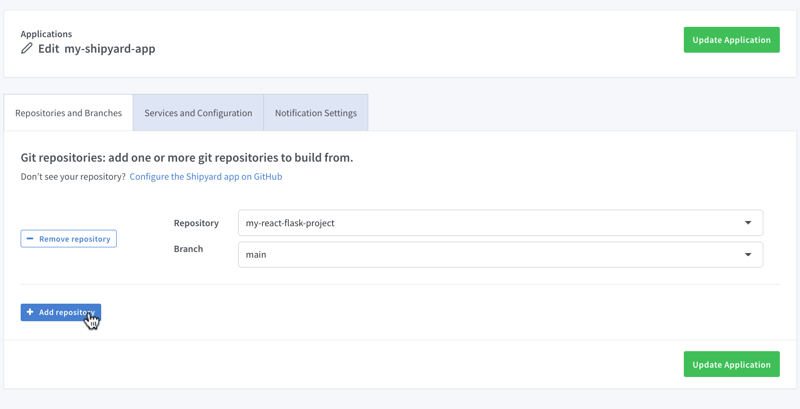

Creating a Shipyard app

Next, you can go to your Shipyard dashboard. If you haven’t already, sign up for a 30-day free trial.

Click the Application button, then select your repo, services, and import your env vars.

Visiting the app

Once it finishes building, you can click the green Visit button to access your short-lived ephemeral environment. What comes next?

- View your app’s build, deploy, and run logs

- Use our GitHub Action or CircleCI orb to integrate with your CI/CD

- Add SSO visitors to your app

- Create a PR to see your code changes in a new environment

And that’s all!

Now you have a fully containerized Django app with a database! You can run it locally with Docker Compose, preview and test it in an ephemeral environment, and iterate until it’s production-ready.

Want to Dockerize a Yarn app next? Check out our guide!
