टॉपसी के साथ विषय मॉडलिंग: ड्रेफस, एआई और वर्डक्लाउड

2024-07-30 को प्रकाशित

पायथन के साथ पीडीएफ से अंतर्दृष्टि निकालना: एक व्यापक मार्गदर्शिका

यह स्क्रिप्ट पीडीएफ को संसाधित करने, टेक्स्ट निकालने, वाक्यों को टोकनाइज़ करने और कुशल और व्यावहारिक विश्लेषण के लिए विज़ुअलाइज़ेशन के साथ विषय मॉडलिंग करने के लिए एक शक्तिशाली वर्कफ़्लो प्रदर्शित करती है।

पुस्तकालय अवलोकन

- os: ऑपरेटिंग सिस्टम के साथ इंटरैक्ट करने के लिए फ़ंक्शन प्रदान करता है।

- matplotlib.pyplot: पायथन में स्थिर, एनिमेटेड और इंटरैक्टिव विज़ुअलाइज़ेशन बनाने के लिए उपयोग किया जाता है।

- nltk: प्राकृतिक भाषा टूलकिट, प्राकृतिक भाषा प्रसंस्करण के लिए पुस्तकालयों और कार्यक्रमों का एक सूट।

- पांडा: डेटा हेरफेर और विश्लेषण लाइब्रेरी।

- pdftotext: पीडीएफ दस्तावेज़ों को सादे पाठ में परिवर्तित करने के लिए लाइब्रेरी।

- re: नियमित अभिव्यक्ति मिलान संचालन प्रदान करता है।

- सीबॉर्न: मैटप्लोटलिब पर आधारित सांख्यिकीय डेटा विज़ुअलाइज़ेशन लाइब्रेरी।

- nltk.tokenize.sent_tokenize: एक स्ट्रिंग को वाक्यों में टोकनाइज़ करने के लिए NLTK फ़ंक्शन।

- top2vec: विषय मॉडलिंग और सिमेंटिक खोज के लिए लाइब्रेरी।

- वर्डक्लाउड: टेक्स्ट डेटा से वर्ड क्लाउड बनाने के लिए लाइब्रेरी।

प्रारंभिक व्यवस्था

मॉड्यूल आयात करें

import os import matplotlib.pyplot as plt import nltk import pandas as pd import pdftotext import re import seaborn as sns from nltk.tokenize import sent_tokenize from top2vec import Top2Vec from wordcloud import WordCloud from cleantext import clean

इसके बाद, सुनिश्चित करें कि पंकट टोकनाइज़र डाउनलोड हो गया है:

nltk.download('punkt')

पाठ सामान्यीकरण

def normalize_text(text):

"""Normalize text by removing special characters and extra spaces,

and applying various other cleaning options."""

# Apply the clean function with specified parameters

cleaned_text = clean(

text,

fix_unicode=True, # fix various unicode errors

to_ascii=True, # transliterate to closest ASCII representation

lower=True, # lowercase text

no_line_breaks=False, # fully strip line breaks as opposed to only normalizing them

no_urls=True, # replace all URLs with a special token

no_emails=True, # replace all email addresses with a special token

no_phone_numbers=True, # replace all phone numbers with a special token

no_numbers=True, # replace all numbers with a special token

no_digits=True, # replace all digits with a special token

no_currency_symbols=True, # replace all currency symbols with a special token

no_punct=False, # remove punctuations

lang="en", # set to 'de' for German special handling

)

# Further clean the text by removing any remaining special characters except word characters, whitespace, and periods/commas

cleaned_text = re.sub(r"[^\w\s.,]", "", cleaned_text)

# Replace multiple whitespace characters with a single space and strip leading/trailing spaces

cleaned_text = re.sub(r"\s ", " ", cleaned_text).strip()

return cleaned_text

पीडीएफ पाठ निष्कर्षण

def extract_text_from_pdf(pdf_path):

with open(pdf_path, "rb") as f:

pdf = pdftotext.PDF(f)

all_text = "\n\n".join(pdf)

return normalize_text(all_text)

वाक्य टोकनीकरण

def split_into_sentences(text):

return sent_tokenize(text)

एकाधिक फ़ाइलें संसाधित करना

def process_files(file_paths):

authors, titles, all_sentences = [], [], []

for file_path in file_paths:

file_name = os.path.basename(file_path)

parts = file_name.split(" - ", 2)

if len(parts) != 3 or not file_name.endswith(".pdf"):

print(f"Skipping file with incorrect format: {file_name}")

continue

year, author, title = parts

author, title = author.strip(), title.replace(".pdf", "").strip()

try:

text = extract_text_from_pdf(file_path)

except Exception as e:

print(f"Error extracting text from {file_name}: {e}")

continue

sentences = split_into_sentences(text)

authors.append(author)

titles.append(title)

all_sentences.extend(sentences)

print(f"Number of sentences for {file_name}: {len(sentences)}")

return authors, titles, all_sentences

डेटा को सीएसवी में सहेजा जा रहा है

def save_data_to_csv(authors, titles, file_paths, output_file):

texts = []

for fp in file_paths:

try:

text = extract_text_from_pdf(fp)

sentences = split_into_sentences(text)

texts.append(" ".join(sentences))

except Exception as e:

print(f"Error processing file {fp}: {e}")

texts.append("")

data = pd.DataFrame({

"Author": authors,

"Title": titles,

"Text": texts

})

data.to_csv(output_file, index=False, quoting=1, encoding='utf-8')

print(f"Data has been written to {output_file}")

स्टॉपवर्ड लोड हो रहा है

def load_stopwords(filepath):

with open(filepath, "r") as f:

stopwords = f.read().splitlines()

additional_stopwords = ["able", "according", "act", "actually", "after", "again", "age", "agree", "al", "all", "already", "also", "am", "among", "an", "and", "another", "any", "appropriate", "are", "argue", "as", "at", "avoid", "based", "basic", "basis", "be", "been", "begin", "best", "book", "both", "build", "but", "by", "call", "can", "cant", "case", "cases", "claim", "claims", "class", "clear", "clearly", "cope", "could", "course", "data", "de", "deal", "dec", "did", "do", "doesnt", "done", "dont", "each", "early", "ed", "either", "end", "etc", "even", "ever", "every", "far", "feel", "few", "field", "find", "first", "follow", "follows", "for", "found", "free", "fri", "fully", "get", "had", "hand", "has", "have", "he", "help", "her", "here", "him", "his", "how", "however", "httpsabout", "ibid", "if", "im", "in", "is", "it", "its", "jstor", "june", "large", "lead", "least", "less", "like", "long", "look", "man", "many", "may", "me", "money", "more", "most", "move", "moves", "my", "neither", "net", "never", "new", "no", "nor", "not", "notes", "notion", "now", "of", "on", "once", "one", "ones", "only", "open", "or", "order", "orgterms", "other", "our", "out", "own", "paper", "past", "place", "plan", "play", "point", "pp", "precisely", "press", "put", "rather", "real", "require", "right", "risk", "role", "said", "same", "says", "search", "second", "see", "seem", "seems", "seen", "sees", "set", "shall", "she", "should", "show", "shows", "since", "so", "step", "strange", "style", "such", "suggests", "talk", "tell", "tells", "term", "terms", "than", "that", "the", "their", "them", "then", "there", "therefore", "these", "they", "this", "those", "three", "thus", "to", "todes", "together", "too", "tradition", "trans", "true", "try", "trying", "turn", "turns", "two", "up", "us", "use", "used", "uses", "using", "very", "view", "vol", "was", "way", "ways", "we", "web", "well", "were", "what", "when", "whether", "which", "who", "why", "with", "within", "works", "would", "years", "york", "you", "your", "suggests", "without"]

stopwords.extend(additional_stopwords)

return set(stopwords)

विषयों से स्टॉपवर्ड फ़िल्टर करना

def filter_stopwords_from_topics(topic_words, stopwords):

filtered_topics = []

for words in topic_words:

filtered_topics.append([word for word in words if word.lower() not in stopwords])

return filtered_topics

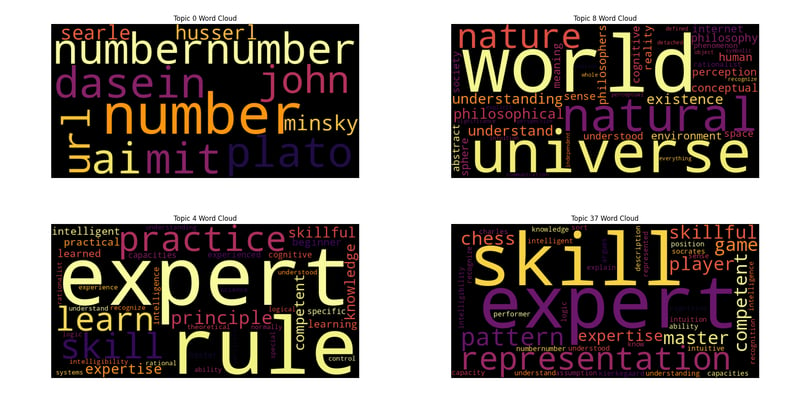

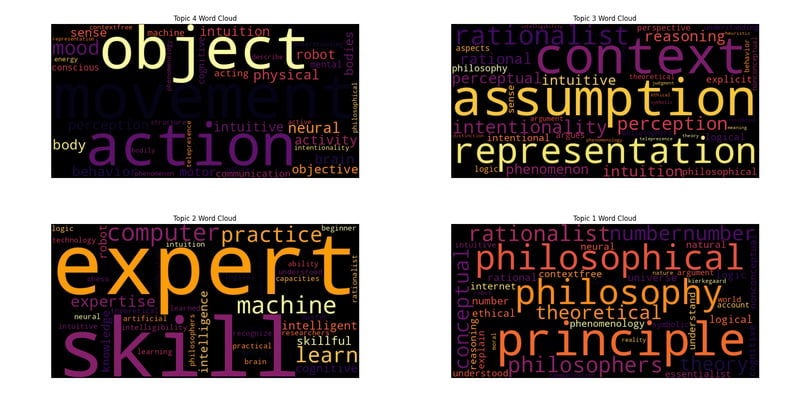

वर्ड क्लाउड जनरेशन

def generate_wordcloud(topic_words, topic_num, palette='inferno'):

colors = sns.color_palette(palette, n_colors=256).as_hex()

def color_func(word, font_size, position, orientation, random_state=None, **kwargs):

return colors[random_state.randint(0, len(colors) - 1)]

wordcloud = WordCloud(width=800, height=400, background_color='black', color_func=color_func).generate(' '.join(topic_words))

plt.figure(figsize=(10, 5))

plt.imshow(wordcloud, interpolation='bilinear')

plt.axis('off')

plt.title(f'Topic {topic_num} Word Cloud')

plt.show()

मुख्य निष्पादन

file_paths = [f"/home/roomal/Desktop/Dreyfus-Project/Dreyfus/{fname}" for fname in os.listdir("/home/roomal/Desktop/Dreyfus-Project/Dreyfus/") if fname.endswith(".pdf")]

authors, titles, all_sentences = process_files(file_paths)

output_file = "/home/roomal/Desktop/Dreyfus-Project/Dreyfus_Papers.csv"

save_data_to_csv(authors, titles, file_paths, output_file)

stopwords_filepath = "/home/roomal/Documents/Lists/stopwords.txt"

stopwords = load_stopwords(stopwords_filepath)

try:

topic_model = Top2Vec(

all_sentences,

embedding_model="distiluse-base-multilingual-cased",

speed="deep-learn",

workers=6

)

print("Top2Vec model created successfully.")

except ValueError as e:

print(f"Error initializing Top2Vec: {e}")

except Exception as e:

print(f"Unexpected error: {e}")

num_topics = topic_model.get_num_topics()

topic_words, word_scores, topic_nums = topic_model.get_topics(num_topics)

filtered_topic_words = filter_stopwords_from_topics(topic_words, stopwords)

for i, words in enumerate(filtered_topic_words):

print(f"Topic {i}: {', '.join(words)}")

keywords = ["heidegger"]

topic_words, word_scores, topic_scores, topic_nums = topic_model.search_topics(keywords=keywords, num_topics=num_topics)

filtered

_search_topic_words = filter_stopwords_from_topics(topic_words, stopwords)

for i, words in enumerate(filtered_search_topic_words):

generate_wordcloud(words, topic_nums[i])

for i in range(reduced_num_topics):

topic_words = topic_model.topic_words_reduced[i]

filtered_words = [word for word in topic_words if word.lower() not in stopwords]

print(f"Reduced Topic {i}: {', '.join(filtered_words)}")

generate_wordcloud(filtered_words, i)

विषयों की संख्या कम करें

reduced_num_topics = 5

topic_mapping = topic_model.hierarchical_topic_reduction(num_topics=reduced_num_topics)

# Print reduced topics and generate word clouds

for i in range(reduced_num_topics):

topic_words = topic_model.topic_words_reduced[i]

filtered_words = [word for word in topic_words if word.lower() not in stopwords]

print(f"Reduced Topic {i}: {', '.join(filtered_words)}")

generate_wordcloud(filtered_words, i)

विज्ञप्ति वक्तव्य

यह आलेख यहां पुन: प्रस्तुत किया गया है: https://dev.to/roomals/topic-modeling-with-top2vec-drefus-ai-and-wordclouds-1ggl?1 यदि कोई उल्लंघन है, तो कृपया हटाने के लिए स्टडी_गोलंग@163.कॉम से संपर्क करें। यह

नवीनतम ट्यूटोरियल

अधिक>

-

MacOS पर Django में \"अनुचित कॉन्फ़िगर: MySQLdb मॉड्यूल लोड करने में त्रुटि\" को कैसे ठीक करें?MySQL अनुचित तरीके से कॉन्फ़िगर किया गया: सापेक्ष पथों के साथ समस्याDjango में Python मैनेज.py runserver चलाते समय, आपको निम्न त्रुटि का सामना करना पड...प्रोग्रामिंग 2024-12-19 को प्रकाशित

MacOS पर Django में \"अनुचित कॉन्फ़िगर: MySQLdb मॉड्यूल लोड करने में त्रुटि\" को कैसे ठीक करें?MySQL अनुचित तरीके से कॉन्फ़िगर किया गया: सापेक्ष पथों के साथ समस्याDjango में Python मैनेज.py runserver चलाते समय, आपको निम्न त्रुटि का सामना करना पड...प्रोग्रामिंग 2024-12-19 को प्रकाशित -

जेएस और मूल बातेंजावास्क्रिप्ट और प्रोग्रामिंग फंडामेंटल के लिए एक शुरुआती मार्गदर्शिका जावास्क्रिप्ट (जेएस) एक शक्तिशाली और बहुमुखी प्रोग्रामिंग भाषा है जिसका उपयोग म...प्रोग्रामिंग 2024-12-19 को प्रकाशित

जेएस और मूल बातेंजावास्क्रिप्ट और प्रोग्रामिंग फंडामेंटल के लिए एक शुरुआती मार्गदर्शिका जावास्क्रिप्ट (जेएस) एक शक्तिशाली और बहुमुखी प्रोग्रामिंग भाषा है जिसका उपयोग म...प्रोग्रामिंग 2024-12-19 को प्रकाशित -

सरणीतरीके एफएनएस हैं जिन्हें ऑब्जेक्ट पर कॉल किया जा सकता है ऐरे ऑब्जेक्ट हैं, इसलिए जेएस में उनके तरीके भी हैं। स्लाइस (शुरू): मूल सरणी को बदले ब...प्रोग्रामिंग 2024-12-19 को प्रकाशित

सरणीतरीके एफएनएस हैं जिन्हें ऑब्जेक्ट पर कॉल किया जा सकता है ऐरे ऑब्जेक्ट हैं, इसलिए जेएस में उनके तरीके भी हैं। स्लाइस (शुरू): मूल सरणी को बदले ब...प्रोग्रामिंग 2024-12-19 को प्रकाशित -

वैध कोड के बावजूद पोस्ट अनुरोध PHP में इनपुट कैप्चर क्यों नहीं कर रहा है?PHP में POST अनुरोध की खराबी को संबोधित करनाप्रस्तुत कोड स्निपेट में:action=''इरादा टेक्स्ट बॉक्स से इनपुट कैप्चर करना और सबमिट बटन पर क्लिक करने पर इ...प्रोग्रामिंग 2024-12-19 को प्रकाशित

वैध कोड के बावजूद पोस्ट अनुरोध PHP में इनपुट कैप्चर क्यों नहीं कर रहा है?PHP में POST अनुरोध की खराबी को संबोधित करनाप्रस्तुत कोड स्निपेट में:action=''इरादा टेक्स्ट बॉक्स से इनपुट कैप्चर करना और सबमिट बटन पर क्लिक करने पर इ...प्रोग्रामिंग 2024-12-19 को प्रकाशित -

मैं जावा स्ट्रिंग में एकाधिक सबस्ट्रिंग को कुशलतापूर्वक कैसे बदल सकता हूं?जावा में एक स्ट्रिंग में एकाधिक सबस्ट्रिंग को कुशलतापूर्वक बदलनाजब एक स्ट्रिंग के भीतर कई सबस्ट्रिंग को बदलने की आवश्यकता का सामना करना पड़ता है, तो य...प्रोग्रामिंग 2024-12-19 को प्रकाशित

मैं जावा स्ट्रिंग में एकाधिक सबस्ट्रिंग को कुशलतापूर्वक कैसे बदल सकता हूं?जावा में एक स्ट्रिंग में एकाधिक सबस्ट्रिंग को कुशलतापूर्वक बदलनाजब एक स्ट्रिंग के भीतर कई सबस्ट्रिंग को बदलने की आवश्यकता का सामना करना पड़ता है, तो य...प्रोग्रामिंग 2024-12-19 को प्रकाशित -

डेटा डालते समय ''सामान्य त्रुटि: 2006 MySQL सर्वर चला गया है'' को कैसे ठीक करें?रिकॉर्ड सम्मिलित करते समय "सामान्य त्रुटि: 2006 MySQL सर्वर चला गया है" को कैसे हल करेंपरिचय:MySQL डेटाबेस में डेटा डालने से कभी-कभी त्रुटि ...प्रोग्रामिंग 2024-12-19 को प्रकाशित

डेटा डालते समय ''सामान्य त्रुटि: 2006 MySQL सर्वर चला गया है'' को कैसे ठीक करें?रिकॉर्ड सम्मिलित करते समय "सामान्य त्रुटि: 2006 MySQL सर्वर चला गया है" को कैसे हल करेंपरिचय:MySQL डेटाबेस में डेटा डालने से कभी-कभी त्रुटि ...प्रोग्रामिंग 2024-12-19 को प्रकाशित -

जावास्क्रिप्ट ऑब्जेक्ट्स में कुंजी को गतिशील रूप से कैसे सेट करें?जावास्क्रिप्ट ऑब्जेक्ट वेरिएबल के लिए डायनामिक कुंजी कैसे बनाएंजावास्क्रिप्ट ऑब्जेक्ट के लिए डायनामिक कुंजी बनाने का प्रयास करते समय, इस सिंटैक्स का उ...प्रोग्रामिंग 2024-12-19 को प्रकाशित

जावास्क्रिप्ट ऑब्जेक्ट्स में कुंजी को गतिशील रूप से कैसे सेट करें?जावास्क्रिप्ट ऑब्जेक्ट वेरिएबल के लिए डायनामिक कुंजी कैसे बनाएंजावास्क्रिप्ट ऑब्जेक्ट के लिए डायनामिक कुंजी बनाने का प्रयास करते समय, इस सिंटैक्स का उ...प्रोग्रामिंग 2024-12-19 को प्रकाशित -

PHP के फ़ंक्शन पुनर्परिभाषा प्रतिबंधों पर कैसे काबू पाएं?PHP की फ़ंक्शन पुनर्परिभाषा सीमाओं पर काबू पानाPHP में, एक ही नाम के साथ एक फ़ंक्शन को कई बार परिभाषित करना एक नो-नो है। ऐसा करने का प्रयास करने पर, ज...प्रोग्रामिंग 2024-12-19 को प्रकाशित

PHP के फ़ंक्शन पुनर्परिभाषा प्रतिबंधों पर कैसे काबू पाएं?PHP की फ़ंक्शन पुनर्परिभाषा सीमाओं पर काबू पानाPHP में, एक ही नाम के साथ एक फ़ंक्शन को कई बार परिभाषित करना एक नो-नो है। ऐसा करने का प्रयास करने पर, ज...प्रोग्रामिंग 2024-12-19 को प्रकाशित -

मैं अद्वितीय आईडी को संरक्षित करते हुए और डुप्लिकेट नामों को संभालते हुए PHP में दो सहयोगी सरणियों को कैसे जोड़ूं?PHP में एसोसिएटिव एरेज़ का संयोजनPHP में, दो एसोसिएटिव एरेज़ को एक ही एरे में संयोजित करना एक सामान्य कार्य है। निम्नलिखित अनुरोध पर विचार करें:समस्या...प्रोग्रामिंग 2024-12-19 को प्रकाशित

मैं अद्वितीय आईडी को संरक्षित करते हुए और डुप्लिकेट नामों को संभालते हुए PHP में दो सहयोगी सरणियों को कैसे जोड़ूं?PHP में एसोसिएटिव एरेज़ का संयोजनPHP में, दो एसोसिएटिव एरेज़ को एक ही एरे में संयोजित करना एक सामान्य कार्य है। निम्नलिखित अनुरोध पर विचार करें:समस्या...प्रोग्रामिंग 2024-12-19 को प्रकाशित -

मैं MySQL का उपयोग करके आज के जन्मदिन वाले उपयोगकर्ताओं को कैसे ढूँढ सकता हूँ?MySQL का उपयोग करके आज के जन्मदिन वाले उपयोगकर्ताओं की पहचान कैसे करेंMySQL का उपयोग करके यह निर्धारित करना कि आज उपयोगकर्ता का जन्मदिन है या नहीं, इस...प्रोग्रामिंग 2024-12-19 को प्रकाशित

मैं MySQL का उपयोग करके आज के जन्मदिन वाले उपयोगकर्ताओं को कैसे ढूँढ सकता हूँ?MySQL का उपयोग करके आज के जन्मदिन वाले उपयोगकर्ताओं की पहचान कैसे करेंMySQL का उपयोग करके यह निर्धारित करना कि आज उपयोगकर्ता का जन्मदिन है या नहीं, इस...प्रोग्रामिंग 2024-12-19 को प्रकाशित -

`if` कथनों से परे: स्पष्ट `bool` रूपांतरण वाले प्रकार को कास्टिंग के बिना और कहाँ उपयोग किया जा सकता है?बूल में प्रासंगिक रूपांतरण बिना कास्ट के स्वीकृतआपकी कक्षा बूल में एक स्पष्ट रूपांतरण को परिभाषित करती है, जिससे आप सीधे सशर्त बयानों में इसके उदाहरण ...प्रोग्रामिंग 2024-12-19 को प्रकाशित

`if` कथनों से परे: स्पष्ट `bool` रूपांतरण वाले प्रकार को कास्टिंग के बिना और कहाँ उपयोग किया जा सकता है?बूल में प्रासंगिक रूपांतरण बिना कास्ट के स्वीकृतआपकी कक्षा बूल में एक स्पष्ट रूपांतरण को परिभाषित करती है, जिससे आप सीधे सशर्त बयानों में इसके उदाहरण ...प्रोग्रामिंग 2024-12-19 को प्रकाशित -

मेरा स्प्रिंग बूट ऐप स्वचालित रूप से डेटाबेस स्कीमा क्यों नहीं बना रहा है?स्प्रिंग बूट में स्वचालित रूप से डेटाबेस स्कीमा बनानास्प्रिंग बूट एप्लिकेशन लॉन्च करते समय, किसी को स्वचालित डेटाबेस स्कीमा निर्माण के साथ समस्याओं का...प्रोग्रामिंग 2024-12-18 को प्रकाशित

मेरा स्प्रिंग बूट ऐप स्वचालित रूप से डेटाबेस स्कीमा क्यों नहीं बना रहा है?स्प्रिंग बूट में स्वचालित रूप से डेटाबेस स्कीमा बनानास्प्रिंग बूट एप्लिकेशन लॉन्च करते समय, किसी को स्वचालित डेटाबेस स्कीमा निर्माण के साथ समस्याओं का...प्रोग्रामिंग 2024-12-18 को प्रकाशित -

क्या CSS3 ट्रांज़िशन प्रारंभ और समाप्ति बिंदुओं का पता लगाने के लिए ईवेंट प्रदान करते हैं?CSS3 ट्रांज़िशन इवेंट को समझनाCSS3 ट्रांज़िशन वेब तत्वों पर सहज एनिमेशन और दृश्य प्रभावों की अनुमति देता है। उपयोगकर्ता अनुभव को बढ़ाने और इन बदलावों ...प्रोग्रामिंग 2024-12-18 को प्रकाशित

क्या CSS3 ट्रांज़िशन प्रारंभ और समाप्ति बिंदुओं का पता लगाने के लिए ईवेंट प्रदान करते हैं?CSS3 ट्रांज़िशन इवेंट को समझनाCSS3 ट्रांज़िशन वेब तत्वों पर सहज एनिमेशन और दृश्य प्रभावों की अनुमति देता है। उपयोगकर्ता अनुभव को बढ़ाने और इन बदलावों ...प्रोग्रामिंग 2024-12-18 को प्रकाशित -

क्या आप जावा में मेमोरी को मैन्युअल रूप से हटा सकते हैं?जावा में मैनुअल मेमोरी डीलोकेशन बनाम कचरा संग्रहसी के विपरीत, जावा एक प्रबंधित मेमोरी फ्रेमवर्क को नियोजित करता है जहां मेमोरी आवंटन और डीलोकेशन को नि...प्रोग्रामिंग 2024-12-18 को प्रकाशित

क्या आप जावा में मेमोरी को मैन्युअल रूप से हटा सकते हैं?जावा में मैनुअल मेमोरी डीलोकेशन बनाम कचरा संग्रहसी के विपरीत, जावा एक प्रबंधित मेमोरी फ्रेमवर्क को नियोजित करता है जहां मेमोरी आवंटन और डीलोकेशन को नि...प्रोग्रामिंग 2024-12-18 को प्रकाशित -

विश्वसनीय रूप से कैसे निर्धारित करें कि कोई फ़ाइल जावा 1.6 में एक प्रतीकात्मक लिंक है?जावा 1.6 में प्रतीकात्मक लिंक का सत्यापनप्रतीकात्मक लिंक की उपस्थिति का निर्धारण विभिन्न फ़ाइल-हैंडलिंग कार्यों के लिए महत्वपूर्ण हो सकता है। जावा में...प्रोग्रामिंग 2024-12-17 को प्रकाशित

विश्वसनीय रूप से कैसे निर्धारित करें कि कोई फ़ाइल जावा 1.6 में एक प्रतीकात्मक लिंक है?जावा 1.6 में प्रतीकात्मक लिंक का सत्यापनप्रतीकात्मक लिंक की उपस्थिति का निर्धारण विभिन्न फ़ाइल-हैंडलिंग कार्यों के लिए महत्वपूर्ण हो सकता है। जावा में...प्रोग्रामिंग 2024-12-17 को प्रकाशित

चीनी भाषा का अध्ययन करें

- 1 आप चीनी भाषा में "चलना" कैसे कहते हैं? #का चीनी उच्चारण, #का चीनी सीखना

- 2 आप चीनी भाषा में "विमान ले लो" कैसे कहते हैं? #का चीनी उच्चारण, #का चीनी सीखना

- 3 आप चीनी भाषा में "ट्रेन ले लो" कैसे कहते हैं? #का चीनी उच्चारण, #का चीनी सीखना

- 4 आप चीनी भाषा में "बस ले लो" कैसे कहते हैं? #का चीनी उच्चारण, #का चीनी सीखना

- 5 चीनी भाषा में ड्राइव को क्या कहते हैं? #का चीनी उच्चारण, #का चीनी सीखना

- 6 तैराकी को चीनी भाषा में क्या कहते हैं? #का चीनी उच्चारण, #का चीनी सीखना

- 7 आप चीनी भाषा में साइकिल चलाने को क्या कहते हैं? #का चीनी उच्चारण, #का चीनी सीखना

- 8 आप चीनी भाषा में नमस्ते कैसे कहते हैं? 你好चीनी उच्चारण, 你好चीनी सीखना

- 9 आप चीनी भाषा में धन्यवाद कैसे कहते हैं? 谢谢चीनी उच्चारण, 谢谢चीनी सीखना

- 10 How to say goodbye in Chinese? 再见Chinese pronunciation, 再见Chinese learning