Containerizing .NET: Part 2 - Considerations

This is part 2 of the Containerizing .NET series. You can read the series of articles here:

- Containerizing .NET: Part 1 - A Guide to Containerizing .NET Applications

- Containerizing .NET: Part 2 - Considerations

Considerations

Welcome to the second installment in our series on containerizing .NET applications. The first article introduced Dockerfiles and the dotnet publish command; this one covers the main considerations when moving .NET applications into containers. As containers become a standard deployment unit, understanding these factors helps developers deploy applications reliably in containerized environments.

Architectural Alignment and Security

Architectural Considerations in Containerization

The architectural style of your application, whether a microservices pattern or a monolithic design, plays a large role in shaping the containerization strategy. Regardless of the architecture chosen, however, several considerations apply to any transition to a containerized environment.

CI/CD and Deployment Strategies

The move to containers necessitates a reevaluation of your Continuous Integration/Continuous Deployment (CI/CD) pipelines and deployment strategies. Containers offer the advantage of immutable deployment artifacts, which can streamline the CI/CD process by ensuring consistency across different environments. However, this also means adapting your pipelines to handle container image building, storage, and deployment, which may involve new tools and practices. I will dive into those in a future article.

Scalability Concerns

Ensuring Scalable Design

Your application must be architected to support horizontal scaling, allowing for the addition or removal of container instances based on demand. This scalability is crucial for optimizing resource use and maintaining performance across varying loads.

Session State Management

In containerized architectures, statelessness is paramount. Containers, designed to be ephemeral, should not maintain session states internally, as this can impede scalability. Opt for external storage solutions like Redis, SQL databases, or distributed caches to handle session states, ensuring your application remains scalable and responsive to load changes.
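To make the statelessness point concrete, here is a minimal sketch, written in Python for brevity rather than C#. The names `SessionStore`, `InMemorySessionStore`, and `handle_request` are hypothetical, and the in-memory class only stands in for an external store such as Redis so the example runs without a server.

```python
from typing import Dict, Optional, Protocol


class SessionStore(Protocol):
    """Hypothetical interface for a session store that lives outside the container."""

    def get(self, session_id: str) -> Optional[Dict[str, str]]: ...

    def set(self, session_id: str, data: Dict[str, str]) -> None: ...


class InMemorySessionStore:
    """Stand-in for Redis or a SQL-backed store; only here so the sketch runs locally."""

    def __init__(self) -> None:
        self._data: Dict[str, Dict[str, str]] = {}

    def get(self, session_id: str) -> Optional[Dict[str, str]]:
        value = self._data.get(session_id)
        return dict(value) if value is not None else None

    def set(self, session_id: str, data: Dict[str, str]) -> None:
        self._data[session_id] = dict(data)


def handle_request(store: SessionStore, session_id: str, item: str) -> Dict[str, str]:
    """Simulate one container instance handling one request.

    Nothing is kept in process memory between calls: session state is read
    from the shared store and written back before returning.
    """
    session = store.get(session_id) or {}
    session["last_item"] = item
    session["count"] = str(int(session.get("count", "0")) + 1)
    store.set(session_id, session)
    return session


shared_store = InMemorySessionStore()
# The two calls stand in for two different container instances behind a load balancer.
first = handle_request(shared_store, "abc", "book")
second = handle_request(shared_store, "abc", "pen")
```

Because the handler depends only on the store interface, any instance can serve any request, which is what allows instances to be added or removed freely.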

Dependency Management Strategies

Linux Compatibility

Migration to containerized environments often involves transitioning from Windows to Linux-based containers. Ensure that your application’s dependencies and libraries are compatible with Linux, and that your Dockerfile and container environment are configured accordingly.

Handling Internal Dependencies

Ensure all necessary libraries and components are either bundled within the container or accessible via network endpoints, enabling your application to function seamlessly in its containerized form.

Integrating with External Services

Containerization demands a dynamic approach to connecting with external services like databases and messaging systems. Implement configurations that allow for flexible service discovery and connections through environment variables or specialized discovery tools.

File and Network Access

File Access Considerations

The encapsulated filesystem within containers requires a strategic approach to file access. Unlike traditional deployments where applications might directly access local file paths, containerized applications should be designed with portability and flexibility in mind. Here are some strategies to consider:

- Volume Mounts: Use Docker volumes or Kubernetes persistent volumes to persist data outside containers, enabling state persistence across container restarts and deployments. This approach is particularly useful for databases, logs, or any data that needs to survive beyond the container’s lifecycle.

- Cloud Storage Services: For applications that require access to large amounts of data or need to share data across multiple instances, integrating with cloud storage services (like Azure Blob Storage, Amazon S3, or Google Cloud Storage) provides a scalable and secure solution. This not only decouples your application from the underlying infrastructure but also enhances scalability by leveraging the cloud provider’s global network.

- File Permissions and Security: Carefully manage file permissions within the container to prevent unauthorized access. Ensure that your application runs with the least privileges necessary to access only the files it needs, enhancing security within the containerized environment.
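As a rough illustration of the volume-mount strategy, the Python sketch below resolves the write location from configuration instead of hard-coding a local path. The variable name `DATA_DIR`, the default mount point `/data`, and both function names are assumptions made for this example only.

```python
import tempfile
from pathlib import Path
from typing import Mapping


def resolve_data_dir(env: Mapping[str, str], default: str = "/data") -> Path:
    """Return the directory the application should write to.

    DATA_DIR is a hypothetical variable name; the default is an assumed
    mount point for a Docker volume or Kubernetes persistent volume.
    """
    return Path(env.get("DATA_DIR", default))


def save_report(data_dir: Path, name: str, content: str) -> Path:
    """Write a file under the configured data directory and return its path."""
    data_dir.mkdir(parents=True, exist_ok=True)
    target = data_dir / name
    target.write_text(content, encoding="utf-8")
    return target


# Local demonstration: a temporary directory plays the role of the mounted volume.
with tempfile.TemporaryDirectory() as volume:
    written = save_report(resolve_data_dir({"DATA_DIR": volume}), "report.txt", "ok")
    report_text = written.read_text(encoding="utf-8")
```

The same code then runs unchanged on a developer machine and in a container, with only the mount configuration differing.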

Network Configuration and Service Discovery

Containers often run in orchestrated environments where networking is dynamically managed, and services discover each other through service discovery mechanisms rather than static IP addresses or hostnames. Consider these aspects to ensure robust network configurations:

- Service Discovery: Utilize service discovery tools provided by container orchestration platforms (like Kubernetes DNS or Docker Swarm’s embedded DNS) to dynamically discover and communicate with other services within the cluster.

- Container Networking Models: Familiarize yourself with the container network models (such as bridge, overlay, or host networks) and choose the appropriate model based on your application’s needs. For instance, overlay networks facilitate communication between containers across different hosts in a cluster.

- Port Configuration and Exposure: Explicitly define and manage which ports are exposed by your container and how they are mapped to the host system. This is crucial for ensuring that your application’s services are accessible as intended while maintaining control over network security.
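The service discovery idea can be sketched as follows, in Python for brevity. The function prefers the `<NAME>_SERVICE_HOST` and `<NAME>_SERVICE_PORT` environment variables that Kubernetes injects for Services that existed when the pod started, and otherwise falls back to the service name so that cluster DNS can resolve it. The service name `orders-api`, the addresses, and the function name are hypothetical.

```python
from typing import Mapping, Tuple


def resolve_service(name: str, env: Mapping[str, str], default_port: int) -> Tuple[str, int]:
    """Resolve a service's host and port without hard-coding an IP address.

    Uses the Kubernetes-style service environment variables when both are
    present; otherwise returns the service name itself, relying on DNS.
    """
    prefix = name.upper().replace("-", "_")
    host = env.get(f"{prefix}_SERVICE_HOST")
    port = env.get(f"{prefix}_SERVICE_PORT")
    if host and port:
        return host, int(port)
    return name, default_port


# Hypothetical values for illustration only.
cluster_env = {"ORDERS_API_SERVICE_HOST": "10.0.0.12", "ORDERS_API_SERVICE_PORT": "8080"}
in_cluster = resolve_service("orders-api", cluster_env, default_port=80)
via_dns = resolve_service("orders-api", {}, default_port=80)
```

In most clusters the DNS name alone is sufficient; the environment-variable branch is shown only to illustrate that the address comes from the platform rather than from the image.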

Identity and Authentication Adjustments

In containerized environments, traditional methods of managing identity and authentication may not directly apply. Here are ways to adapt:

- Managed Identities for Azure Resources: Azure offers managed identities, automatically handling the management of credentials for accessing Azure services. This eliminates the need to store sensitive credentials in your application code or configuration.

- OAuth and OpenID Connect: Implement OAuth 2.0 and OpenID Connect protocols to manage user identities and authenticate against identity providers. This approach is effective for applications that require user authentication and can be integrated with most identity providers.

- Secrets Management: Use a secrets management tool (like Azure Key Vault, AWS Secrets Manager, or HashiCorp Vault) to securely store and access API keys, database connection strings, and other sensitive information. Modern container orchestration platforms, such as Kubernetes, offer native secrets management capabilities, allowing you to inject secrets into containers at runtime securely.

- Role-Based Access Control (RBAC): Implement RBAC within your application and infrastructure to ensure that only authorized users and services can perform specific actions. This is particularly important in microservices architectures where different services may have different access requirements.
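For the RBAC item, a minimal application-level check might look like the Python sketch below. The role names, the permission strings, and `is_allowed` are invented for illustration; a real system would typically take roles from token claims issued by the identity provider.

```python
from typing import Dict, FrozenSet, Iterable

# Hypothetical role-to-permission mapping.
ROLE_PERMISSIONS: Dict[str, FrozenSet[str]] = {
    "reader": frozenset({"orders:read"}),
    "operator": frozenset({"orders:read", "orders:write"}),
}


def is_allowed(roles: Iterable[str], permission: str) -> bool:
    """Return True if any of the caller's roles grants the permission.

    Unknown roles grant nothing, so the check denies by default.
    """
    return any(permission in ROLE_PERMISSIONS.get(role, frozenset()) for role in roles)
```

For example, `is_allowed(["reader"], "orders:write")` evaluates to `False`, while the same call with `["operator"]` evaluates to `True`.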

Configuration Management

Configuration management is a critical part of containerizing .NET applications. Because containerized environments are dynamic, applications need a flexible and secure way to be configured, so they can adapt to different environments without changes to the container images themselves.

The .NET ecosystem offers various strategies for managing configurations effectively, aligning with cloud-native best practices. There are configuration providers for reading settings from environment variables, JSON files, and other sources, enabling applications to adapt to different environments seamlessly. Here are some strategies to consider:

Environment Variables

- Dynamic Configuration: Utilize environment variables to externalize configuration settings, enabling applications to adapt to various environments (development, staging, production) seamlessly.

- Best Practices: Define environment variables in container orchestration configurations, such as Kubernetes manifests or Docker Compose files, to inject settings at runtime.
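The layering idea (defaults overridden by environment variables) can be sketched in a few lines of Python. The sketch imitates the convention used by .NET's environment variable configuration provider, where a double underscore separates hierarchy levels, but it is a simplified stand-in and not the provider itself: it is case-sensitive, and the `APP_` prefix and the function name are assumptions.

```python
import json
from typing import Any, Dict, Mapping


def apply_env_overrides(
    settings: Dict[str, Any], env: Mapping[str, str], prefix: str = "APP_"
) -> Dict[str, Any]:
    """Return a copy of settings with prefixed environment overrides applied.

    A variable such as APP_Database__Host overrides settings["Database"]["Host"].
    Variables without the prefix are ignored.
    """
    result = json.loads(json.dumps(settings))  # deep copy of JSON-compatible data
    for key, value in env.items():
        if not key.startswith(prefix):
            continue
        path = key[len(prefix):].split("__")
        if not all(path):
            continue  # skip malformed names such as "APP_" or "APP_A____B"
        node = result
        for part in path[:-1]:
            child = node.get(part)
            if not isinstance(child, dict):
                child = {}
                node[part] = child
            node = child
        node[path[-1]] = value
    return result


defaults = {"Database": {"Host": "localhost", "Port": "5432"}}
merged = apply_env_overrides(
    defaults, {"APP_Database__Host": "db.internal", "PATH": "/usr/bin"}
)
```

The image ships with the defaults, and each environment supplies only the values that differ.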

Configuration Files

- Externalized Settings: Store configuration settings in external files (e.g., appsettings.json for .NET applications) that can be mounted into containers at runtime.

- Volume Mounts: Use Docker volumes or Kubernetes ConfigMaps and Secrets to mount configuration files into containers, ensuring sensitive information is managed securely.

Centralized Configuration Services

- Cloud Services: Leverage cloud-based configuration services like Azure App Configuration or AWS Parameter Store to centralize and manage application settings.

- Service Discovery: Integrate service discovery mechanisms to dynamically locate services and resources, reducing the need for hard-coded configurations.

Secrets Management

- Secure Storage: Utilize dedicated secrets management tools (e.g., Azure Key Vault, HashiCorp Vault) to securely store and manage sensitive configuration data such as passwords, tokens, and connection strings.

- Runtime Injection: Automate the injection of secrets into containers at runtime using platforms like Kubernetes Secrets, CSI Secret Store, or specific cloud provider integrations.
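One common shape of runtime injection is a mounted directory in which each file holds one secret value, which is how Kubernetes projects a Secret volume. The Python sketch below reads such a file; the secret name `db-password`, its value, and the function name are hypothetical, and a temporary directory stands in for the mount.

```python
import tempfile
from pathlib import Path
from typing import Optional


def read_secret(name: str, secrets_dir: Path) -> Optional[str]:
    """Read one secret from a directory where each file holds one value.

    Returns None when the secret is not mounted, so callers can fail fast
    with a clear message instead of using an empty credential.
    """
    candidate = secrets_dir / name
    if not candidate.is_file():
        return None
    return candidate.read_text(encoding="utf-8").strip()


# Local demonstration: the temporary directory plays the role of the secret mount.
with tempfile.TemporaryDirectory() as mount:
    (Path(mount) / "db-password").write_text("s3cr3t\n", encoding="utf-8")
    password = read_secret("db-password", Path(mount))
    missing = read_secret("api-key", Path(mount))
```

The secret never appears in the image or in source control; only the mount path is part of the application's configuration.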

Immutable Configurations

- Immutable Infrastructure: Adopt an immutable infrastructure mindset, where configuration changes require redeploying containers rather than modifying running containers. This approach enhances consistency, reliability, and auditability across environments.

Configuration Drift Prevention

- Version Control: Keep configuration files and definitions under version control to track changes and prevent configuration drift.

- Continuous Integration: Integrate configuration management into the CI/CD pipeline, ensuring configurations are tested and validated before deployment.

Incorporating these configuration management strategies within the containerization process for .NET applications not only enhances flexibility and scalability but also bolsters security and compliance, aligning with best practices for cloud-native development.

Security and Compliance

In the realm of containerization, adherence to stringent security and compliance frameworks becomes paramount. The encapsulated nature of containers introduces unique security considerations:

- Vulnerability Scanning: Scanning container images for known vulnerabilities with automated tools at each stage of the CI/CD pipeline helps keep vulnerable images out of deployment.

- Non-Root Privileges: Running containers as non-root users reduces the risk of privilege escalation if a container is compromised. This practice is essential for limiting the attack surface and safeguarding the underlying host system.

- Secrets Management: Securely handling secrets necessitates moving away from embedding sensitive information within container images or environment variables. Utilizing dedicated secrets management tools or services, such as Kubernetes Secrets, HashiCorp Vault, or Azure Key Vault, allows for dynamic, secure injection of credentials and keys at runtime.

- Network Policies and Firewall Rules: Enforcing strict network policies and firewall rules to control inbound and outbound traffic to containers can prevent unauthorized access and mitigate potential attacks.

- Read-Only Filesystems: Where applicable, configuring containers with read-only filesystems can prevent malicious attempts to alter the runtime environment, further enhancing security posture.

- Continuous Monitoring and Logging: Implement real-time monitoring and logging to detect unusual activity and potential security breaches. Tools like Prometheus, Grafana, and the ELK stack help with observing container behavior and maintaining operational integrity.
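The non-root practice is normally enforced in the image or by the orchestrator, but an application can also check it at startup as a second line of defense. The Python sketch below shows one way to do that; both function names are hypothetical, and `os.geteuid` is available on Unix-like systems only.

```python
import os
from typing import Optional


def running_as_root(euid: Optional[int] = None) -> bool:
    """Return True when the effective user ID is 0.

    The euid parameter exists so the check can be exercised without
    actually changing the process user.
    """
    if euid is None:
        euid = os.geteuid()  # Unix-like systems only
    return euid == 0


def ensure_non_root(euid: Optional[int] = None) -> None:
    """Raise at startup if the process would run as root."""
    if running_as_root(euid):
        raise PermissionError(
            "refusing to start as root; configure a non-root user for the container"
        )
```

Calling `ensure_non_root()` early in startup makes a misconfigured deployment fail loudly instead of running with more privileges than intended.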

Tools, Frameworks, and Ecosystems

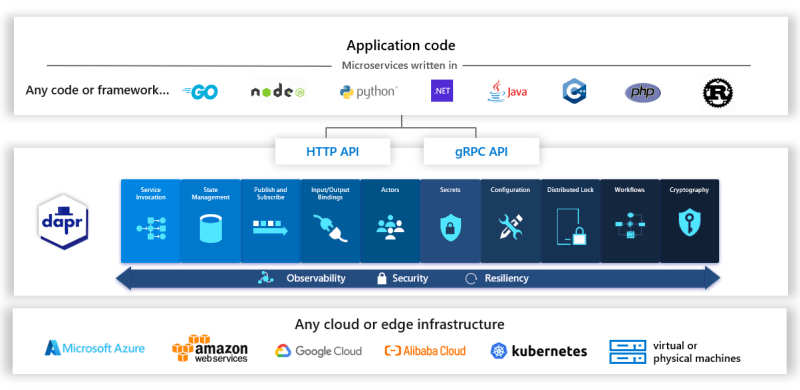

Distributed Application Runtime (Dapr)

Dapr (Distributed Application Runtime) simplifies the development of distributed applications. It abstracts complex tasks such as state management, service discovery, and messaging into straightforward, consistent APIs, enabling developers to focus on business logic rather than infrastructure concerns. This abstraction is particularly beneficial in a containerized environment, where applications must be flexible, scalable, and capable of running across diverse platforms.

Dapr’s cloud-agnostic design allows integration with various cloud services, including Azure, without locking developers into a specific ecosystem. It supports dynamic configuration and facilitates local development, mirroring cloud environments on developers’ machines. By decoupling application logic from infrastructure details, Dapr enhances portability and eases the transition of .NET applications into the cloud-native landscape, which makes it a valuable tool for modern application development.

Azure Developer CLI

The Azure Developer CLI (azd) significantly streamlines the journey of containerizing and deploying .NET applications to the cloud. A pivotal feature, azd init, automates the scaffolding process, generating Dockerfiles and Azure resource definitions tailored to your project’s needs. This command is instrumental for developers seeking to swiftly prepare their applications for Azure, ensuring an optimized setup for either Azure Container Apps (ACA) or Azure Kubernetes Service (AKS). By abstracting the complexities of Docker and Kubernetes, azd allows developers to concentrate on building their applications, while effortlessly integrating with Azure’s robust cloud infrastructure.

.NET Aspire

.NET Aspire equips developers with an opinionated framework tailored for crafting observable, distributed .NET applications that are primed for cloud environments. It simplifies the development process by offering a curated collection of NuGet packages, each addressing specific cloud-native application challenges such as service integration, state management, and messaging. .NET Aspire stands out by facilitating the creation of microservices and distributed applications, enabling seamless service connections and promoting architectural best practices. This framework not only accelerates the development of cloud-ready .NET applications but also ensures they are scalable, resilient, and maintainable, aligning with the principles of modern, cloud-native development.

Conclusion

The journey to containerizing .NET applications is paved with considerations that span architecture, security, performance, and beyond. By addressing these aspects thoughtfully, developers can harness the full potential of containerization, ensuring their .NET applications are efficient, secure, and poised for the cloud-native future. Stay tuned for subsequent articles, where we’ll explore strategies and tools to navigate these considerations, empowering your .NET applications to excel in a containerized landscape.
