Modelado de temas con Topc: Dreyfus, AI y Wordclouds

Publicado el 2024-07-30

Extracción de información de archivos PDF con Python: una guía completa

Este script demuestra un poderoso flujo de trabajo para procesar archivos PDF, extraer texto, tokenizar oraciones y realizar modelado de temas con visualización, diseñado para un análisis eficiente y profundo.

Descripción general de las bibliotecas

- os: Proporciona funciones para interactuar con el sistema operativo.

- matplotlib.pyplot: se utiliza para crear visualizaciones estáticas, animadas e interactivas en Python.

- nltk: Natural Language Toolkit, un conjunto de bibliotecas y programas para el procesamiento del lenguaje natural.

- pandas: Biblioteca de análisis y manipulación de datos.

- pdftotext: Biblioteca para convertir documentos PDF a texto sin formato.

- re: proporciona operaciones de coincidencia de expresiones regulares.

- seaborn: Biblioteca de visualización de datos estadísticos basada en matplotlib.

- nltk.tokenize.sent_tokenize: Función NLTK para tokenizar una cadena en oraciones.

- top2vec: Biblioteca para modelado de temas y búsqueda semántica.

- wordcloud: Biblioteca para crear nubes de palabras a partir de datos de texto.

Configuración inicial

Importar módulos

import os import matplotlib.pyplot as plt import nltk import pandas as pd import pdftotext import re import seaborn as sns from nltk.tokenize import sent_tokenize from top2vec import Top2Vec from wordcloud import WordCloud from cleantext import clean

A continuación, asegúrese de que el tokenizador punkt esté descargado:

nltk.download('punkt')

Normalización de texto

def normalize_text(text):

"""Normalize text by removing special characters and extra spaces,

and applying various other cleaning options."""

# Apply the clean function with specified parameters

cleaned_text = clean(

text,

fix_unicode=True, # fix various unicode errors

to_ascii=True, # transliterate to closest ASCII representation

lower=True, # lowercase text

no_line_breaks=False, # fully strip line breaks as opposed to only normalizing them

no_urls=True, # replace all URLs with a special token

no_emails=True, # replace all email addresses with a special token

no_phone_numbers=True, # replace all phone numbers with a special token

no_numbers=True, # replace all numbers with a special token

no_digits=True, # replace all digits with a special token

no_currency_symbols=True, # replace all currency symbols with a special token

no_punct=False, # remove punctuations

lang="en", # set to 'de' for German special handling

)

# Further clean the text by removing any remaining special characters except word characters, whitespace, and periods/commas

cleaned_text = re.sub(r"[^\w\s.,]", "", cleaned_text)

# Replace multiple whitespace characters with a single space and strip leading/trailing spaces

cleaned_text = re.sub(r"\s ", " ", cleaned_text).strip()

return cleaned_text

Extracción de texto PDF

def extract_text_from_pdf(pdf_path):

with open(pdf_path, "rb") as f:

pdf = pdftotext.PDF(f)

all_text = "\n\n".join(pdf)

return normalize_text(all_text)

Tokenización de oraciones

def split_into_sentences(text):

return sent_tokenize(text)

Procesamiento de múltiples archivos

def process_files(file_paths):

authors, titles, all_sentences = [], [], []

for file_path in file_paths:

file_name = os.path.basename(file_path)

parts = file_name.split(" - ", 2)

if len(parts) != 3 or not file_name.endswith(".pdf"):

print(f"Skipping file with incorrect format: {file_name}")

continue

year, author, title = parts

author, title = author.strip(), title.replace(".pdf", "").strip()

try:

text = extract_text_from_pdf(file_path)

except Exception as e:

print(f"Error extracting text from {file_name}: {e}")

continue

sentences = split_into_sentences(text)

authors.append(author)

titles.append(title)

all_sentences.extend(sentences)

print(f"Number of sentences for {file_name}: {len(sentences)}")

return authors, titles, all_sentences

Guardar datos en CSV

def save_data_to_csv(authors, titles, file_paths, output_file):

texts = []

for fp in file_paths:

try:

text = extract_text_from_pdf(fp)

sentences = split_into_sentences(text)

texts.append(" ".join(sentences))

except Exception as e:

print(f"Error processing file {fp}: {e}")

texts.append("")

data = pd.DataFrame({

"Author": authors,

"Title": titles,

"Text": texts

})

data.to_csv(output_file, index=False, quoting=1, encoding='utf-8')

print(f"Data has been written to {output_file}")

Cargando palabras vacías

def load_stopwords(filepath):

with open(filepath, "r") as f:

stopwords = f.read().splitlines()

additional_stopwords = ["able", "according", "act", "actually", "after", "again", "age", "agree", "al", "all", "already", "also", "am", "among", "an", "and", "another", "any", "appropriate", "are", "argue", "as", "at", "avoid", "based", "basic", "basis", "be", "been", "begin", "best", "book", "both", "build", "but", "by", "call", "can", "cant", "case", "cases", "claim", "claims", "class", "clear", "clearly", "cope", "could", "course", "data", "de", "deal", "dec", "did", "do", "doesnt", "done", "dont", "each", "early", "ed", "either", "end", "etc", "even", "ever", "every", "far", "feel", "few", "field", "find", "first", "follow", "follows", "for", "found", "free", "fri", "fully", "get", "had", "hand", "has", "have", "he", "help", "her", "here", "him", "his", "how", "however", "httpsabout", "ibid", "if", "im", "in", "is", "it", "its", "jstor", "june", "large", "lead", "least", "less", "like", "long", "look", "man", "many", "may", "me", "money", "more", "most", "move", "moves", "my", "neither", "net", "never", "new", "no", "nor", "not", "notes", "notion", "now", "of", "on", "once", "one", "ones", "only", "open", "or", "order", "orgterms", "other", "our", "out", "own", "paper", "past", "place", "plan", "play", "point", "pp", "precisely", "press", "put", "rather", "real", "require", "right", "risk", "role", "said", "same", "says", "search", "second", "see", "seem", "seems", "seen", "sees", "set", "shall", "she", "should", "show", "shows", "since", "so", "step", "strange", "style", "such", "suggests", "talk", "tell", "tells", "term", "terms", "than", "that", "the", "their", "them", "then", "there", "therefore", "these", "they", "this", "those", "three", "thus", "to", "todes", "together", "too", "tradition", "trans", "true", "try", "trying", "turn", "turns", "two", "up", "us", "use", "used", "uses", "using", "very", "view", "vol", "was", "way", "ways", "we", "web", "well", "were", "what", "when", "whether", "which", "who", "why", "with", "within", "works", "would", "years", "york", "you", "your", "suggests", "without"]

stopwords.extend(additional_stopwords)

return set(stopwords)

Filtrar palabras vacías de temas

def filter_stopwords_from_topics(topic_words, stopwords):

filtered_topics = []

for words in topic_words:

filtered_topics.append([word for word in words if word.lower() not in stopwords])

return filtered_topics

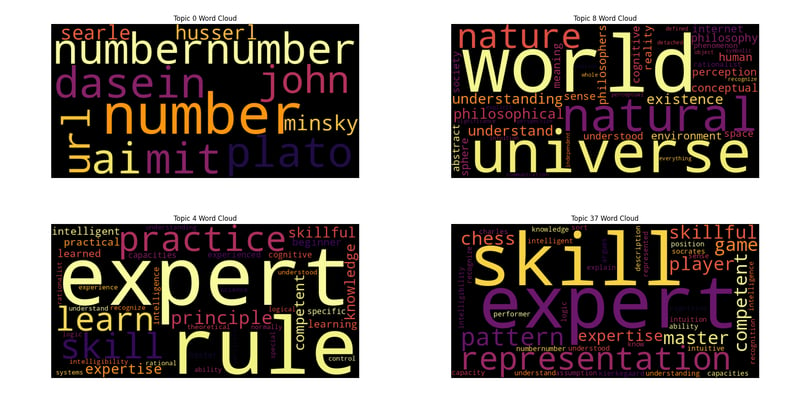

Generación de nube de palabras

def generate_wordcloud(topic_words, topic_num, palette='inferno'):

colors = sns.color_palette(palette, n_colors=256).as_hex()

def color_func(word, font_size, position, orientation, random_state=None, **kwargs):

return colors[random_state.randint(0, len(colors) - 1)]

wordcloud = WordCloud(width=800, height=400, background_color='black', color_func=color_func).generate(' '.join(topic_words))

plt.figure(figsize=(10, 5))

plt.imshow(wordcloud, interpolation='bilinear')

plt.axis('off')

plt.title(f'Topic {topic_num} Word Cloud')

plt.show()

Ejecución principal

file_paths = [f"/home/roomal/Desktop/Dreyfus-Project/Dreyfus/{fname}" for fname in os.listdir("/home/roomal/Desktop/Dreyfus-Project/Dreyfus/") if fname.endswith(".pdf")]

authors, titles, all_sentences = process_files(file_paths)

output_file = "/home/roomal/Desktop/Dreyfus-Project/Dreyfus_Papers.csv"

save_data_to_csv(authors, titles, file_paths, output_file)

stopwords_filepath = "/home/roomal/Documents/Lists/stopwords.txt"

stopwords = load_stopwords(stopwords_filepath)

try:

topic_model = Top2Vec(

all_sentences,

embedding_model="distiluse-base-multilingual-cased",

speed="deep-learn",

workers=6

)

print("Top2Vec model created successfully.")

except ValueError as e:

print(f"Error initializing Top2Vec: {e}")

except Exception as e:

print(f"Unexpected error: {e}")

num_topics = topic_model.get_num_topics()

topic_words, word_scores, topic_nums = topic_model.get_topics(num_topics)

filtered_topic_words = filter_stopwords_from_topics(topic_words, stopwords)

for i, words in enumerate(filtered_topic_words):

print(f"Topic {i}: {', '.join(words)}")

keywords = ["heidegger"]

topic_words, word_scores, topic_scores, topic_nums = topic_model.search_topics(keywords=keywords, num_topics=num_topics)

filtered

_search_topic_words = filter_stopwords_from_topics(topic_words, stopwords)

for i, words in enumerate(filtered_search_topic_words):

generate_wordcloud(words, topic_nums[i])

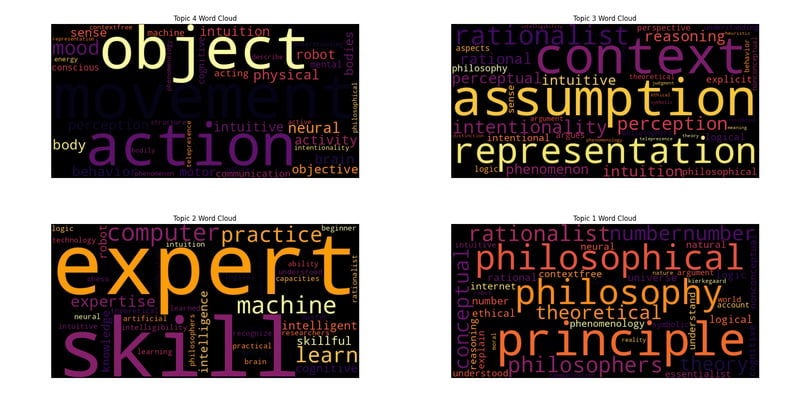

for i in range(reduced_num_topics):

topic_words = topic_model.topic_words_reduced[i]

filtered_words = [word for word in topic_words if word.lower() not in stopwords]

print(f"Reduced Topic {i}: {', '.join(filtered_words)}")

generate_wordcloud(filtered_words, i)

Reducir el número de temas.

reduced_num_topics = 5

topic_mapping = topic_model.hierarchical_topic_reduction(num_topics=reduced_num_topics)

# Print reduced topics and generate word clouds

for i in range(reduced_num_topics):

topic_words = topic_model.topic_words_reduced[i]

filtered_words = [word for word in topic_words if word.lower() not in stopwords]

print(f"Reduced Topic {i}: {', '.join(filtered_words)}")

generate_wordcloud(filtered_words, i)

Declaración de liberación

Este artículo se reproduce en: https://dev.to/roomals/topic-modeling-with-top2vec-dreyfus-ai-and-wordclouds-1ggl?1 Si hay alguna infracción, comuníquese con [email protected] para eliminarla. él

Último tutorial

Más>

-

¿Por qué recibo un error de "no pude encontrar una implementación del patrón de consulta" en mi consulta de Silverlight Linq?Ausencia de implementación del patrón de consulta: Resolver "no se pudo encontrar" errores en una aplicación de Silverlight, un inte...Programación Publicado el 2025-07-13

¿Por qué recibo un error de "no pude encontrar una implementación del patrón de consulta" en mi consulta de Silverlight Linq?Ausencia de implementación del patrón de consulta: Resolver "no se pudo encontrar" errores en una aplicación de Silverlight, un inte...Programación Publicado el 2025-07-13 -

¿Cómo redirigir múltiples tipos de usuarios (estudiantes, maestros y administradores) a sus respectivas actividades en una aplicación Firebase?rojo: cómo redirigir múltiples tipos de usuarios a las actividades respectivas Comprender el problema en una aplicación de votación basada...Programación Publicado el 2025-07-13

¿Cómo redirigir múltiples tipos de usuarios (estudiantes, maestros y administradores) a sus respectivas actividades en una aplicación Firebase?rojo: cómo redirigir múltiples tipos de usuarios a las actividades respectivas Comprender el problema en una aplicación de votación basada...Programación Publicado el 2025-07-13 -

Causas y soluciones para la falla de detección de cara: Error -215Error manejo: resolución "error: (-215)! Vacía () en function detectMultiscale" en openCV cuando intente utilizar el método detectar...Programación Publicado el 2025-07-13

Causas y soluciones para la falla de detección de cara: Error -215Error manejo: resolución "error: (-215)! Vacía () en function detectMultiscale" en openCV cuando intente utilizar el método detectar...Programación Publicado el 2025-07-13 -

¿Cómo usar correctamente las consultas como los parámetros PDO?usando consultas similares en pdo al intentar implementar una consulta similar en PDO, puede encontrar problemas como el que se describe en la...Programación Publicado el 2025-07-13

¿Cómo usar correctamente las consultas como los parámetros PDO?usando consultas similares en pdo al intentar implementar una consulta similar en PDO, puede encontrar problemas como el que se describe en la...Programación Publicado el 2025-07-13 -

¿Cómo eliminar los emojis de las cuerdas en Python: una guía para principiantes para solucionar errores comunes?Eliminación de emojis de las cadenas en python el código de python proporcionado para eliminar emojis falla porque contiene errores de sintaxi...Programación Publicado el 2025-07-13

¿Cómo eliminar los emojis de las cuerdas en Python: una guía para principiantes para solucionar errores comunes?Eliminación de emojis de las cadenas en python el código de python proporcionado para eliminar emojis falla porque contiene errores de sintaxi...Programación Publicado el 2025-07-13 -

¿Por qué las imágenes todavía tienen fronteras en Chrome? `Border: Ninguno;` Solución inválidaeliminando el borde de la imagen en Chrome un problema frecuente encontrado cuando se trabaja con imágenes en Chrome e IE9 es la apariencia de...Programación Publicado el 2025-07-13

¿Por qué las imágenes todavía tienen fronteras en Chrome? `Border: Ninguno;` Solución inválidaeliminando el borde de la imagen en Chrome un problema frecuente encontrado cuando se trabaja con imágenes en Chrome e IE9 es la apariencia de...Programación Publicado el 2025-07-13 -

¿Cómo mostrar correctamente la fecha y hora actuales en el formato "DD/MM/YYYY HH: MM: SS.SS" en Java?cómo mostrar la fecha y la hora actuales en "dd/mm/aa radica en el uso de diferentes instancias de SimpleFormat con diferentes patrones de f...Programación Publicado el 2025-07-13

¿Cómo mostrar correctamente la fecha y hora actuales en el formato "DD/MM/YYYY HH: MM: SS.SS" en Java?cómo mostrar la fecha y la hora actuales en "dd/mm/aa radica en el uso de diferentes instancias de SimpleFormat con diferentes patrones de f...Programación Publicado el 2025-07-13 -

Consejos para encontrar la posición del elemento en Java ArrayRecuperando la posición del elemento en las matrices Java dentro de la clase de matrices de Java, no hay un método directo de "índice de ...Programación Publicado el 2025-07-13

Consejos para encontrar la posición del elemento en Java ArrayRecuperando la posición del elemento en las matrices Java dentro de la clase de matrices de Java, no hay un método directo de "índice de ...Programación Publicado el 2025-07-13 -

¿Cómo puede usar los datos de Group by para pivotar en MySQL?pivotando resultados de consulta usando el grupo mySQL mediante en una base de datos relacional, los datos giratorios se refieren al reorganiz...Programación Publicado el 2025-07-13

¿Cómo puede usar los datos de Group by para pivotar en MySQL?pivotando resultados de consulta usando el grupo mySQL mediante en una base de datos relacional, los datos giratorios se refieren al reorganiz...Programación Publicado el 2025-07-13 -

Implementación dinámica reflectante de la interfaz GO para la exploración del método RPCReflection para la implementación de la interfaz dinámica en Go Reflection In GO es una herramienta poderosa que permite la inspección y manip...Programación Publicado el 2025-07-13

Implementación dinámica reflectante de la interfaz GO para la exploración del método RPCReflection para la implementación de la interfaz dinámica en Go Reflection In GO es una herramienta poderosa que permite la inspección y manip...Programación Publicado el 2025-07-13 -

¿Cómo los map.entry de Java y simplificando la gestión de pares de valores clave?una colección integral para pares de valor: Introducción de Java Map.entry y SimpleEntry en Java, al definir una colección donde cada elemento...Programación Publicado el 2025-07-13

¿Cómo los map.entry de Java y simplificando la gestión de pares de valores clave?una colección integral para pares de valor: Introducción de Java Map.entry y SimpleEntry en Java, al definir una colección donde cada elemento...Programación Publicado el 2025-07-13 -

¿Cómo evitar presentaciones duplicadas después de la actualización del formulario?evitando las presentaciones duplicadas con el manejo de actualización en el desarrollo web, es común encontrar el problema de los envíos dupli...Programación Publicado el 2025-07-13

¿Cómo evitar presentaciones duplicadas después de la actualización del formulario?evitando las presentaciones duplicadas con el manejo de actualización en el desarrollo web, es común encontrar el problema de los envíos dupli...Programación Publicado el 2025-07-13 -

¿Cómo verificar si un objeto tiene un atributo específico en Python?para determinar el atributo de objeto existencia Esta consulta busca un método para verificar la presencia de un atributo específico dentro de...Programación Publicado el 2025-07-13

¿Cómo verificar si un objeto tiene un atributo específico en Python?para determinar el atributo de objeto existencia Esta consulta busca un método para verificar la presencia de un atributo específico dentro de...Programación Publicado el 2025-07-13 -

¿Por qué no aparece mi imagen de fondo CSS?Solución de problemas: css La imagen de fondo que no aparece ha encontrado un problema en el que su imagen de fondo no se carga a pesar de las...Programación Publicado el 2025-07-13

¿Por qué no aparece mi imagen de fondo CSS?Solución de problemas: css La imagen de fondo que no aparece ha encontrado un problema en el que su imagen de fondo no se carga a pesar de las...Programación Publicado el 2025-07-13 -

¿Cómo analizar las matrices JSON en ir usando el paquete `JSON`?Parsing Json Matray en Go con el paquete JSON Problema: ¿Cómo puede analizar una cadena JSON que representa una matriz en ir usando el paque...Programación Publicado el 2025-07-13

¿Cómo analizar las matrices JSON en ir usando el paquete `JSON`?Parsing Json Matray en Go con el paquete JSON Problema: ¿Cómo puede analizar una cadena JSON que representa una matriz en ir usando el paque...Programación Publicado el 2025-07-13

Estudiar chino

- 1 ¿Cómo se dice "caminar" en chino? 走路 pronunciación china, 走路 aprendizaje chino

- 2 ¿Cómo se dice "tomar un avión" en chino? 坐飞机 pronunciación china, 坐飞机 aprendizaje chino

- 3 ¿Cómo se dice "tomar un tren" en chino? 坐火车 pronunciación china, 坐火车 aprendizaje chino

- 4 ¿Cómo se dice "tomar un autobús" en chino? 坐车 pronunciación china, 坐车 aprendizaje chino

- 5 ¿Cómo se dice conducir en chino? 开车 pronunciación china, 开车 aprendizaje chino

- 6 ¿Cómo se dice nadar en chino? 游泳 pronunciación china, 游泳 aprendizaje chino

- 7 ¿Cómo se dice andar en bicicleta en chino? 骑自行车 pronunciación china, 骑自行车 aprendizaje chino

- 8 ¿Cómo se dice hola en chino? 你好Pronunciación china, 你好Aprendizaje chino

- 9 ¿Cómo se dice gracias en chino? 谢谢Pronunciación china, 谢谢Aprendizaje chino

- 10 How to say goodbye in Chinese? 再见Chinese pronunciation, 再见Chinese learning

Decodificación de imagen base64

pinyin chino

Codificación Unicode

Compresión de cifrado de ofuscación JS

Herramienta de cifrado hexadecimal de URL

Herramienta de conversión de codificación UTF-8

Herramientas de codificación y decodificación Ascii en línea

herramienta de cifrado MD5

Herramienta de cifrado y descifrado de texto hash/hash en línea

Cifrado SHA en línea