K Nearest Neighbors Regression, Regression: Supervised Machine Learning

k-Nearest Neighbors Regression

k-Nearest Neighbors (k-NN) regression is a non-parametric method that predicts the output value based on the average (or weighted average) of the k-nearest training data points in the feature space. This approach can effectively model complex relationships in data without assuming a specific functional form.

The k-NN regression method can be summarized as follows:

- Distance Metric: The algorithm uses a distance metric (commonly Euclidean distance) to determine the "closeness" of data points.

- k Neighbors: The parameter k specifies how many nearest neighbors to consider when making predictions.

- Prediction: The predicted value for a new data point is the average (optionally weighted by distance) of the target values of its k nearest neighbors.
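The three steps above can be sketched from scratch with NumPy. This is a minimal illustration rather than how any particular library implements it; the function name `knn_regress`, the `weighted` flag, and the toy arrays are all hypothetical.

```python
import numpy as np

def knn_regress(X_train, y_train, x_query, k=3, weighted=False):
    """Predict one target value from the k nearest training points."""
    # Step 1: Euclidean distance from the query to every training point
    distances = np.sqrt(((X_train - x_query) ** 2).sum(axis=1))
    # Step 2: indices of the k smallest distances
    nearest = np.argsort(distances)[:k]
    # Step 3: plain average, or an inverse-distance weighted average
    if not weighted:
        return y_train[nearest].mean()
    # The small constant avoids division by zero for exact matches
    weights = 1.0 / (distances[nearest] + 1e-12)
    return np.average(y_train[nearest], weights=weights)

# Toy data (made up for illustration): y is simply 10 * x
X_toy = np.array([[1.0], [2.0], [3.0], [4.0], [5.0]])
y_toy = np.array([10.0, 20.0, 30.0, 40.0, 50.0])

query = np.array([2.9])
plain = knn_regress(X_toy, y_toy, query, k=3)
weighted = knn_regress(X_toy, y_toy, query, k=3, weighted=True)
print(plain, weighted)
```

For the query 2.9, the three nearest training points are x = 3, 2, and 4, so the plain average is 30. The weighted variant stays close to 30 as well because the point at x = 3 dominates the weights.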

Key Concepts

Non-Parametric: Unlike parametric models, k-NN does not assume a specific form for the underlying relationship between the input features and the target variable. This makes it flexible in capturing complex patterns.

Distance Calculation: The choice of distance metric can significantly affect the model's performance. Common metrics include Euclidean, Manhattan, and Minkowski distances.

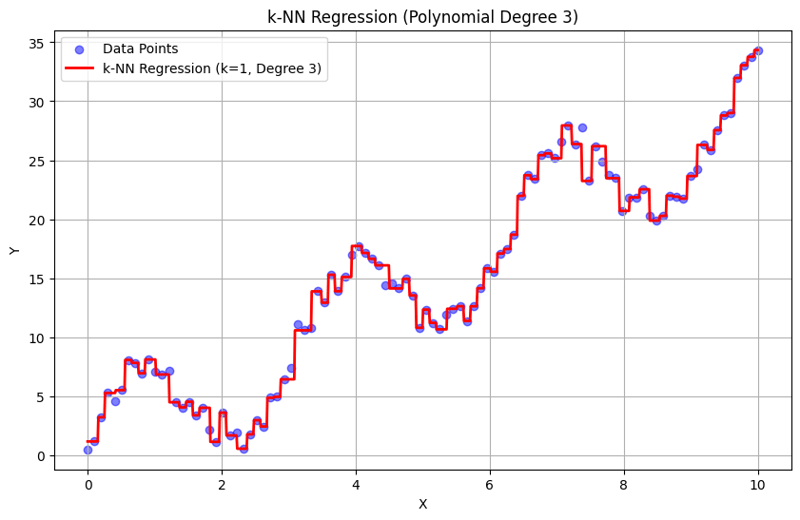

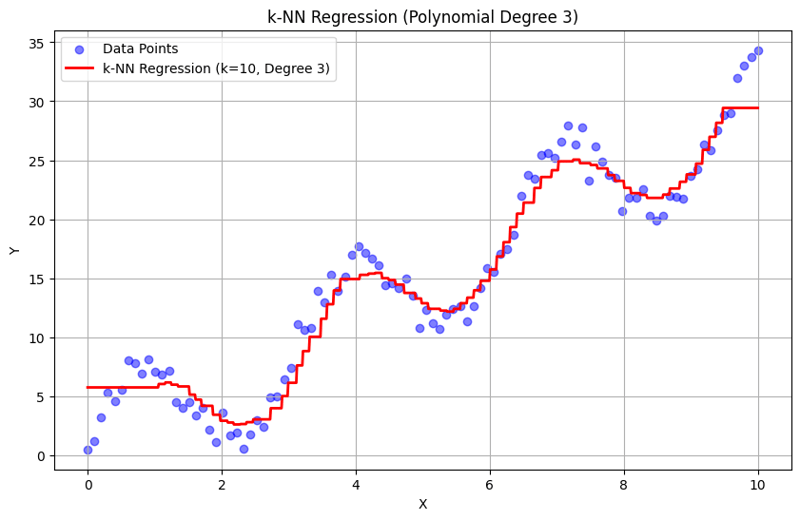
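As a rough sketch of how these metrics relate, the Minkowski distance with exponent p reduces to the Manhattan distance for p = 1 and to the Euclidean distance for p = 2. The helper name `minkowski_distance` and the two sample points are hypothetical, chosen only for illustration.

```python
import numpy as np

def minkowski_distance(a, b, p):
    """Minkowski distance of order p between two 1-D arrays."""
    return (np.abs(a - b) ** p).sum() ** (1.0 / p)

a = np.array([0.0, 0.0])
b = np.array([3.0, 4.0])

manhattan = minkowski_distance(a, b, p=1)  # |3| + |4|
euclidean = minkowski_distance(a, b, p=2)  # sqrt(3^2 + 4^2)
print(manhattan, euclidean)
```

In scikit-learn, `KNeighborsRegressor` exposes the same choice through its `metric` and `p` parameters; the defaults (`metric='minkowski'`, `p=2`) correspond to Euclidean distance.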

Choice of k: The number of neighbors (k) can be chosen based on cross-validation. A small k can lead to overfitting, while a large k can smooth out the prediction too much, potentially underfitting.
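One way to choose k by cross-validation might look like the sketch below. The synthetic data, the candidate list, and the variable names are assumptions made for illustration; the folds are shuffled because the inputs are sorted, and unshuffled folds would force the model to predict outside the range it was trained on.

```python
import numpy as np
from sklearn.model_selection import KFold, cross_val_score
from sklearn.neighbors import KNeighborsRegressor

# Synthetic data (an assumption): linear trend plus a sine wave plus noise
rng = np.random.default_rng(0)
X_cv = np.linspace(0, 10, 100).reshape(-1, 1)
y_cv = 3 * X_cv.ravel() + 5 * np.sin(2 * X_cv.ravel()) + rng.normal(0, 1, 100)

candidate_ks = [1, 3, 5, 10, 20]
folds = KFold(n_splits=5, shuffle=True, random_state=0)

cv_mse = {}
for k in candidate_ks:
    model = KNeighborsRegressor(n_neighbors=k)
    # scikit-learn reports negated MSE so that higher is always better
    scores = cross_val_score(model, X_cv, y_cv, cv=folds,
                             scoring="neg_mean_squared_error")
    cv_mse[k] = -scores.mean()

best_k = min(cv_mse, key=cv_mse.get)
for k in candidate_ks:
    print(f"k={k:2d}  mean CV MSE={cv_mse[k]:.3f}")
print("Selected k:", best_k)
```

The value selected depends on the data and on the candidate list, so it is best treated as a starting point rather than a fixed rule.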

k-Nearest Neighbors Regression Example

This example demonstrates how to use k-NN regression with polynomial features to model complex relationships while leveraging the non-parametric nature of k-NN.

Python Code Example

1. Import Libraries

import numpy as np
import matplotlib.pyplot as plt
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import PolynomialFeatures
from sklearn.neighbors import KNeighborsRegressor
from sklearn.metrics import mean_squared_error, r2_score

This block imports the necessary libraries for data manipulation, plotting, and machine learning.

2. Generate Sample Data

np.random.seed(42)  # For reproducibility
X = np.linspace(0, 10, 100).reshape(-1, 1)
y = 3 * X.ravel() + np.sin(2 * X.ravel()) * 5 + np.random.normal(0, 1, 100)

This block generates 100 evenly spaced inputs on the interval [0, 10] and a target built from a linear term, a sine term, and Gaussian noise, simulating real-world data variations.

3. Split the Dataset

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

This block splits the dataset into a training set (80%) and a testing set (20%) for model evaluation.

4. Create Polynomial Features

degree = 3  # Change this value for different polynomial degrees
poly = PolynomialFeatures(degree=degree)
X_poly_train = poly.fit_transform(X_train)
X_poly_test = poly.transform(X_test)

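This block generates polynomial features from the training and testing datasets. The transformer is fitted on the training set only and then applied to the test set. Because k-NN is already non-linear, the extra terms do not add a functional form; they change how distances between points are measured, which in turn changes which neighbors are selected.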
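To see what the expansion produces, a small sketch on two made-up input values (the array `X_small` is hypothetical) is shown here:

```python
import numpy as np
from sklearn.preprocessing import PolynomialFeatures

# Two illustrative inputs with a single feature each
X_small = np.array([[2.0], [3.0]])

# Degree 3 yields the columns: bias (1), x, x^2, x^3
expanded = PolynomialFeatures(degree=3).fit_transform(X_small)
print(expanded)
```

Each row becomes [1, x, x^2, x^3], so the input 2 maps to [1, 2, 4, 8] and the input 3 maps to [1, 3, 9, 27]. The higher-order columns have much larger ranges, so they carry the most weight in any distance computed on the expanded features.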

5. Create and Train the k-NN Regression Model

k = 5  # Number of neighbors
knn_model = KNeighborsRegressor(n_neighbors=k)
knn_model.fit(X_poly_train, y_train)

This block initializes the k-NN regression model and trains it using the polynomial features derived from the training dataset.

6. Make Predictions

y_pred = knn_model.predict(X_poly_test)

This block uses the trained model to make predictions on the test set.
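The imports include mean_squared_error and r2_score, but the walkthrough never calls them. A minimal sketch of how the test-set predictions might be scored follows. It rebuilds the same pipeline so that it runs on its own, and it assumes the three terms of y are joined by addition.

```python
import numpy as np
from sklearn.metrics import mean_squared_error, r2_score
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsRegressor
from sklearn.preprocessing import PolynomialFeatures

# Same data recipe as the walkthrough (the additions are an assumption)
np.random.seed(42)
X = np.linspace(0, 10, 100).reshape(-1, 1)
y = 3 * X.ravel() + np.sin(2 * X.ravel()) * 5 + np.random.normal(0, 1, 100)

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

# Fit the polynomial expansion on the training set only
poly = PolynomialFeatures(degree=3)
X_poly_train = poly.fit_transform(X_train)
X_poly_test = poly.transform(X_test)

knn_model = KNeighborsRegressor(n_neighbors=5)
knn_model.fit(X_poly_train, y_train)
y_pred = knn_model.predict(X_poly_test)

# Lower MSE is better; R^2 closer to 1 is better
mse = mean_squared_error(y_test, y_pred)
r2 = r2_score(y_test, y_pred)
print(f"Test MSE: {mse:.3f}")
print(f"Test R^2: {r2:.3f}")
```

Computing the same two numbers on the training set as well gives a quick check for overfitting: a large gap between training and test error suggests that k is too small.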

7. Plot the Results

plt.figure(figsize=(10, 6))
plt.scatter(X, y, color='blue', alpha=0.5, label='Data Points')
X_grid = np.linspace(0, 10, 1000).reshape(-1, 1)  # Dense grid for a smooth prediction curve
X_poly_grid = poly.transform(X_grid)
y_grid = knn_model.predict(X_poly_grid)
plt.plot(X_grid, y_grid, color='red', linewidth=2, label=f'k-NN Regression (k={k}, Degree {degree})')
plt.title(f'k-NN Regression (Polynomial Degree {degree})')
plt.xlabel('X')
plt.ylabel('Y')
plt.legend()
plt.grid(True)
plt.show()

This block draws a scatter plot of the actual data points and overlays the k-NN predictions evaluated on a dense grid of 1,000 inputs, visualizing the fitted curve.

Output with k = 1:

Output with k = 10:
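To put numbers on the contrast between k = 1 and k = 10, the sketch below compares training and test error for both settings. It is an illustration under assumptions: it uses the raw feature without the polynomial expansion to keep the example short, the data recipe assumes the terms of y are added together, and the dictionary names are hypothetical.

```python
import numpy as np
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsRegressor

# Synthetic data (an assumption): linear trend plus a sine wave plus noise
np.random.seed(42)
X = np.linspace(0, 10, 100).reshape(-1, 1)
y = 3 * X.ravel() + np.sin(2 * X.ravel()) * 5 + np.random.normal(0, 1, 100)

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

train_mse = {}
test_mse = {}
for k in (1, 10):
    model = KNeighborsRegressor(n_neighbors=k).fit(X_train, y_train)
    train_mse[k] = mean_squared_error(y_train, model.predict(X_train))
    test_mse[k] = mean_squared_error(y_test, model.predict(X_test))
    print(f"k={k:2d}  train MSE={train_mse[k]:.3f}  test MSE={test_mse[k]:.3f}")
```

With k = 1, every training point is its own nearest neighbor, so the training error is zero and the fitted curve passes through every noisy observation. With k = 10, the training error is no longer zero because each prediction averages over ten points, which gives a smoother curve.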

This structured approach demonstrates how to implement and evaluate k-Nearest Neighbors regression with polynomial features. By capturing local patterns through averaging the responses of nearby neighbors, k-NN regression effectively models complex relationships in data while providing a straightforward implementation. The choice of k and polynomial degree significantly influences the model's performance and flexibility in capturing underlying trends.
