Magic and Muscles: ETL with Mage and DuckDB using data from my powerlifting training

You can access the full pipeline here.

Mage

In my last post, I wrote about a dashboard I built with Python and Looker Studio to visualize my powerlifting training data. In this post, I will walk you step by step through an ETL (Extract, Transform, Load) pipeline built on the same dataset.

To build the pipeline, we will use Mage for orchestration and Python to transform and load the data. As the last step, we will export the transformed data into a DuckDB database.

To run Mage, we are going to use the official Docker image:

```shell
docker pull mageai/mageai:latest
```

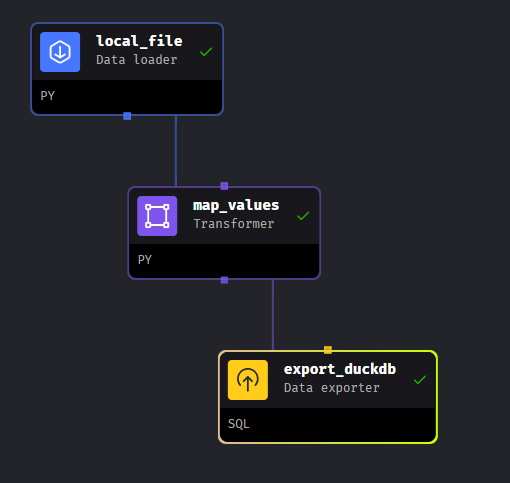

The pipeline will look like this:

Extract

The extraction is simple: we just read a CSV file into a pandas DataFrame so we can proceed to the next steps. The Data loader block already provides a template to work with; just remember to set the "sep" parameter in the read_csv() function so the data is loaded correctly.

```python
from mage_ai.io.file import FileIO
import pandas as pd

if 'data_loader' not in globals():
    from mage_ai.data_preparation.decorators import data_loader
if 'test' not in globals():
    from mage_ai.data_preparation.decorators import test


@data_loader
def load_data_from_file(*args, **kwargs):
    filepath = 'default_repo/data_strong.csv'
    # This CSV uses ';' as the column separator, so pandas' default ',' won't work
    df = pd.read_csv(filepath, sep=';')
    return df


@test
def test_output(output, *args) -> None:
    assert output is not None, 'The output is undefined'
```
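To see why "sep" matters, here is a small standalone sketch that runs outside Mage. It assumes a hypothetical two-row sample in the same semicolon-separated layout; the column names are the ones the transformer selects later, and the values are made up for illustration.

```python
import io

import pandas as pd

# Hypothetical sample in a semicolon-separated layout (values are invented).
sample = io.StringIO(
    "Date;Workout Name;Exercise Name;Weight;Reps;Workout Duration\n"
    "2023-01-02 18:00:00;Day A;Squat (Barbell);100;5;1h 10m\n"
    "2023-01-02 18:00:00;Day A;Leg Press;180;10;1h 10m\n"
)

# Without sep, pandas splits on ',' and each line collapses into one column.
wrong = pd.read_csv(sample)
print(wrong.shape)  # (2, 1)

sample.seek(0)  # rewind the buffer before reading it a second time
right = pd.read_csv(sample, sep=";")
print(right.shape)  # (2, 6)
print(list(right.columns))
```

If the export used decimal commas in the Weight column, those rows would split differently again, which is one more reason to set the separator explicitly.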

Transform

In this step we use the Transformer block, which offers many templates to choose from; we will select the custom template.

The transformation we need is basically a mapping of the Exercise Name column, so we can identify which body part each exercise works.

```python
import pandas as pd

if 'transformer' not in globals():
    from mage_ai.data_preparation.decorators import transformer
if 'test' not in globals():
    from mage_ai.data_preparation.decorators import test

# Exercise name -> body part. Keys must match the Exercise Name values exactly
# (note the trailing spaces in two of them). Labels are in Portuguese:
# Pernas = legs, Peitoral = chest, Costas = back, Bracos = arms,
# Ombros = shoulders, Abdomen = abs; "Agachamento Pausado" = paused squat.
body_part = {
    'Squat (Barbell)': 'Pernas',
    'Bench Press (Barbell)': 'Peitoral',
    'Deadlift (Barbell)': 'Costas',
    'Triceps Pushdown (Cable - Straight Bar)': 'Bracos',
    'Bent Over Row (Barbell)': 'Costas',
    'Leg Press': 'Pernas',
    'Overhead Press (Barbell)': 'Ombros',
    'Romanian Deadlift (Barbell)': 'Costas',
    'Lat Pulldown (Machine)': 'Costas',
    'Bench Press (Dumbbell)': 'Peitoral',
    'Skullcrusher (Dumbbell)': 'Bracos',
    'Lying Leg Curl (Machine)': 'Pernas',
    'Hammer Curl (Dumbbell)': 'Bracos',
    'Overhead Press (Dumbbell)': 'Ombros',
    'Lateral Raise (Dumbbell)': 'Ombros',
    'Chest Press (Machine)': 'Peitoral',
    'Incline Bench Press (Barbell)': 'Peitoral',
    'Hip Thrust (Barbell)': 'Pernas',
    'Agachamento Pausado ': 'Pernas',
    'Larsen Press': 'Peitoral',
    'Triceps Dip': 'Bracos',
    'Farmers March ': 'Abdomen',
    'Lat Pulldown (Cable)': 'Costas',
    'Face Pull (Cable)': 'Ombros',
    'Stiff Leg Deadlift (Barbell)': 'Pernas',
    'Bulgarian Split Squat': 'Pernas',
    'Front Squat (Barbell)': 'Pernas',
    'Incline Bench Press (Dumbbell)': 'Peitoral',
    'Reverse Fly (Dumbbell)': 'Ombros',
    'Push Press': 'Ombros',
    'Good Morning (Barbell)': 'Costas',
    'Leg Extension (Machine)': 'Pernas',
    'Standing Calf Raise (Smith Machine)': 'Pernas',
    'Skullcrusher (Barbell)': 'Bracos',
    'Strict Military Press (Barbell)': 'Ombros',
    'Seated Leg Curl (Machine)': 'Pernas',
    'Bench Press - Close Grip (Barbell)': 'Peitoral',
    'Hip Adductor (Machine)': 'Pernas',
    'Deficit Deadlift (Barbell)': 'Pernas',
    'Sumo Deadlift (Barbell)': 'Costas',
    'Box Squat (Barbell)': 'Pernas',
    'Seated Row (Cable)': 'Costas',
    'Bicep Curl (Dumbbell)': 'Bracos',
    'Spotto Press': 'Peitoral',
    'Incline Chest Fly (Dumbbell)': 'Peitoral',
    'Incline Row (Dumbbell)': 'Costas',
}


@transformer
def transform(data, *args, **kwargs):
    # Keep only the columns we need; .copy() avoids pandas'
    # SettingWithCopyWarning when the new column is added below
    strong_data = data[['Date', 'Workout Name', 'Exercise Name', 'Weight',
                        'Reps', 'Workout Duration']].copy()
    # Look up each exercise's body part; names missing from the dict become NaN
    strong_data['Body part'] = strong_data['Exercise Name'].map(body_part)
    return strong_data


@test
def test_output(output, *args) -> None:
    assert output is not None, 'The output is undefined'
```
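Because Series.map with a dictionary returns NaN for any key it cannot find, a new exercise (or a stray trailing space) silently produces an empty Body part. A minimal sketch for listing the unmapped names, assuming a trimmed, hypothetical version of the dictionary and made-up rows:

```python
import pandas as pd

# Trimmed, hypothetical version of the body_part dictionary.
body_part = {
    "Squat (Barbell)": "Pernas",
    "Bench Press (Barbell)": "Peitoral",
    "Deadlift (Barbell)": "Costas",
}

# Made-up rows: "Pull Up" is missing from the dict, and
# "Squat (Barbell) " has a trailing space, so neither will match.
df = pd.DataFrame(
    {"Exercise Name": ["Squat (Barbell)", "Pull Up", "Deadlift (Barbell)", "Squat (Barbell) "]}
)

df["Body part"] = df["Exercise Name"].map(body_part)

# Collect the distinct exercise names that got no body part.
unmapped = sorted(df.loc[df["Body part"].isna(), "Exercise Name"].unique())
print(unmapped)  # ['Pull Up', 'Squat (Barbell) ']
```

Inside Mage, a similar check could live in the transformer's @test function, for example by asserting that output['Body part'].notna().all() holds.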

An interesting feature of Mage is that we can visualize the changes we are making inside the Transformer block: with the Add chart option, we can generate a pie chart of the Body part column.
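A pie chart of a categorical column is essentially the row count per category. Assuming that is how the chart aggregates Body part, the same numbers can be reproduced in pandas with value_counts(); the rows below are invented for illustration.

```python
import pandas as pd

# Made-up transformer output, reduced to the column the chart uses.
strong_data = pd.DataFrame(
    {"Body part": ["Pernas", "Pernas", "Peitoral", "Costas", "Pernas", "Peitoral"]}
)

# Number of rows (sets) per body part, sorted from most to least frequent.
counts = strong_data["Body part"].value_counts()

# Share of the total as a percentage, rounded to one decimal place.
shares = (counts / counts.sum() * 100).round(1)
print(shares.to_dict())  # {'Pernas': 50.0, 'Peitoral': 33.3, 'Costas': 16.7}
```

Passing dropna=False to value_counts() would also show how many rows have no body part at all.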

Load

Now it is time to load the data into DuckDB. DuckDB is already available in the Docker image, so we just have to add another block to our pipeline. Let's add a Data Exporter block with a SQL template so we can create a table and insert the data.

```sql
CREATE OR REPLACE TABLE powerlifting
(
    _date DATE,
    workout_name STRING,
    exercise_name STRING,
    weight STRING,
    reps STRING,
    workout_duration STRING,
    body_part STRING
);

INSERT INTO powerlifting SELECT * FROM {{ df_1 }};
```

Conclusion

Mage is a powerful tool for orchestrating pipelines, and it provides a complete set of templates for developing specific ETL tasks. In this post, we gave a brief explanation of how to get started building data pipelines with Mage. In future posts, we are going to explore more of this amazing framework.
