Local GPT with Ollama and Next.js

Introduction

With today's AI advancements, it's easy to set up a generative AI model on your own computer and build a chatbot with it.

In this article, we will see how you can set up a chatbot on your system using Ollama and Next.js.

Set up Ollama

Let's start by setting up Ollama on our system. Visit ollama.com and download it for your OS. This makes the ollama command available in the terminal/command prompt.

Check the installed Ollama version with the command ollama -v.
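Besides the CLI, Ollama runs a local server with a REST API (by default at http://localhost:11434), and that API is what the JavaScript packages used later in this tutorial talk to. As a minimal sketch, assuming that default address and Node.js 18 or later for the global fetch, the version can also be read from TypeScript through GET /api/version. The helper names are hypothetical:

```typescript
// Assumption: Ollama is listening on its default local address.
const OLLAMA_URL = "http://localhost:11434";

// Shape of the JSON returned by GET /api/version: { "version": "<x.y.z>" }
interface VersionResponse {
  version: string;
}

// Hypothetical helper: validate the parsed JSON and pull out the version string.
function parseVersion(body: unknown): string {
  const v = (body as Partial<VersionResponse> | null)?.version;
  if (typeof v !== "string") {
    throw new Error("Unexpected /api/version response");
  }
  return v;
}

// Hypothetical helper: ask the running Ollama server for its version.
// Needs Node 18+ (global fetch) and a running Ollama instance.
async function getOllamaVersion(baseUrl: string = OLLAMA_URL): Promise<string> {
  const res = await fetch(`${baseUrl}/api/version`);
  if (!res.ok) {
    throw new Error(`Ollama returned HTTP ${res.status}`);
  }
  return parseVersion(await res.json());
}
```

Calling getOllamaVersion().then(console.log) while Ollama is running should print the same version number that ollama -v reports.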

Check out the list of available models on the Ollama library page (ollama.com/library).

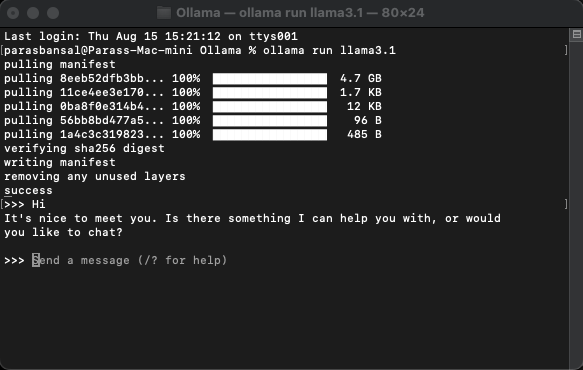

Download and run a model

To download and run a model, use the command ollama run followed by the model name. If the model isn't on your system yet, it is downloaded first.

Example: ollama run llama3.1 or ollama run gemma2

You will be able to chat with the model right in the terminal.
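The route handler later in this tutorial refers to the model by name ("llama3.1"), so that model has to be downloaded first. Ollama's REST API lists the locally installed models at GET /api/tags, where a model pulled as llama3.1 shows up with its default tag, as llama3.1:latest. A minimal sketch of checking for a model, assuming the default server address; withDefaultTag, hasModel and isModelInstalled are hypothetical helpers, and only the name field of each entry is used:

```typescript
// Assumption: Ollama is listening on its default local address.
const OLLAMA_URL = "http://localhost:11434";

// Only the field we need from each entry returned by GET /api/tags.
interface TagsResponse {
  models: { name: string }[];
}

// Hypothetical helper: "llama3.1" -> "llama3.1:latest"; explicit tags are kept.
// Only the part after the last "/" is checked, so "host:5000/ns/model" still gets a tag.
function withDefaultTag(name: string): string {
  const base = name.slice(name.lastIndexOf("/") + 1);
  return base.includes(":") ? name : `${name}:latest`;
}

// Hypothetical helper: is `model` among the installed models in `tags`?
function hasModel(tags: TagsResponse, model: string): boolean {
  const wanted = withDefaultTag(model);
  return tags.models.some((m) => withDefaultTag(m.name) === wanted);
}

// Hypothetical helper: fetch the installed models from the running server (Node 18+ for fetch).
async function isModelInstalled(model: string, baseUrl: string = OLLAMA_URL): Promise<boolean> {
  const res = await fetch(`${baseUrl}/api/tags`);
  if (!res.ok) {
    throw new Error(`Ollama returned HTTP ${res.status}`);
  }
  return hasModel((await res.json()) as TagsResponse, model);
}
```

For instance, isModelInstalled("llama3.1") should resolve to true once ollama run llama3.1 has finished downloading the model. The same list is available in the terminal with ollama list.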

Set up the web application

Basic setup for Next.js

- Download and install the latest version of Node.js.

- Navigate to the folder of your choice and run npx create-next-app@latest to generate a Next.js project.

- The CLI asks a few questions to generate the boilerplate code. For this tutorial, we will keep all the defaults.

- Open the newly created project in your code editor of choice. We are going to use VS Code.

Installing dependencies

A few npm packages need to be installed to use Ollama in the app:

- ai: the AI SDK from Vercel.

- ollama: the Ollama JavaScript library, which provides the easiest way to integrate a JavaScript project with Ollama.

- ollama-ai-provider: connects the ai package to Ollama.

- react-markdown: the chat responses are formatted as Markdown, so we use the react-markdown package to render them.

To install these dependencies, run npm i ai ollama ollama-ai-provider react-markdown.

Create chat page

Under src/app there is a file named page.tsx.

Let's remove everything in it and start with the basic functional component:

src/app/page.tsx

```tsx
export default function Home() {
  return (
    <main>
      {/* Code here... */}
    </main>
  );
}
```

Next, import the useChat hook from ai/react and the Markdown component from react-markdown:

```tsx
"use client";

import { useChat } from "ai/react";
import Markdown from "react-markdown";
```

Because useChat is a hook, this page has to be a client component, which is what the "use client" directive at the top of the file does.

Tip: You can move the chat into a separate component and render it in page.tsx to limit the use of client components.

Inside the component, get messages, input, handleInputChange and handleSubmit from the useChat hook:

```tsx
const { messages, input, handleInputChange, handleSubmit } = useChat();
```

In the JSX, create a form with an input field to capture the user's message and start the conversation.

The nice part is that we don't need to write the change handler or keep a state for the input value; the useChat hook provides both.
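To make concrete what the hook saves us from writing, here is a deliberately simplified, hypothetical model of the state it manages, in plain TypeScript. It is not the AI SDK's implementation (the real hook also streams the reply, tracks loading and error state, and more); it only mirrors the idea that the input value lives in state and that submitting appends the user's message and sends the whole history to /api/chat. All names below are illustrative:

```typescript
// Hypothetical, simplified types; not the AI SDK's own definitions.
type Role = "user" | "assistant";

interface ChatMessage {
  role: Role;
  content: string;
}

interface ChatState {
  input: string;
  messages: ChatMessage[];
}

// Roughly the job of handleInputChange: keep the typed value in state.
function onInputChange(state: ChatState, value: string): ChatState {
  return { ...state, input: value };
}

// Roughly the job of handleSubmit: append the user's message, clear the input,
// and build the { messages } body that is POSTed to /api/chat.
// This sketch simply ignores blank input.
function onSubmit(state: ChatState): { state: ChatState; body: { messages: ChatMessage[] } | null } {
  if (state.input.trim() === "") {
    return { state, body: null };
  }
  const messages: ChatMessage[] = [...state.messages, { role: "user", content: state.input }];
  return { state: { input: "", messages }, body: { messages } };
}
```

Because every submit sends the full history, the request grows with the conversation, which matters for the API route below.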

We can display the messages by looping through the messages array.

```tsx
messages.map((m, i) => <div key={i}>{m.content}</div>)
```

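Here is a version that renders each message based on the sender's role and shows a heading while the chat is still empty:

```tsx
{messages.length ? (
  messages.map((m, i) =>
    m.role === "user" ? (
      <div key={i}>{m.content} <span>You</span></div>
    ) : (
      <div key={i}><Markdown>{m.content}</Markdown> <span>AI</span></div>
    )
  )
) : (
  <h1>Local AI Chat</h1>
)}
```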

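Let's take a look at the whole file:

src/app/page.tsx

```tsx
"use client";

import { useChat } from "ai/react";
import Markdown from "react-markdown";

export default function Home() {
  const { messages, input, handleInputChange, handleSubmit } = useChat();

  return (
    <main>
      {messages.length ? (
        messages.map((m, i) =>
          m.role === "user" ? (
            <div key={i}>{m.content} <span>You</span></div>
          ) : (
            <div key={i}><Markdown>{m.content}</Markdown> <span>AI</span></div>
          )
        )
      ) : (
        <h1>Local AI Chat</h1>
      )}
      {/* useChat provides the input value, change handler and submit handler */}
      <form onSubmit={handleSubmit}>
        <input value={input} onChange={handleInputChange} />
      </form>
    </main>
  );
}
```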

With this, the frontend part is complete. Now let's handle the API.

Handling API

Let's start by creating route.ts inside src/app/api/chat.

Following the Next.js App Router file conventions, this file handles requests to the localhost:3000/api/chat endpoint, which is where useChat sends messages by default.

src/app/api/chat/route.ts

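```ts
import { createOllama } from "ollama-ai-provider";
import { streamText } from "ai";

// Provider instance that talks to the local Ollama server
const ollama = createOllama();

// Handles POST requests to /api/chat
export async function POST(req: Request) {
  // useChat sends the whole conversation so far as `messages`
  const { messages } = await req.json();

  // Generate a reply with the locally installed llama3.1 model and stream it
  const result = await streamText({
    model: ollama("llama3.1"),
    messages,
  });

  // Send the stream back in the format useChat understands
  return result.toDataStreamResponse();
}
```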

The code above uses the Ollama provider and the Vercel AI SDK to stream the model's response back to the client.

- createOllama creates an Ollama provider instance that communicates with the local Ollama server and the models installed on your system.

- The exported POST function is the route handler for POST requests to the /api/chat endpoint.

- The request body contains the list of all previous messages, so it's a good idea to limit it; otherwise performance will degrade as the conversation grows. In this example, the ollama function takes "llama3.1" as the model that generates the response from the messages array. The name must match a model you have already downloaded with ollama run.
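One simple way to limit the history is to forward only the most recent messages to streamText, while always keeping any system message. A minimal sketch; limitMessages is a hypothetical helper, the message type is simplified, and the default of 20 messages is an arbitrary assumption:

```typescript
// Simplified message shape; the AI SDK's own message types carry more fields.
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// Hypothetical helper: system messages first, then the last `maxRecent`
// non-system messages in their original order.
function limitMessages(messages: ChatMessage[], maxRecent = 20): ChatMessage[] {
  const system = messages.filter((m) => m.role === "system");
  const rest = messages.filter((m) => m.role !== "system");
  // Math.max avoids slice(-0), which would return the whole array
  return [...system, ...rest.slice(Math.max(0, rest.length - maxRecent))];
}
```

Inside the route handler this would be passed to streamText as messages: limitMessages(messages). Counting messages is the crudest option; trimming by token count against the model's context window is more precise but requires a tokenizer.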

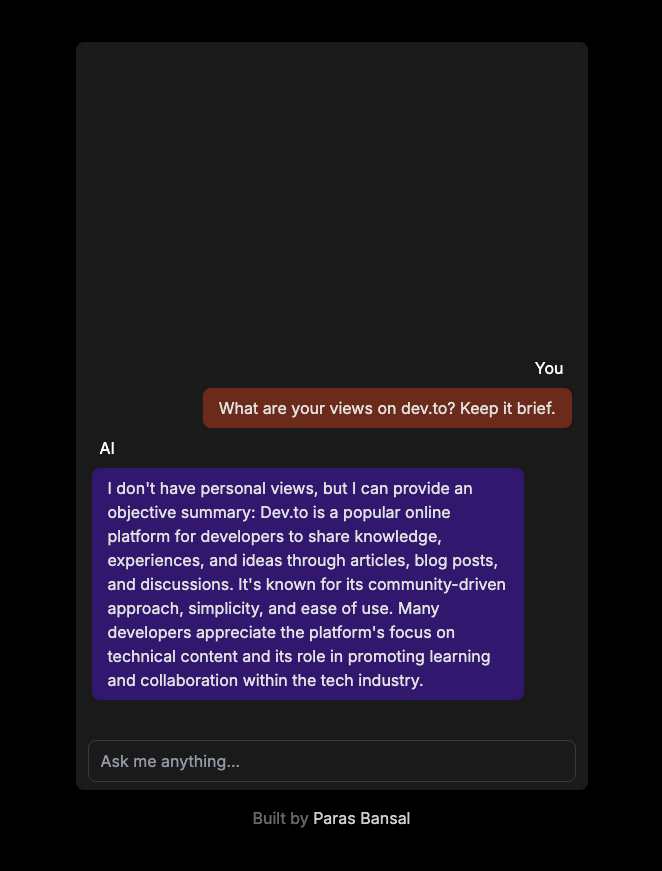

Generative AI on your system

Run npm run dev to start the server in development mode.

Open the browser and go to localhost:3000 to see the results.

If everything is configured properly, you will be able to talk to your very own chatbot.
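You can also call the API route directly, for example to check that it works without the UI. Note that toDataStreamResponse() does not return plain text: in the AI SDK versions this tutorial targets, the body follows the SDK's data stream protocol, where each text chunk is a line of the form 0:"…" (a JSON-encoded string) and other line prefixes carry metadata. A minimal sketch under that assumption, reading the whole response at once rather than incrementally; extractText and ask are hypothetical helpers:

```typescript
// Hypothetical helper: collect the text parts (lines starting with "0:") from a
// data stream body and ignore every other part type.
function extractText(body: string): string {
  return body
    .split("\n")
    .filter((line) => line.startsWith("0:"))
    .map((line) => JSON.parse(line.slice(2)) as string)
    .join("");
}

// Hypothetical helper: POST a single question to the local route and return the reply.
// Assumes the dev server from `npm run dev` is running on port 3000 (Node 18+ for fetch).
async function ask(question: string, url = "http://localhost:3000/api/chat"): Promise<string> {
  const res = await fetch(url, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    // Same shape the route handler reads: { messages }
    body: JSON.stringify({ messages: [{ role: "user", content: question }] }),
  });
  if (!res.ok) {
    throw new Error(`HTTP ${res.status}`);
  }
  return extractText(await res.text());
}
```

For example, ask("Why is the sky blue?").then(console.log) prints the whole answer once the stream has finished. Reading res.body chunk by chunk would show the reply as it streams, but then a line can be split across chunks and has to be buffered until its newline arrives.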

You can find the source code here: https://github.com/parasbansal/ai-chat

Let me know in the comments if you have any questions, and I'll try to answer them.
