Lambda LLRT

Note: everything I post here is meant to refresh and maintain my own knowledge, and I hope it helps you on your learning journey too.

This post is live and will be updated periodically.

If you find any flaws or notice that something is missing, help me improve :)

Have you ever stopped to think about how much more performance is being demanded of our applications?

Every day we are challenged to make them faster, which leads us to evaluate solutions and architectures that help us get there.

So the idea here is a short post about a new development that can considerably improve the performance of serverless applications on AWS Lambda: LLRT JavaScript.

LLRT JavaScript (Low Latency Runtime JavaScript)

It is a new JavaScript runtime being developed by the AWS team.

It is currently experimental, and the team is working toward a stable release by the end of 2024.

Here is how AWS describes it:

LLRT (Low Latency Runtime) is a lightweight JavaScript runtime designed to address the growing demand for fast and efficient Serverless applications. LLRT offers up to over 10x faster startup and up to 2x overall lower cost compared to other JavaScript runtimes running on AWS Lambda

It's built in Rust, utilizing QuickJS as JavaScript engine, ensuring efficient memory usage and swift startup.

Note that the headline claim is about startup: up to over 10x faster startup (and up to 2x lower overall cost) than other JavaScript runtimes on AWS Lambda.

All of this is built with Rust, a high-performance language, and QuickJS, a lightweight JavaScript engine designed to be small and efficient while supporting recent ECMAScript specifications, including modern features such as classes, async/await and modules. LLRT also does not use a JIT (Just-In-Time) compiler: instead of spending resources on JIT compilation, it keeps them for executing the code itself.

But hold on, not everything is rosy: it's all about tradeoffs (horrible pun, I know lol).

So there are some important points to consider before adopting LLRT JS. Here is what AWS says:

There are many cases where LLRT shows notable performance drawbacks compared with JIT-powered runtimes, such as large data processing, Monte Carlo simulations or performing tasks with hundreds of thousands or millions of iterations. LLRT is most effective when applied to smaller Serverless functions dedicated to tasks such as data transformation, real time processing, AWS service integrations, authorization, validation etc. It is designed to complement existing components rather than serve as a comprehensive replacement for everything. Notably, given its supported APIs are based on Node.js specification, transitioning back to alternative solutions requires minimal code adjustments.

Also, LLRT JS is not meant to replace Node.js, and it never will be.

Look:

LLRT only supports a fraction of the Node.js APIs. It is NOT a drop in replacement for Node.js, nor will it ever be. Below is a high level overview of partially supported APIs and modules. For more details consult the API documentation.
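Since only part of the Node.js API surface is available, one cheap habit is to feature-detect what a runtime actually exposes before depending on it. The sketch below is a hypothetical helper (`describeRuntimeGlobals` is my own name, not an LLRT API), and the list of globals it checks is an illustrative assumption; the LLRT API documentation remains the authoritative source for what is supported.

```javascript
// Hypothetical helper: reports which globals the current runtime exposes as
// functions (classes such as URL count too). The default names are an
// illustrative choice, not LLRT's documented API surface.
function describeRuntimeGlobals(
  names = ["fetch", "URL", "TextEncoder", "setTimeout", "structuredClone"]
) {
  return Object.fromEntries(
    names.map((name) => [name, typeof globalThis[name] === "function"])
  );
}

// Logging this once from a handler shows what is available in that runtime.
console.log(describeRuntimeGlobals());
```

Running the same check under both runtimes makes it easier to spot which calls would need a fallback before moving code between them.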

Evaluative Tests

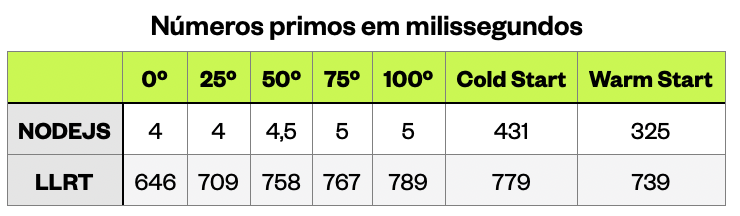

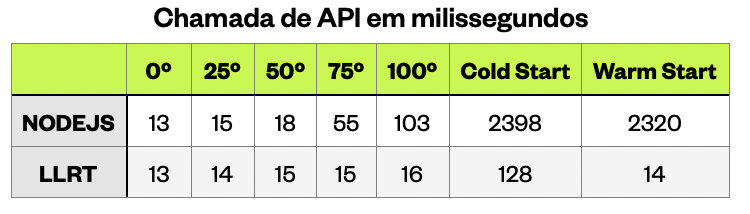

Taking into account the use cases AWS itself describes, we will run two tests to evaluate and compare LLRT with Node.js: one calculates prime numbers and the other makes a simple API call.

Why use the calculation of prime numbers?

Identifying prime numbers is processing-heavy because verifying primality requires many mathematical operations (divisions), primes are irregularly distributed, and the amount of work grows with the size of the numbers. Together, these factors make primality checking and the search for primes a computationally intensive task, especially at large scale, which is exactly the kind of iteration-heavy workload AWS says favors JIT-powered runtimes.
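To make that cost concrete, here is a minimal sketch that counts primes by trial division and tallies the modulo operations it performs along the way. Trial division is my assumption here, and `countPrimesWithCost` is a hypothetical name used only for illustration.

```javascript
// Counts primes up to `limit` with plain trial division (an assumption, not
// necessarily the test's exact algorithm) and tallies the modulo operations.
function countPrimesWithCost(limit) {
  let primes = 0;
  let divisions = 0;
  for (let n = 2; n <= limit; n++) {
    let isPrime = true;
    // A composite n always has a divisor <= sqrt(n), so stop there.
    for (let d = 2; d * d <= n; d++) {
      divisions++;
      if (n % d === 0) {
        isPrime = false;
        break;
      }
    }
    if (isPrime) primes++;
  }
  return { primes, divisions };
}

for (const limit of [1000, 10000, 100000]) {
  const { primes, divisions } = countPrimesWithCost(limit);
  console.log(`limit=${limit} primes=${primes} divisions=${divisions}`);
}
```

Running it for growing limits shows the division count growing much faster than the limit itself: long, tight loops like these are where a JIT has room to pay off.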

Hands on then...

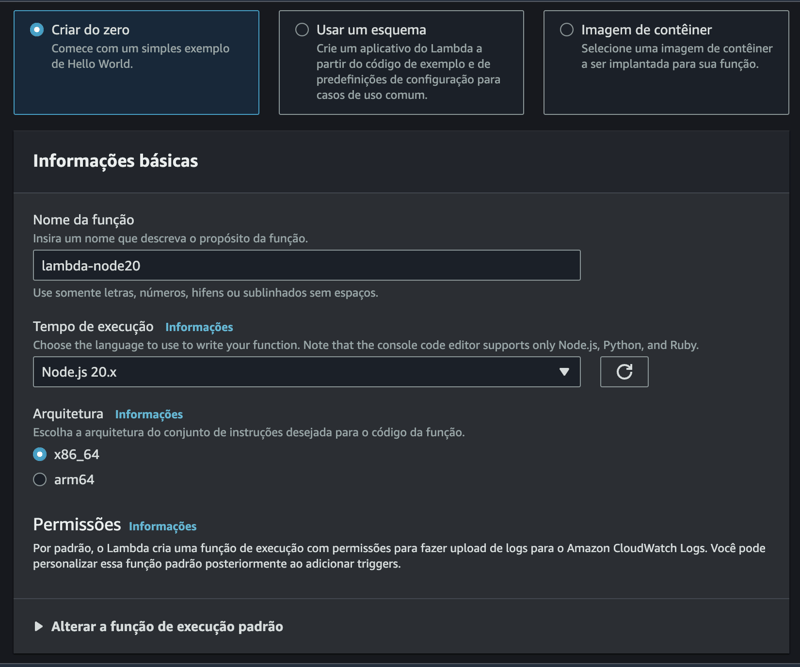

Create the first Lambda function, using Node.js:

Now, let's create the function with LLRT JS. I chose to use the layer option.

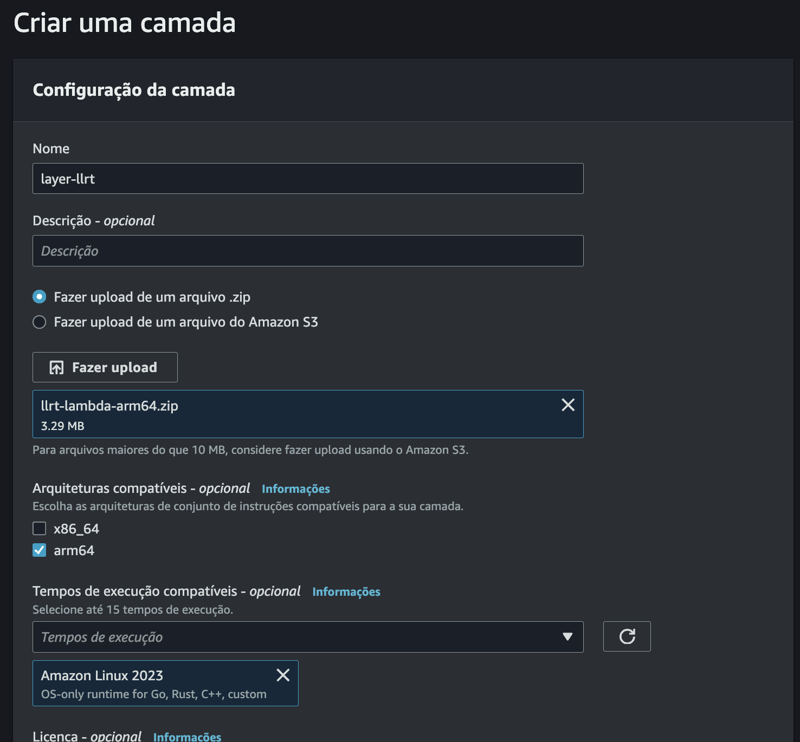

Create the layer:

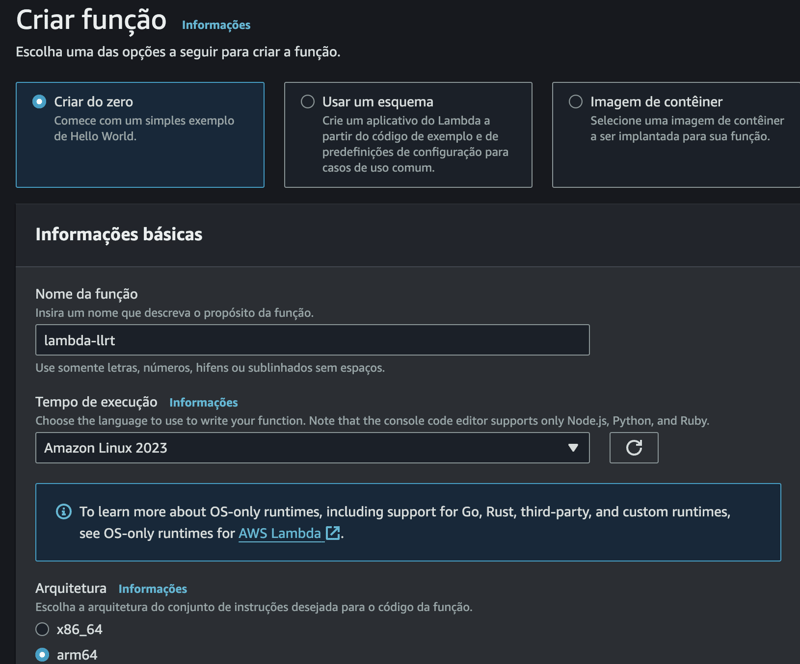

Then create the function:

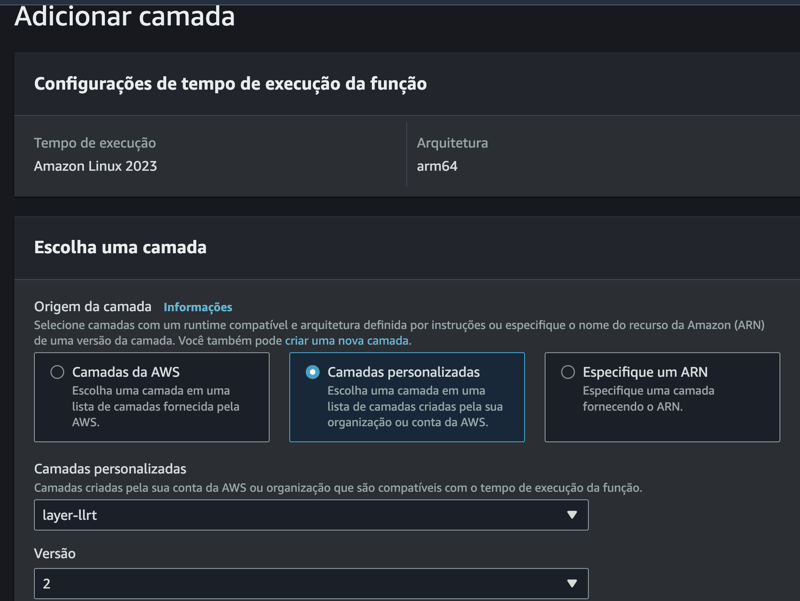

Finally, add the layer to the LLRT JS function you just created:

For the prime number test, we will use the following code:

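```javascript
let isLambdaWarm = false

export async function handler(event) {
  const limit = event.limit || 100000; // Set a high limit to increase the complexity
  const primes = [];
  const startTime = Date.now()

  // The original listing is cut off here; the rest of this handler is a
  // reconstruction assuming trial division and the API test's response shape.
  const isPrime = (num) => {
    if (num < 2) return false;
    for (let i = 2; i * i <= num; i++) {
      if (num % i === 0) return false;
    }
    return true;
  };

  for (let n = 2; n <= limit; n++) if (isPrime(n)) primes.push(n);

  const endTime = Date.now() - startTime
  const body = JSON.stringify({ primesFound: primes.length, executionTime: `${endTime} ms`, isLambdaWarm: `${isLambdaWarm}` })
  isLambdaWarm = true
  return { statusCode: 200, body };
}
```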

And for API testing, we will use the code below:

```javascript
let isLambdaWarm = false

export async function handler(event) {
  const url = event.url || 'https://jsonplaceholder.typicode.com/posts/1'
  console.log('starting fetch url', { url })
  const startTime = Date.now()
  let resp;

  try {
    const response = await fetch(url)
    const data = await response.json()
    const endTime = Date.now() - startTime
    resp = {
      statusCode: 200,
      body: JSON.stringify({
        executionTime: `${endTime} ms`,
        isLambdaWarm: `${isLambdaWarm}`
      }),
    }
  } catch (error) {
    resp = {
      statusCode: 500,
      body: JSON.stringify({
        message: 'Error fetching data',
        error: error.message,
      }),
    }
  }

  if (!isLambdaWarm) {
    isLambdaWarm = true
  }

  return resp;
};
```
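The `isLambdaWarm` flag works because Lambda runs module-level code once per execution environment and then reuses that environment for later invocations; a brand-new environment (for example, when Lambda scales out) starts cold again. The sketch below simulates that lifecycle locally, with a closure standing in for the module scope. `createExecutionEnvironment` is a hypothetical name for illustration, not a Lambda API.

```javascript
// Hypothetical simulation of one Lambda execution environment: the outer
// function plays the role of module-level code, which runs once during init
// (the cold start), and the returned handler is reused by later invocations.
function createExecutionEnvironment() {
  let isLambdaWarm = false; // module-scope state that survives between invocations

  return async function handler() {
    const startTime = Date.now();
    // ... the work being measured would run here ...
    const result = {
      executionTime: `${Date.now() - startTime} ms`,
      isLambdaWarm: `${isLambdaWarm}`,
    };
    isLambdaWarm = true; // later calls to this environment are warm starts
    return result;
  };
}

const handler = createExecutionEnvironment();
handler()
  .then((first) => {
    console.log(first.isLambdaWarm); // "false": first call in this environment
    return handler();
  })
  .then((second) => console.log(second.isLambdaWarm)); // "true": reused environment
```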

Test results

The goal here is educational, so each test's sample is small: 15 warm-start invocations and 1 cold-start invocation.
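With only 15 warm samples, a single slow invocation can noticeably skew an average, which is why outliers are removed before comparing. The post doesn't say which rule was used, so this sketch assumes Tukey's fences (drop values below Q1 - 1.5 * IQR or above Q3 + 1.5 * IQR); `summarizeWithoutOutliers` is a hypothetical helper. The single cold-start sample is best reported on its own rather than mixed into this summary.

```javascript
// Hypothetical helper: the post does not say how outliers were removed, so
// this assumes Tukey's fences, dropping values outside
// [Q1 - 1.5 * IQR, Q3 + 1.5 * IQR].

// Linear-interpolated quantile of an already sorted, non-empty array.
function quantile(sorted, q) {
  const pos = (sorted.length - 1) * q;
  const lower = Math.floor(pos);
  const upper = Math.ceil(pos);
  return sorted[lower] + (sorted[upper] - sorted[lower]) * (pos - lower);
}

function summarizeWithoutOutliers(samples) {
  const sorted = [...samples].sort((a, b) => a - b); // numeric, not lexicographic
  const q1 = quantile(sorted, 0.25);
  const q3 = quantile(sorted, 0.75);
  const iqr = q3 - q1;
  const kept = sorted.filter((x) => x >= q1 - 1.5 * iqr && x <= q3 + 1.5 * iqr);
  const mean = kept.reduce((sum, x) => sum + x, 0) / kept.length;
  return { kept, removed: sorted.length - kept.length, mean };
}
```

Applying it separately to each runtime's warm-start timings keeps the comparison from hinging on one unusually slow invocation.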

Memory consumption

LLRT JS: both tests used the same amount of memory, 23 MB.

Node.js: in the prime number test, memory usage started at 69 MB and rose to 106 MB.

In the API test, it ranged from a minimum of 86 MB to a maximum of 106 MB.
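A common place to read these numbers is the REPORT line Lambda writes to CloudWatch Logs after each invocation, which includes Max Memory Used and, when the invocation included a cold start, Init Duration. Assuming that tab-separated "Name: value unit" layout, a minimal parser could look like this (`parseReportLine` is a hypothetical helper, not part of any AWS SDK):

```javascript
// Hypothetical helper: parses a Lambda REPORT log line, assuming the
// tab-separated "Name: value unit" layout Lambda writes to CloudWatch Logs.
function parseReportLine(line) {
  const fields = {};
  for (const part of line.split("\t")) {
    // Metrics look like "Duration: 12.34 ms" or "Max Memory Used: 23 MB".
    const match = part.trim().match(/^([A-Za-z ]+): ([\d.]+) (ms|MB)$/);
    if (match) fields[match[1]] = Number(match[2]);
  }
  return {
    durationMs: fields["Duration"],
    maxMemoryUsedMb: fields["Max Memory Used"],
    initDurationMs: fields["Init Duration"],
    // Init Duration only appears when the invocation included a cold start.
    coldStart: "Init Duration" in fields,
  };
}
```

Collecting `maxMemoryUsedMb` across all 16 invocations of each function gives minimum and maximum figures like the ones above, and `coldStart` separates the single cold sample from the warm ones.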

Execution time

After removing the outliers, these were the results:

Final report

Memory consumption: LLRT used considerably less memory than Node.js (23 MB in both tests, versus 69 to 106 MB for Node.js).

Performance: in the processing-heavy scenario (prime numbers), Node.js performed much better than LLRT, in both cold and warm starts.

In the lighter scenario (the API call), LLRT had some advantage, especially on cold start.

Let's wait for the stable release and hope for even more significant improvements. Either way, it's great to see how flexible JavaScript is and how much it still has to offer.

I hope you enjoyed this post and that it helped you understand something better, or even opened paths to new knowledge. I count on your criticism and suggestions to improve the content and keep it up to date for the community.
