Why did my Google Colab session crash while running the Llama model?

I am trying to use the meta-llama/Llama-2-7b-hf model and run it myself, but the session crashed during the process.

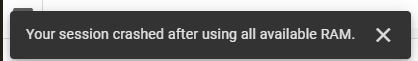

I am running the model on Google Colab, using an access token from Hugging Face and their transformers library. Initially, I used the T4 GPU runtime, which provided 12.7 GB of system RAM, 15.0 GB of GPU RAM, and 78.2 GB of disk space. Despite these resources, my session crashed, and I encountered the following error:

Subsequently, I switched to the TPU v2 runtime, which offers 334.6 GB of system RAM and 225.3 GB of disk space, but the session still crashed.

Here is my code:

    !pip install transformers
    !pip install --upgrade transformers

    from huggingface_hub import login
    login(token='Access Token From Hugging Face')

    import pandas as pd
    from transformers import AutoTokenizer, AutoModelForSequenceClassification, TrainingArguments, Trainer
    from torch.utils.data import Dataset

    # Load the pre-trained Llama-2-7b model
    model_name = "meta-llama/Llama-2-7b-hf"
    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForSequenceClassification.from_pretrained(model_name)
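As a rough sanity check on the T4 figures quoted above, the sketch below estimates how much memory the model weights alone might need. It rests on assumptions that are not stated in the question: a nominal 7e9 parameters (taken from the "7b" in the model name), 4 bytes per parameter for float32 (which is what `from_pretrained` is assumed to use when no dtype is passed), and 1 GB = 1e9 bytes. The helper name `weights_gb` is hypothetical. It ignores activations, optimizer state, and any temporary copies made while loading, so real usage would likely be higher.

```python
# Rough estimate of the memory needed just to hold the model weights.
# Assumption: ~7e9 parameters (nominal count from the "7b" in the model name).
NUM_PARAMS = 7e9

# Bytes used per parameter for two common floating-point dtypes.
BYTES_PER_PARAM = {"float32": 4, "float16": 2}

# Figures quoted in the question for the T4 runtime, in GB.
T4_SYSTEM_RAM_GB = 12.7
T4_GPU_RAM_GB = 15.0


def weights_gb(num_params: float, dtype: str) -> float:
    """Return the approximate size of the weights in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


for dtype in BYTES_PER_PARAM:
    size = weights_gb(NUM_PARAMS, dtype)
    print(
        f"{dtype}: ~{size:.0f} GB of weights | "
        f"fits in T4 system RAM: {size < T4_SYSTEM_RAM_GB} | "
        f"fits in T4 GPU RAM: {size < T4_GPU_RAM_GB}"
    )
```

Under these assumptions, float32 weights come to roughly 28 GB, which exceeds both the 12.7 GB of system RAM and the 15.0 GB of GPU RAM on the T4 runtime. This only suggests one possible cause for the T4 crash and does not by itself explain the crash on the TPU v2 runtime.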
