Evaluating A Machine Learning Classification Model

Outline

- What is the goal of model evaluation?

- What is the purpose of model evaluation, and what are some common evaluation procedures?

- How is classification accuracy used, and what are its limitations?

- How does a confusion matrix describe the performance of a classifier?

- What metrics can be computed from a confusion matrix?

The goal of model evaluation is to answer the question:

How do I choose between different models?

The process of evaluating a machine learning model helps determine how reliable and effective the model is for its application. This involves assessing factors such as its performance on chosen metrics and the accuracy of its predictions or decisions.

No matter what model you choose to use, you need a way to choose between models: different model types, tuning parameters, and features. You also need a model evaluation procedure to estimate how well a model will generalize to unseen data. Lastly, you need an evaluation metric to pair with your procedure in order to quantify your model's performance.

Before we proceed, let's review some of the different model evaluation procedures and how they operate.

Model Evaluation Procedures and How They Operate

- Training and testing on the same data

  - Rewards overly complex models that "overfit" the training data and won't necessarily generalize

- Train/test split

  - Split the dataset into two pieces, so that the model can be trained and tested on different data

  - Better estimate of out-of-sample performance, but still a "high variance" estimate

  - Useful due to its speed, simplicity, and flexibility

- K-fold cross-validation

  - Systematically create "K" train/test splits and average the results together

  - Even better estimate of out-of-sample performance

  - Runs "K" times slower than train/test split

From the above, we can deduce that:

Training and testing on the same data is a classic cause of overfitting: you build an overly complex model that won't generalize to new data and is therefore not actually useful.

Train/test split provides a much better estimate of out-of-sample performance.

K-fold cross-validation does better still by systematically creating K train/test splits and averaging the results together.

In summary, train/test split remains a practical alternative to cross-validation because of its speed and simplicity, and it is what we will use in this guide.
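To make the K-fold idea concrete, here is a minimal sketch in plain Python. The helper names `k_fold_indices` and `majority_class`, and the label list `y`, are hypothetical and exist only for illustration. The "model" is a stand-in that always predicts the most frequent training label, so the focus stays on how the K splits are created and averaged.

```python
from statistics import mean

def k_fold_indices(n_samples, k):
    """Yield (train_idx, test_idx) pairs for K contiguous, non-overlapping folds."""
    # Spread any remainder over the first folds so sizes differ by at most one
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, test_idx
        start += size

def majority_class(labels):
    """Stand-in 'model': return the most frequent label in the training fold."""
    return max(set(labels), key=labels.count)

# Hypothetical labels (not the diabetes data): 1 = positive, 0 = negative
y = [0, 1, 0, 0, 1, 0, 1, 0, 0, 0, 1, 0]

fold_scores = []
for train_idx, test_idx in k_fold_indices(len(y), k=4):
    prediction = majority_class([y[i] for i in train_idx])
    correct = sum(1 for i in test_idx if y[i] == prediction)
    fold_scores.append(correct / len(test_idx))

# The cross-validated estimate is the average of the per-fold accuracies
cv_accuracy = mean(fold_scores)
print(fold_scores, cv_accuracy)
```

Each observation lands in exactly one test fold, which is why the averaged score is a steadier estimate than a single split. In practice you would usually shuffle the rows first; this sketch skips that step to keep the folds easy to follow.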

Model Evaluation Metrics:

You will always need an evaluation metric to go along with your chosen procedure, and your choice of metric depends on the problem you are addressing. For classification problems, you can use classification accuracy. But we will focus on other important classification evaluation metrics in this guide.

Before we learn any new evaluation metrics, let's review classification accuracy and talk about its strengths and weaknesses.

Classification accuracy

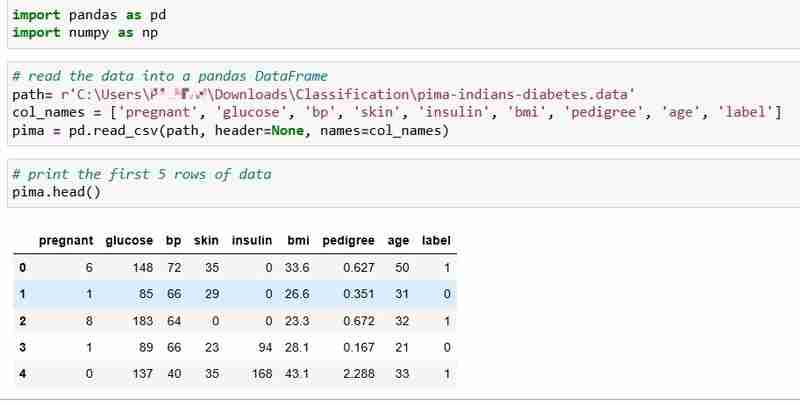

We've chosen the Pima Indians Diabetes dataset for this tutorial, which includes the health data and diabetes status of 768 patients.

Let's read the data and print the first 5 rows. The label column is 1 if the patient has diabetes and 0 if the patient doesn't. We intend to answer the question:

Question: Can we predict the diabetes status of a patient given their health measurements?

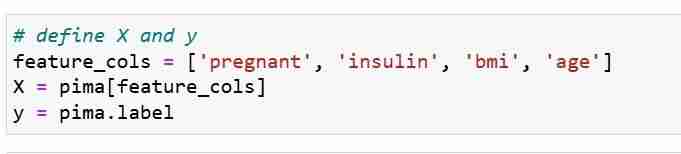

We define our feature matrix X and response vector Y, then use train_test_split to split X and Y into training and testing sets.

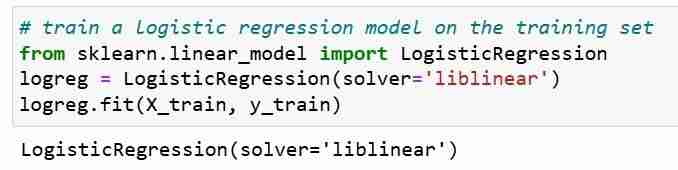

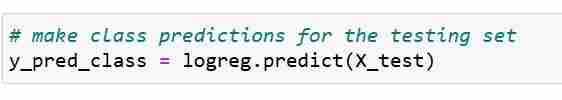
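As a rough illustration of what a train/test split does under the hood, here is a plain-Python sketch. The function `simple_train_test_split`, its `seed` argument, and the toy data are assumptions made for this example; it is not scikit-learn's implementation. The 25% default for `test_size` is chosen to mirror scikit-learn's default.

```python
import random

def simple_train_test_split(X, y, test_size=0.25, seed=0):
    """Shuffle row indices, then hold out a fraction of rows for testing."""
    indices = list(range(len(X)))
    random.Random(seed).shuffle(indices)  # fixed seed makes the split reproducible
    n_test = round(len(X) * test_size)
    test_idx, train_idx = indices[:n_test], indices[n_test:]
    X_train = [X[i] for i in train_idx]
    X_test = [X[i] for i in test_idx]
    y_train = [y[i] for i in train_idx]
    y_test = [y[i] for i in test_idx]
    return X_train, X_test, y_train, y_test

# Hypothetical toy data: 8 rows with 2 features each
X = [[i, i * 2] for i in range(8)]
y = [0, 1, 0, 0, 1, 0, 1, 0]

X_train, X_test, y_train, y_test = simple_train_test_split(X, y)
print(len(X_train), len(X_test))
```

The key point is that features and labels are split with the same shuffled indices, so every row keeps its own label and no row appears in both sets.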

Next, we train a logistic regression model on the training set. During the fit step, the logreg model object learns the relationship between X_train and Y_train. Finally, we make class predictions for the testing set.

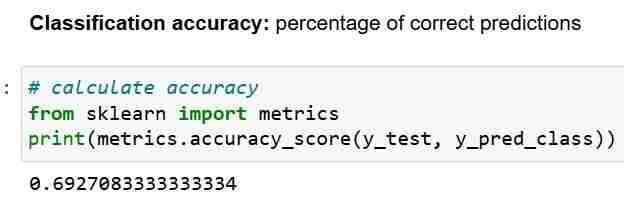

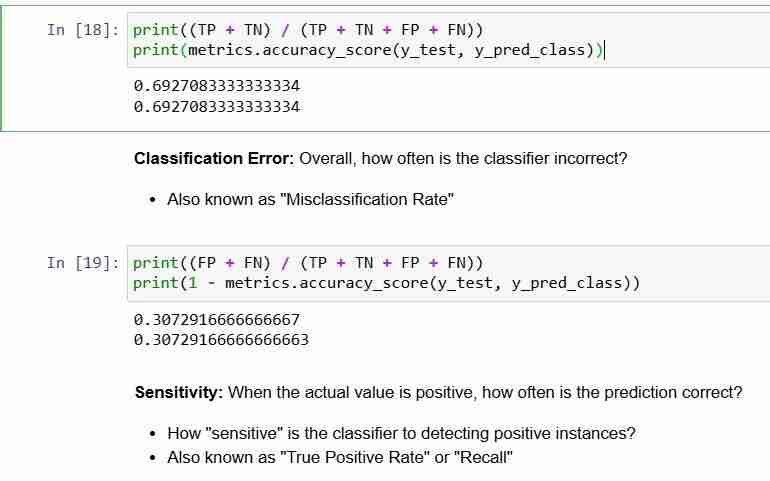

Now that we've made predictions for the testing set, we can calculate the classification accuracy, which is simply the percentage of correct predictions.

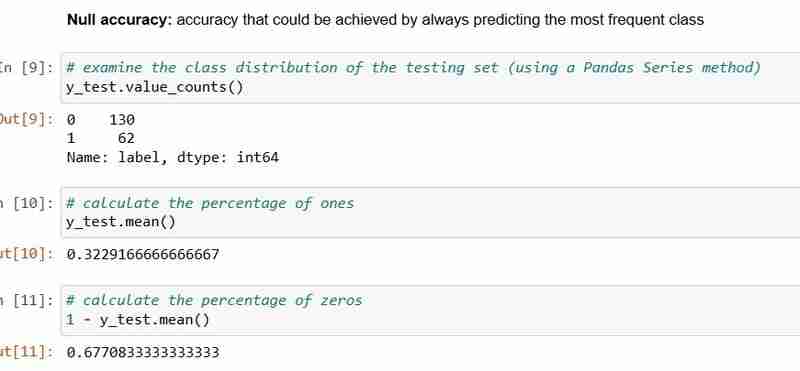
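If it helps to see the arithmetic spelled out, classification accuracy can be computed by hand as sketched below. The function name `accuracy` and the two short label lists are hypothetical; they are not the tutorial's actual predictions.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)

# Hypothetical true labels and predictions for 8 patients
y_test_example = [1, 0, 0, 1, 0, 0, 1, 0]
y_pred_example = [0, 0, 0, 1, 0, 1, 1, 0]

acc = accuracy(y_test_example, y_pred_example)
print(acc)  # 6 of the 8 predictions match, so this prints 0.75
```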

However, any time you use classification accuracy as your evaluation metric, it is important to compare it with null accuracy, which is the accuracy that could be achieved by always predicting the most frequent class.

Null accuracy answers the question: if my model were to predict the predominant class 100 percent of the time, how often would it be correct? In the scenario above, 32% of the values in y_test are 1 (ones). In other words, a dumb model that always predicts that the patient does not have diabetes would be right 68% of the time (the share of zeros). This provides a baseline against which we might want to measure our logistic regression model.

When we compare the null accuracy of 68% with the model accuracy of 69%, our model doesn't look very good. This demonstrates one weakness of classification accuracy as a model evaluation metric: it doesn't tell us anything about the underlying distribution of the testing set.
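Here is one way null accuracy could be computed, as a small sketch. The function `null_accuracy` and the list `y_example` are hypothetical: the list is constructed with 8 ones and 17 zeros purely so that 32% of the labels are 1, mirroring the proportion described above. It is not the real testing set.

```python
from collections import Counter

def null_accuracy(y_true):
    """Return the most frequent label and the accuracy of always predicting it."""
    most_common_label, count = Counter(y_true).most_common(1)[0]
    return most_common_label, count / len(y_true)

# Hypothetical labels: 8 ones (32%) and 17 zeros (68%)
y_example = [1] * 8 + [0] * 17

label, baseline = null_accuracy(y_example)
print(label, baseline)  # the majority class is 0, with a baseline of 17/25 = 0.68
```

Any model worth keeping should beat this baseline by a meaningful margin, which is why it is worth computing before celebrating an accuracy score.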

In Summary:

- Classification accuracy is the easiest classification metric to understand

- But, it does not tell you the underlying distribution of response values

- And, it does not tell you what "types" of errors your classifier is making.

Let's now look at the confusion matrix.

Confusion matrix

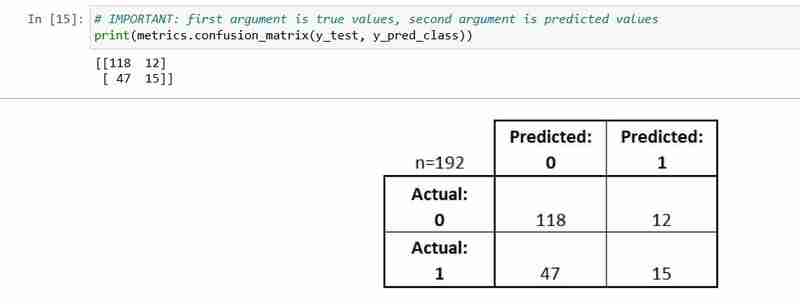

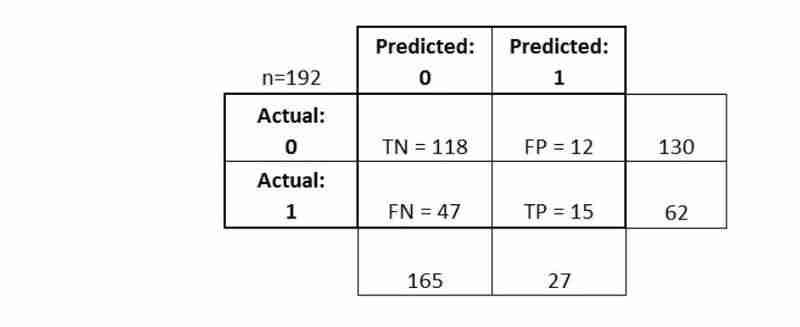

The confusion matrix is a table that describes the performance of a classification model.

It helps you understand the performance of your classifier, but it is not a model evaluation metric, so you can't tell scikit-learn to choose the model with the best confusion matrix. However, many metrics can be calculated from the confusion matrix, and those can be used directly to choose between models.

- Every observation in the testing set is represented in exactly one box

- It's a 2x2 matrix because there are 2 response classes

- The format shown here is not universal

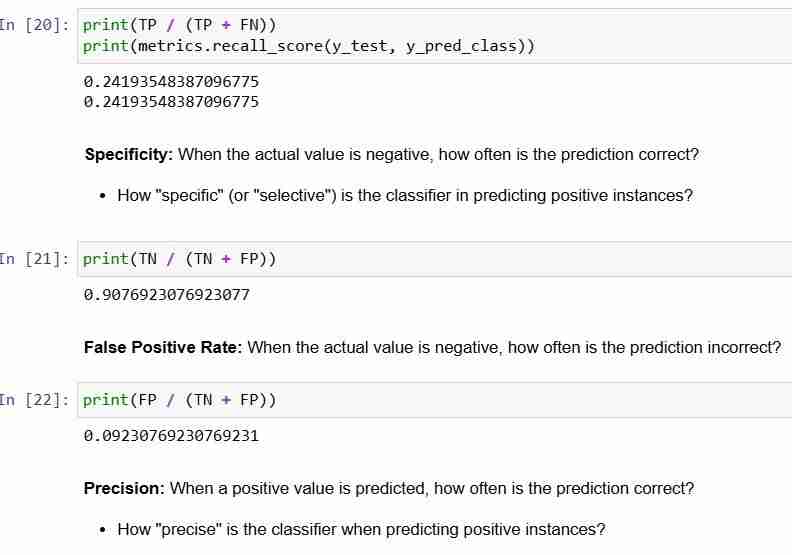

Let's explain some of its basic terminology.

- True Positives (TP): we correctly predicted that they do have diabetes

- True Negatives (TN): we correctly predicted that they don't have diabetes

- False Positives (FP): we incorrectly predicted that they do have diabetes (a "Type I error")

- False Negatives (FN): we incorrectly predicted that they don't have diabetes (a "Type II error")
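The four terms above can be counted directly from the true and predicted labels. The sketch below assumes that label 1 means "has diabetes" (the positive class); the function name `confusion_counts` and the example lists are hypothetical.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count TP, TN, FP, and FN for a binary classifier."""
    tp = tn = fp = fn = 0
    for t, p in zip(y_true, y_pred):
        if p == positive and t == positive:
            tp += 1  # predicted diabetes, patient has diabetes
        elif p != positive and t != positive:
            tn += 1  # predicted no diabetes, patient has no diabetes
        elif p == positive:
            fp += 1  # predicted diabetes, patient has none (Type I error)
        else:
            fn += 1  # predicted no diabetes, patient has it (Type II error)
    return {"TP": tp, "TN": tn, "FP": fp, "FN": fn}

# Hypothetical true labels and predictions for 8 patients
y_true_example = [1, 0, 0, 1, 0, 0, 1, 0]
y_pred_example = [0, 0, 0, 1, 0, 1, 1, 0]

counts = confusion_counts(y_true_example, y_pred_example)
print(counts)  # {'TP': 2, 'TN': 4, 'FP': 1, 'FN': 1}
```

Because every observation falls into exactly one of the four branches, the counts always add up to the size of the testing set.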

Let's see how we can calculate the metrics.
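As a sketch, several commonly used metrics follow from the four counts with simple arithmetic. The function `metrics_from_counts` and the counts passed to it are hypothetical; they are not the results of the diabetes model.

```python
def metrics_from_counts(tp, tn, fp, fn):
    """Compute common classification metrics from confusion-matrix counts.

    Assumes every denominator is non-zero, i.e. the data contains both
    classes and the model predicts the positive class at least once.
    """
    total = tp + tn + fp + fn
    return {
        "accuracy": (tp + tn) / total,                # how often the classifier is correct
        "misclassification_rate": (fp + fn) / total,  # how often it is wrong
        "sensitivity": tp / (tp + fn),                # recall: actual positives that were caught
        "specificity": tn / (tn + fp),                # actual negatives correctly identified
        "false_positive_rate": fp / (tn + fp),        # actual negatives wrongly flagged
        "precision": tp / (tp + fp),                  # predicted positives that were right
    }

# Hypothetical counts for a testing set of 100 patients
metrics = metrics_from_counts(tp=20, tn=60, fp=10, fn=10)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Which metric to prioritize depends on the cost of each error type. For a screening test, for example, you might accept more false positives in exchange for higher sensitivity.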

In Conclusion:

- The confusion matrix gives you a more complete picture of how your classifier is performing

- It also allows you to compute various classification metrics, and these metrics can guide your model selection
