Developing Efficient Algorithms - Measuring Algorithm Efficiency Using Big O Notation

Algorithm design is to develop a mathematical process for solving a problem. Algorithm analysis is to predict the performance of an algorithm. The preceding two chapters introduced classic data structures (lists, stacks, queues, priority queues, sets, and maps) and applied them to solve problems. This chapter will use a variety of examples to introduce common algorithmic techniques (dynamic programming, divide-and-conquer, and backtracking) for developing efficient algorithms.

The Big O notation expresses an algorithm's time complexity as a function of the input size. In that function you can ignore multiplicative constants and nondominating terms. Suppose two algorithms perform the same task, such as search (linear search vs. binary search). Which one is better? To answer this question, you might implement these algorithms and run the programs to get execution time. But there are two problems with this approach:

- First, many tasks run concurrently on a computer. The execution time of a particular program depends on the system load.

- Second, the execution time depends on specific input. Consider, for example, linear search and binary search. If an element to be searched happens to be the first in the list, linear search will find the element quicker than binary search.
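The second point can be made concrete by counting comparisons instead of measuring time. The following is a minimal sketch, not code from the text: the function names and the 1000-element sorted list are assumptions chosen for illustration.

```python
def linear_search_comparisons(items, key):
    """Return how many elements a linear search examines for key."""
    comparisons = 0
    for value in items:
        comparisons += 1
        if value == key:
            break
    return comparisons


def binary_search_comparisons(sorted_items, key):
    """Return how many midpoints a binary search examines for key."""
    low, high = 0, len(sorted_items) - 1
    comparisons = 0
    while low <= high:
        mid = (low + high) // 2
        comparisons += 1
        if sorted_items[mid] == key:
            break
        if sorted_items[mid] < key:
            low = mid + 1
        else:
            high = mid - 1
    return comparisons


data = list(range(1000))  # sorted, so both searches apply

# Key is the first element: linear search wins.
first_linear = linear_search_comparisons(data, 0)
first_binary = binary_search_comparisons(data, 0)

# Key is the last element: binary search wins by a wide margin.
last_linear = linear_search_comparisons(data, 999)
last_binary = binary_search_comparisons(data, 999)

print("first element:", first_linear, "vs", first_binary)
print("last element: ", last_linear, "vs", last_binary)
```

Which algorithm looks faster depends entirely on the key chosen, which is why a single measurement on a single input says little about either algorithm.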

It is very difficult to compare algorithms by measuring their execution time. To overcome these problems, a theoretical approach was developed to analyze algorithms independent of computers and specific input. This approach approximates the effect of a change in the size of the input. In this way, you can see how fast an algorithm’s execution time increases as the input size increases, so you can compare two algorithms by examining their growth rates.

Consider linear search. The linear search algorithm compares the key with the elements in the array sequentially until the key is found or the array is exhausted. If the key is not in the array, it requires n comparisons for an array of size n. If the key is in the array, it requires n/2 comparisons on average. The algorithm’s execution time is proportional to the size of the array. If you double the size of the array, you will expect the number of comparisons to double. The algorithm grows at a linear rate. The growth rate has an order of magnitude of n. Computer scientists use the Big O notation to represent the “order of magnitude.” Using this notation, the complexity of the linear search algorithm is O(n), pronounced as “order of n.” We call an algorithm with a time complexity of O(n) a linear algorithm, and it exhibits a linear growth rate.
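The doubling behavior described above can be checked with a small sketch. The `linear_search` function below is a hypothetical implementation written for this illustration; it returns the comparison count alongside the index so the growth can be observed directly.

```python
def linear_search(items, key):
    """Return (index, comparisons); index is -1 when key is absent."""
    comparisons = 0
    for index, value in enumerate(items):
        comparisons += 1
        if value == key:
            return index, comparisons
    return -1, comparisons


# Search for a key that is never present, so the whole array is scanned.
for n in (1000, 2000, 4000):
    _, comparisons = linear_search(list(range(n)), -1)
    print(n, comparisons)
```

Each time the array size doubles, the number of comparisons for an unsuccessful search doubles as well, which is the linear growth rate that O(n) describes.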

For the same input size, an algorithm’s execution time may vary, depending on the input. An input that results in the shortest execution time is called the best-case input, and an input that results in the longest execution time is the worst-case input. Best-case analysis and worst-case analysis are to analyze the algorithms for their best-case input and worst-case input. Best-case and worst-case analysis are not representative, but worst-case analysis is very useful. You can be assured that the algorithm will never be slower than the worst case.

An average-case analysis attempts to determine the average amount of time among all possible inputs of the same size. Average-case analysis is ideal, but difficult to perform, because for many problems it is hard to determine the relative probabilities and distributions of various input instances. Worst-case analysis is easier to perform, so the analysis is generally conducted for the worst case.

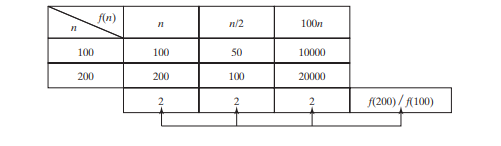

The linear search algorithm requires n comparisons in the worst case and n/2 comparisons in the average case if you are nearly always looking for something known to be in the list. Using the Big O notation, both cases require O(n) time. The multiplicative constant (1/2) can be omitted. Algorithm analysis is focused on growth rate. The multiplicative constants have no impact on growth rates. The growth rate for n/2 or 100n is the same as for n, as illustrated in the table below (Growth Rates). Therefore, O(n) = O(n/2) = O(100n).
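One way to see why the constants drop out is to ask how much each cost function grows when n doubles. This is a small sketch under that framing; `growth_ratio` and the `costs` dictionary are names introduced here, not part of the original text.

```python
def growth_ratio(cost, n):
    """Return the factor by which cost grows when n is doubled."""
    return cost(2 * n) / cost(n)


# The three cost functions compared in the text.
costs = {
    "n/2": lambda n: n / 2,
    "n": lambda n: n,
    "100n": lambda n: 100 * n,
}

ratios = {name: growth_ratio(cost, 1000) for name, cost in costs.items()}

for name, ratio in ratios.items():
    print(f"{name}: doubling n multiplies the cost by {ratio}")
```

All three functions grow by the same factor when the input doubles, so they share one growth rate even though their absolute values differ by a factor of up to 200.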

Consider the algorithm for finding the maximum number in an array of n elements. To find the maximum number if n is 2, it takes one comparison; if n is 3, two comparisons. In general, it takes n - 1 comparisons to find the maximum number in a list of n elements. Algorithm analysis is for large input size. If the input size is small, there is no significance in estimating an algorithm’s efficiency. As n grows larger, the n part in the expression n - 1 dominates the complexity. The Big O notation allows you to ignore the nondominating part (e.g., -1 in the expression n - 1) and highlight the important part (e.g., n in the expression n - 1). Therefore, the complexity of this algorithm is O(n).
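The n - 1 count can be verified with a short sketch. The `find_max` function below is a hypothetical implementation written for this illustration, and it assumes a non-empty list.

```python
def find_max(numbers):
    """Return (maximum, comparisons) for a non-empty list of numbers."""
    largest = numbers[0]
    comparisons = 0
    # Every element after the first is compared once against the current maximum.
    for value in numbers[1:]:
        comparisons += 1
        if value > largest:
            largest = value
    return largest, comparisons


for n in (2, 3, 1000):
    maximum, comparisons = find_max(list(range(n)))
    print(n, maximum, comparisons)
```

For n = 1000 the count is 999, and the difference between 999 and 1000 is negligible, which is why the -1 term is dropped.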

The Big O notation estimates the execution time of an algorithm in relation to the input size. If the time is not related to the input size, the algorithm is said to take constant time with the notation O(1). For example, a method that retrieves an element at a given index in an array takes constant time, because the time does not grow as the size of the array increases.

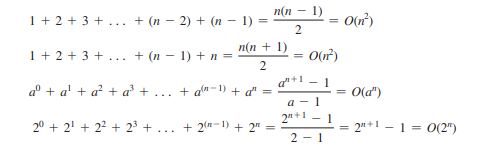

The following mathematical summations are often useful in algorithm analysis:
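The identities themselves are not reproduced here. As an assumption about what was intended, these are the standard closed forms most often used when counting loop iterations:

$$
\begin{aligned}
1 + 2 + 3 + \cdots + (n - 1) + n &= \frac{n(n + 1)}{2} = O(n^2) \\
a^0 + a^1 + a^2 + \cdots + a^{n-1} + a^n &= \frac{a^{n+1} - 1}{a - 1} = O(a^n), \quad a > 1 \\
2^0 + 2^1 + 2^2 + \cdots + 2^{n-1} + 2^n &= 2^{n+1} - 1 = O(2^n)
\end{aligned}
$$

The third identity is the second with a = 2. The first one explains, for example, why a nested loop whose inner loop runs 1, 2, ..., n times performs on the order of n^2 steps in total.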

Time complexity is a measure of execution time using the Big O notation. Similarly, you can measure space complexity using the Big O notation. Space complexity measures the amount of memory space used by an algorithm. The space complexity for most algorithms presented in the book is O(n); that is, they exhibit a linear growth rate relative to the input size. For example, the space complexity for linear search is O(n), counting the array that holds the input.
