Circuit Breakers in Go: Stop Cascading Failures

Circuit Breakers

A circuit breaker detects failures and encapsulates the logic for handling them so that the same failure does not keep recurring. Circuit breakers are useful for network calls to external services, databases, or any other part of your system that might fail temporarily. By using a circuit breaker, you can prevent cascading failures, manage temporary errors, and keep the system stable and responsive while part of it is down.

Cascading Failures

Cascading failures occur when a failure in one part of the system triggers failures in other parts, leading to widespread disruption. An example is when a microservice in a distributed system becomes unresponsive, causing dependent services to time out and eventually fail. Depending on the scale of the application, the impact can be catastrophic: performance degrades and the user experience suffers.

Circuit Breaker Patterns

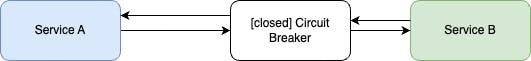

The circuit breaker is a pattern that operates in three different states:

1. Closed State: In the closed state, the circuit breaker lets all requests pass through to the target service as they normally would. If the requests are successful, the circuit remains closed. However, if a certain threshold of failures is reached, the circuit transitions to the open state. Think of it like a fully operational service where users can log in and access data without issues. Everything is running smoothly.

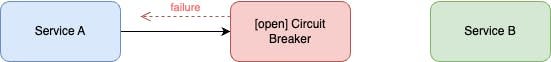

2. Open State: In the open state, the circuit breaker immediately fails all incoming requests without attempting to contact the target service. This state prevents further overload of the failing service and gives it time to recover. After a predefined timeout, the circuit breaker moves to the half-open state. For example, imagine an online store where every purchase attempt suddenly starts failing. To avoid overwhelming the system, the store temporarily stops accepting new purchase requests.

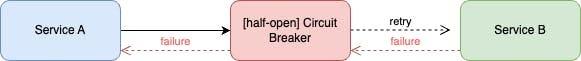

3. Half-Open State: In the half-open state, the circuit breaker allows a limited, configurable number of test requests to pass through to the target service. If these requests succeed, the circuit transitions back to the closed state. If they fail, the circuit returns to the open state. In the online store example from the open state, this is where the store starts allowing a few purchase attempts to see whether the issue has been fixed. If those attempts succeed, the store fully reopens and accepts new purchase requests.

This diagram shows the circuit breaker testing whether requests to Service B succeed; the requests fail, so the circuit breaks again:

The follow-up diagram shows the test requests to Service B succeeding: the circuit is closed, and all further calls are routed to Service B again:

Note: Key configurations for a circuit breaker include the failure threshold (number of failures needed to open the circuit), the timeout for the open state, and the number of test requests in the half-open state.
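To make the three states and the three key settings concrete, here is a minimal sketch of the state machine using only the standard library. It is not how any particular library implements the pattern: the names Breaker, NewBreaker, Call, ErrOpen, and errDemo are made up for illustration, the breaker has no locking (so it is only safe from a single goroutine), and the clock is replaceable so the demo does not have to wait for a real timeout.

```go
package main

import (
	"errors"
	"fmt"
	"time"
)

// State is one of the three circuit breaker states.
type State int

const (
	Closed State = iota
	Open
	HalfOpen
)

var (
	// ErrOpen is returned when a call is rejected without being attempted.
	ErrOpen = errors.New("circuit breaker is open")
	// errDemo stands in for any failure of the target service.
	errDemo = errors.New("simulated failure")
)

// Breaker is a single-goroutine sketch: it has no locking.
type Breaker struct {
	failureThreshold int           // consecutive failures that open the circuit
	openTimeout      time.Duration // time spent open before probing again
	testRequests     int           // consecutive successes needed to close

	state               State
	failures, successes int
	openedAt            time.Time
	now                 func() time.Time // replaceable clock, used by main below
}

// NewBreaker returns a closed breaker configured with the three key settings.
func NewBreaker(failureThreshold int, openTimeout time.Duration, testRequests int) *Breaker {
	return &Breaker{failureThreshold: failureThreshold, openTimeout: openTimeout,
		testRequests: testRequests, now: time.Now}
}

// State reports the state as of the last call.
func (b *Breaker) State() State { return b.state }

// Call runs fn if the current state allows it and records the outcome.
func (b *Breaker) Call(fn func() error) error {
	if b.state == Open {
		if b.now().Sub(b.openedAt) < b.openTimeout {
			return ErrOpen // fail fast: the target is not contacted
		}
		b.state, b.successes = HalfOpen, 0 // timeout elapsed: start probing
	}
	err := fn()
	switch {
	case err != nil && b.state == HalfOpen:
		b.trip() // a failed test request reopens the circuit
	case err != nil:
		if b.failures++; b.failures >= b.failureThreshold {
			b.trip()
		}
	case b.state == HalfOpen:
		if b.successes++; b.successes >= b.testRequests {
			b.state, b.failures = Closed, 0
		}
	default:
		b.failures = 0 // a success while closed resets the failure streak
	}
	return err
}

// trip moves the breaker to the open state and starts the timeout.
func (b *Breaker) trip() {
	b.state, b.failures, b.openedAt = Open, 0, b.now()
}

func main() {
	clock := time.Unix(0, 0)
	b := NewBreaker(3, 30*time.Second, 2)
	b.now = func() time.Time { return clock } // fake clock: no real waiting
	ok := func() error { return nil }

	for i := 0; i < 3; i++ {
		b.Call(func() error { return errDemo })
	}
	fmt.Println(b.State() == Open, b.Call(ok) == ErrOpen) // true true

	clock = clock.Add(31 * time.Second) // let the open timeout elapse
	b.Call(ok)
	fmt.Println(b.State() == HalfOpen) // true
	b.Call(ok)
	fmt.Println(b.State() == Closed) // true
}
```

Note that the move from open to half-open happens lazily, on the first call after the timeout, rather than on a background timer. A production breaker would also need a mutex and a cap on concurrent test requests while half-open.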

Implementing Circuit Breakers in Go

This article assumes prior knowledge of Go.

As with any software engineering pattern, circuit breakers can be implemented in various languages. This article focuses on Go. While there are several libraries available for this purpose, such as goresilience, go-resiliency, and gobreaker, we will concentrate on the gobreaker library.

Pro Tip: You can check the internal implementation of the gobreaker package here.

Let’s consider a simple Go application where a circuit breaker handles calls to an external API. This basic example demonstrates how to wrap an external API call with a circuit breaker:

Let’s touch on a few important things:

- The gobreaker.NewCircuitBreaker function initializes the circuit breaker with our custom settings.

- The cb.Execute method wraps the HTTP request, automatically managing the circuit state.

- MaxRequests is the maximum number of requests allowed to pass through when the state is half-open.

- Interval is the cyclic period in the closed state after which the circuit breaker clears its internal counts.

- Timeout is the duration before transitioning from the open to the half-open state.

- ReadyToTrip is called with a copy of counts whenever a request fails in the closed state. If ReadyToTrip returns true, the circuit breaker is placed into the open state. In our case, it returns true once requests have failed more than three consecutive times, that is, on the fourth consecutive failure.

- OnStateChange is called whenever the state of the circuit breaker changes. You would usually collect metrics about the state change here and report them to a metrics collector of your choice.
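The ReadyToTrip threshold is easy to misread by one, so here is a small sketch that counts how many back-to-back failures it takes for a predicate to fire. The Counts type below is a local stand-in that mirrors three fields of gobreaker.Counts so the example runs without the library; firstTrippingFailure and its limit parameter are hypothetical helpers, not part of gobreaker.

```go
package main

import "fmt"

// Counts is a local stand-in mirroring three fields of gobreaker.Counts.
type Counts struct {
	Requests            uint32
	TotalFailures       uint32
	ConsecutiveFailures uint32
}

// readyToTrip uses the same condition as the settings described above.
func readyToTrip(c Counts) bool {
	return c.ConsecutiveFailures > 3
}

// firstTrippingFailure simulates back-to-back failures and returns the
// number of the failure on which trip first returns true. It returns 0 if
// the predicate never fires within limit failures.
func firstTrippingFailure(trip func(Counts) bool, limit uint32) uint32 {
	var c Counts
	for c.ConsecutiveFailures < limit {
		c.Requests++
		c.TotalFailures++
		c.ConsecutiveFailures++
		if trip(c) {
			return c.ConsecutiveFailures
		}
	}
	return 0
}

func main() {
	// "> 3" means three failures are tolerated and the fourth one trips.
	fmt.Println(firstTrippingFailure(readyToTrip, 10)) // 4

	// ">= 3" would trip on the third failure instead.
	atLeastThree := func(c Counts) bool { return c.ConsecutiveFailures >= 3 }
	fmt.Println(firstTrippingFailure(atLeastThree, 10)) // 3
}
```

If you want the breaker to open after exactly N consecutive failures, a condition of the form ConsecutiveFailures >= N states that intent more directly than ConsecutiveFailures > N-1.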

Let’s write some unit tests to verify our circuit breaker implementation. I will only explain the most important ones. You can check here for the full code.

- We will write a test that simulates consecutive failed requests and checks that the circuit breaker trips to the open state. Because our condition is counts.ConsecutiveFailures > 3, three failures are not enough; we expect the circuit breaker to trip (open) when the fourth failure occurs. Here's what the test looks like:

```go
t.Run("FailedRequests", func(t *testing.T) {
	// Override callExternalAPI to simulate failure
	callExternalAPI = func() (int, error) {
		return 0, errors.New("simulated failure")
	}

	for i := 0; i
```

- We will test the open > half-open > closed transitions. We first simulate an open circuit and wait for the timeout to elapse. After the timeout, the circuit moves to half-open and lets a trial request through; successful trial requests are then needed for the circuit to close fully again. If a trial request fails instead, the circuit goes back to being open. Here’s what the test looks like:

```go
// Simulates the circuit breaker being open,
// waits for the defined timeout,
// then checks if it closes again after a successful request.
t.Run("RetryAfterTimeout", func(t *testing.T) {
	// Simulate circuit breaker opening
	callExternalAPI = func() (int, error) {
		return 0, errors.New("simulated failure")
	}

	for i := 0; i
```

- Let’s test the ReadyToTrip condition with a lower threshold. We'll use a variable that tracks consecutive failures. The ReadyToTrip callback is updated to trip once counts.ConsecutiveFailures > 2, that is, on the third consecutive failure. We will write a test that simulates failures and verifies both the count and the transition to the open state after the specified number of failures.

```go
t.Run("ReadyToTrip", func(t *testing.T) {
	failures := 0
	settings.ReadyToTrip = func(counts gobreaker.Counts) bool {
		failures = int(counts.ConsecutiveFailures)
		return counts.ConsecutiveFailures > 2 // Trips once more than 2 consecutive failures are recorded
	}
	cb = gobreaker.NewCircuitBreaker(settings)

	// Simulate failures
	callExternalAPI = func() (int, error) {
		return 0, errors.New("simulated failure")
	}

	for i := 0; i
```

Advanced Strategies

We can take it a step further by adding an exponential backoff strategy to our circuit breaker implementation. To keep this article simple and concise, we will demonstrate only exponential backoff. However, there are other advanced strategies for circuit breakers worth mentioning, such as load shedding, bulkheading, fallback mechanisms, and context and cancellation. These strategies enhance the robustness and functionality of circuit breakers. Here’s an example of using the exponential backoff strategy:

Exponential Backoff

Circuit breaker with exponential backoff

Let’s make a couple of things clear:

Custom Backoff Function: The exponentialBackoff function implements an exponential backoff strategy with jitter. It calculates the backoff time from the number of attempts, so the delay grows exponentially with each retry.

Handling Retries: As you can see in the /api handler, the logic now includes a loop that attempts to call the external API up to a specified number of attempts (attempts := 5). After each failed attempt, we wait for a duration determined by the exponentialBackoff function before retrying.

Circuit Breaker Execution: The circuit breaker is used within the loop. If the external API call succeeds (err == nil), the loop breaks and the successful result is returned. If all attempts fail, an HTTP 503 (Service Unavailable) error is returned.

Integrating a custom backoff strategy into a circuit breaker implementation helps handle transient errors more gracefully. The increasing delays between retries reduce the load on failing services and give them time to recover. In our code, the exponentialBackoff function adds those delays between retries when calling the external API.
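The backoff and retry logic described here can be sketched with the standard library alone. The version below is an illustration under a few assumptions, not the article's exact listing: the base delay (100ms), the cap (5s), and the jitter range (up to half the delay) are arbitrary choices, retry and errTemporary are hypothetical names, and the fn argument stands in for a call wrapped in cb.Execute.

```go
package main

import (
	"errors"
	"fmt"
	"math/rand"
	"time"
)

// errTemporary stands in for a transient failure of the external API.
var errTemporary = errors.New("temporary failure")

// exponentialBackoff returns base * 2^attempt plus random jitter of up to
// half that delay, never exceeding max. attempt starts at 0.
func exponentialBackoff(attempt int, base, max time.Duration) time.Duration {
	d := base
	for i := 0; i < attempt && d < max; i++ {
		d *= 2 // double per attempt, stopping early once the cap is reached
	}
	if d > max {
		d = max
	}
	jitter := time.Duration(rand.Int63n(int64(d)/2 + 1))
	if d+jitter > max {
		return max
	}
	return d + jitter
}

// retry calls fn up to attempts times and waits between failed tries.
// It returns the number of tries made and the last error. sleep is
// injectable so demos and tests need not really wait; nil means time.Sleep.
func retry(attempts int, sleep func(time.Duration), fn func() error) (int, error) {
	if sleep == nil {
		sleep = time.Sleep
	}
	var err error
	for i := 0; i < attempts; i++ {
		if err = fn(); err == nil {
			return i + 1, nil
		}
		if i < attempts-1 { // no point waiting after the final attempt
			sleep(exponentialBackoff(i, 100*time.Millisecond, 5*time.Second))
		}
	}
	return attempts, err
}

func main() {
	calls := 0
	flaky := func() error { // fails twice, then succeeds
		calls++
		if calls < 3 {
			return errTemporary
		}
		return nil
	}

	var waited []time.Duration
	record := func(d time.Duration) { waited = append(waited, d) }

	tries, err := retry(5, record, flaky)
	fmt.Println(tries, err, len(waited)) // 3 <nil> 2
}
```

The jitter spreads retries from many clients over time so they do not all hit a recovering service at the same instant. In an HTTP handler, an error returned after the last attempt is where the 503 response would be written.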

Additionally, we can integrate metrics and logging to monitor circuit breaker state changes using tools like Prometheus for real-time monitoring and alerting. Here’s a simple example:

Implementing a circuit breaker pattern with advanced strategies in go

As you’ll see, we have now done the following:

- In L16–21, we define a Prometheus counter vector to keep track of the number of requests and their state (success, failure, circuit breaker state changes).

- In L25–26, the metrics defined are registered with Prometheus in the init function.

Pro Tip: The init function in Go is used to initialize the state of a package before the main function or any other code in the package is executed. In this case, the init function registers the requestCount metric with Prometheus. This ensures that Prometheus is aware of the metric and can start collecting data as soon as the application starts running.

- We create the circuit breaker with custom settings, including the ReadyToTrip function that increases the failure counter and determines when to trip the circuit.

- We use OnStateChange to log state changes and increment the corresponding Prometheus metric.

- We expose the Prometheus metrics at the /metrics endpoint.
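As a dependency-free illustration of the last two points, the sketch below counts state transitions in a callback and serves them at /metrics in the Prometheus text format. It deliberately does not use the Prometheus client library: the stateChanges type is a hand-rolled stand-in for a counter vector, the metric name circuit_breaker_state_changes_total is hypothetical, and the callback takes the states as plain strings rather than gobreaker's State type.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
	"sort"
	"strings"
	"sync"
)

// stateChanges counts transitions keyed by (from, to). It is a hand-rolled
// stand-in for a Prometheus counter vector with two labels.
type stateChanges struct {
	mu     sync.Mutex
	counts map[[2]string]int
}

// OnStateChange has the same shape as the breaker's state-change callback,
// with states passed as strings to keep the sketch library-free.
func (s *stateChanges) OnStateChange(name, from, to string) {
	s.mu.Lock()
	defer s.mu.Unlock()
	if s.counts == nil {
		s.counts = make(map[[2]string]int)
	}
	s.counts[[2]string{from, to}]++
}

// render writes one line per label pair in the Prometheus text format,
// sorted so the output is stable.
func (s *stateChanges) render() string {
	s.mu.Lock()
	defer s.mu.Unlock()
	lines := make([]string, 0, len(s.counts))
	for k, n := range s.counts {
		lines = append(lines, fmt.Sprintf(
			"circuit_breaker_state_changes_total{from=%q,to=%q} %d", k[0], k[1], n))
	}
	sort.Strings(lines)
	var b strings.Builder
	for _, l := range lines {
		b.WriteString(l + "\n")
	}
	return b.String()
}

// ServeHTTP lets the counter be mounted directly at /metrics.
func (s *stateChanges) ServeHTTP(w http.ResponseWriter, _ *http.Request) {
	fmt.Fprint(w, s.render())
}

func main() {
	s := &stateChanges{}
	s.OnStateChange("api", "closed", "open")
	s.OnStateChange("api", "open", "half-open")
	s.OnStateChange("api", "closed", "open")

	mux := http.NewServeMux()
	mux.Handle("/metrics", s)

	// Exercise the endpoint in-process instead of starting a real server.
	rec := httptest.NewRecorder()
	mux.ServeHTTP(rec, httptest.NewRequest("GET", "/metrics", nil))
	fmt.Print(rec.Body.String())
}
```

In a real service, the Prometheus client library handles registration, labels, and exposition for you; the point here is only to show where the state-change callback and the /metrics endpoint meet.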

Wrapping Up

To wrap up, I hope you saw how circuit breakers play a huge role in building resilient and reliable systems. By proactively preventing cascading failures, they improve the reliability of microservices and distributed systems and help keep the user experience smooth even when parts of the system fail.

Keep in mind, any system designed for scalability must incorporate strategies to gracefully handle failures and swiftly recover — Oluwafemi , 2024

Originally published at https://oluwafemiakinde.dev on June 7, 2024.
